Musk is spending up to $4 billion on 100,000 H100 chips, all to train xAI's Grok3! The "Iron Man" of the tech world is not only making waves in rockets and electric vehicles, but is now making a big splash in AI as well.

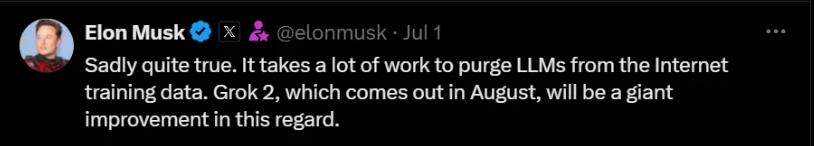

Musk posted twice on Twitter to promote xAI. He announced that Grok2 will be released in August and that Grok3, trained on 100,000 H100 chips, is coming by the end of the year. With this massive investment in compute, xAI is taking direct aim at OpenAI's GPT models in a bid to build the next generation of large language models.

Since the release of Grok1.5, Musk has been actively promoting his AI startup, xAI. Grok, an AI chatbot developed by xAI, is Musk's official entry into generative AI and competes with giants such as OpenAI, Google, and Meta. xAI not only open-sources its work but is also building supercomputing centers, a sign of its grand ambitions.

Since March this year, xAI has launched the large language model Grok1.5 and, after it, its first multimodal model, Grok1.5 Vision (Grok1.5V). xAI has stated that Grok1.5V "can compete with existing leading multimodal models" in areas such as multidisciplinary reasoning, document understanding, scientific charts, and tables.

In May, Musk acknowledged that, as a young company, xAI still had a lot of work to do on Grok before it could compete with Google DeepMind and OpenAI. Even so, xAI has been working quietly, concentrating on model performance in the hope of putting pressure on the bigger players.

Now Musk has announced that Grok2 will be released in August. He presents it as a significant step forward in training data that may help solve the so-called "centipede effect," in which models trained on other models' output end up producing the same kind of output. This is good news for users of large language models.

Musk also revealed that Grok3, trained on 100,000 NVIDIA H100 GPUs, will be released by the end of the year and will be "something special." The GPU order is valued at $3 billion to $4 billion, or roughly $30,000 to $40,000 per chip, which shows how much weight Musk places on AI and how heavily he is investing in it.

Which users will get access to Grok2 and Grok3 is also eagerly awaited, and expectations are high for a model trained on 100,000 H100 chips. Although other tech giants such as Meta are buying even more GPUs, xAI's move clearly signals its ambitions in AI.

Musk is also personally overseeing xAI's data center, which uses liquid cooling to keep up with the soaring demand for AI computing. Liquid cooling offers high cooling efficiency, energy savings, and environmental benefits, and has become a preferred choice for AI data centers.