Kyutai, an independent French non-profit AI research lab, has launched Moshi, a voice assistant built on a real-time, native multimodal foundation model. The model reproduces some of the capabilities OpenAI demonstrated with GPT-4o in May and, in certain respects, goes beyond them.

Product Entry: https://top.aibase.com/tool/moshi-chat

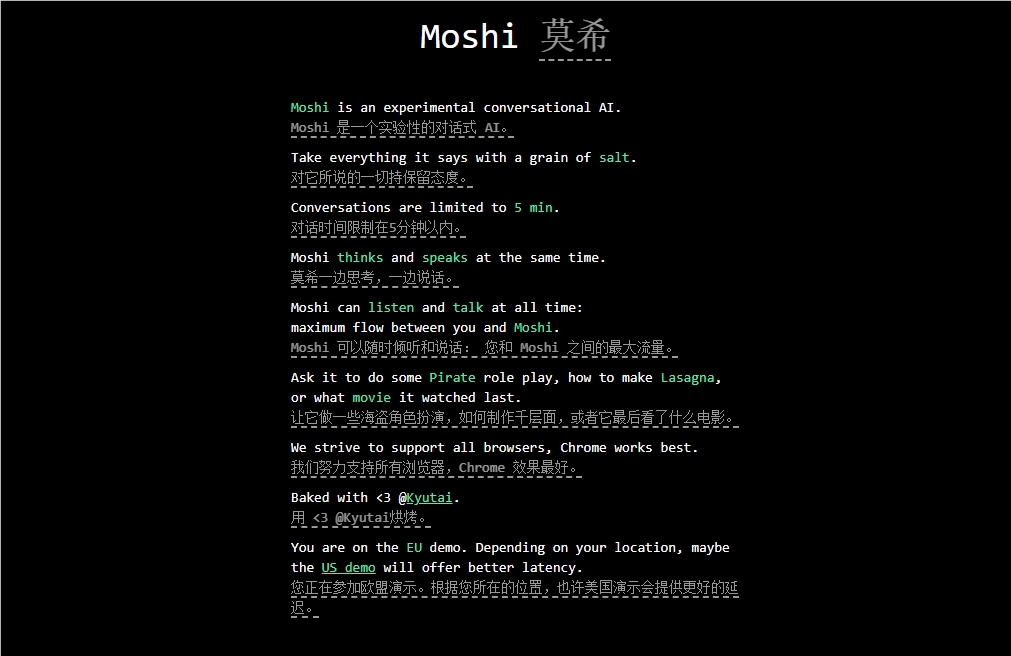

Moshi is designed to understand and express emotions and can converse in different accents, including a French one. It listens and generates speech at the same time while keeping a continuous stream of textual "thoughts" running alongside the audio. According to Kyutai, Moshi can express a range of human-like emotions and speak in 70 different tones and styles.

A notable feature of Moshi is that it handles two audio streams at once, one for its own speech and one for the user's, which lets it listen and speak simultaneously. This real-time interaction comes from joint pretraining on a mix of text and audio, using synthetic text data generated by Helium, Kyutai's 7-billion-parameter language model.

Moshi was fine-tuned on 100,000 synthetic "spoken-style" conversations that were converted to audio with text-to-speech (TTS) technology. The model's own voice was trained on synthetic data produced by a separate TTS model. Kyutai reports an end-to-end latency of 200 milliseconds.

Kyutai has also developed a smaller variant of Moshi that can run on a MacBook or a consumer-grade GPU, making it accessible to a wider range of users.

Highlights: 🔍 Kyutai has released Moshi, a real-time, native multimodal foundation model.

🔍 Moshi can understand and express emotions and supports multiple accents.

🔍 The model was fine-tuned on 100,000 synthetic conversations, responds with a reported end-to-end latency of 200 milliseconds, and has a smaller variant that runs on consumer hardware.