IBM opened the Granite13B LLM for enterprise applications in May. Now Armand Ruiz, vice president of IBM's AI platform products, has publicly disclosed the full contents of the 6.48TB dataset used to train Granite13B.

After strict preprocessing, the dataset shrank from 6.48TB to 2.07TB, a reduction of 68%. Ruiz emphasizes that this step is crucial for producing a high-quality, unbiased, ethical, and legally compliant dataset that meets the needs of enterprise applications.
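The 68% figure can be checked directly from the two sizes quoted above. The snippet below is only a quick arithmetic check; the function name `percent_reduction` is made up for illustration.

```python
def percent_reduction(before_tb: float, after_tb: float) -> float:
    """Return the size reduction as a percentage of the original size."""
    return (before_tb - after_tb) / before_tb * 100


# Sizes quoted in the article: 6.48TB before and 2.07TB after preprocessing.
reduction = percent_reduction(6.48, 2.07)
print(f"{reduction:.1f}% smaller")  # prints "68.1% smaller"
```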

The dataset is carefully curated from multiple sources, including:

- arXiv: Over 2.4 million preprint scientific papers.

- Common Crawl: An open repository of web crawl data.

- DeepMind Mathematics: Math question and answer pairs.

- Free Law: Public domain legal opinions from U.S. courts.

- GitHub Clean: Code data from CodeParrot.

- Hacker News: Computer science and entrepreneurship news from 2007 to 2018.

- OpenWebText: The open-source version of OpenAI's WebText corpus.

- Project Gutenberg (PG-19): Free ebooks focusing on early works.

- PubMed Central: Biomedical and life science papers.

- SEC Filings: 10-K/Q submissions from the U.S. Securities and Exchange Commission (SEC) from 1934 to 2022.

- Stack Exchange: User contributions on the Stack Exchange network.

- USPTO: U.S. patents granted from May 1975 to May 2023.

- Webhose: Unstructured web content converted into machine-readable data.

- Wikimedia: Eight English Wikimedia projects.

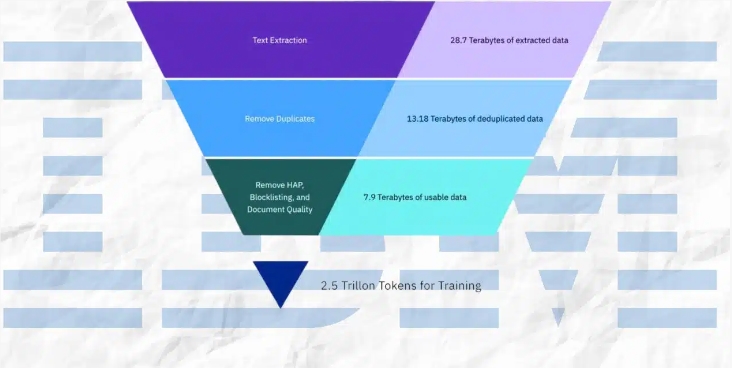

Preprocessing includes text extraction; deduplication; language identification; sentence splitting; labeling for hate, abuse, and profanity; document quality labeling; URL masking labeling; filtering; and tokenization.

These steps annotate the data and then filter it against preset thresholds, so that only data meeting the quality bar reaches model training.
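To make the annotate-then-filter idea concrete, here is a minimal sketch of a few of the stages named above: deduplication, sentence splitting, hate/abuse/profanity labeling, and threshold-based filtering. It is not IBM's pipeline. The `Doc` class, the `BLOCKED_TERMS` word list, and the threshold values are all hypothetical stand-ins, and a production system would likely use trained classifiers rather than a word list.

```python
import hashlib
import re
from dataclasses import dataclass, field

# Hypothetical placeholders, not values from IBM's pipeline.
BLOCKED_TERMS = {"badword"}  # stand-in for a hate/abuse/profanity classifier
HAP_THRESHOLD = 0.1          # max allowed fraction of flagged sentences
MIN_WORDS = 5                # crude document-quality floor


@dataclass
class Doc:
    text: str
    labels: dict = field(default_factory=dict)


def deduplicate(docs):
    """Exact deduplication on a hash of lowercased, whitespace-normalized text."""
    seen, unique = set(), []
    for doc in docs:
        normalized = " ".join(doc.text.lower().split())
        key = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        if key not in seen:
            seen.add(key)
            unique.append(doc)
    return unique


def split_sentences(text):
    """Naive sentence splitter: break after ., ! or ? followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def annotate(doc):
    """Attach labels to the document without removing anything yet."""
    sentences = split_sentences(doc.text)
    flagged = sum(
        1 for s in sentences
        if BLOCKED_TERMS & set(re.findall(r"[a-z']+", s.lower()))
    )
    doc.labels["hap_score"] = flagged / len(sentences) if sentences else 0.0
    doc.labels["word_count"] = len(doc.text.split())
    return doc


def keep(doc):
    """Filter step: compare the labels against the preset thresholds."""
    return (doc.labels["hap_score"] <= HAP_THRESHOLD
            and doc.labels["word_count"] >= MIN_WORDS)


def preprocess(raw_texts):
    docs = deduplicate([Doc(t) for t in raw_texts])
    return [d for d in map(annotate, docs) if keep(d)]


sample = [
    "Granite is a family of language models. It targets enterprise use.",
    "granite is a family of  language models. It targets enterprise use.",
    "Too short.",
    "This sentence contains a badword in it. The second sentence is clean.",
]
cleaned = preprocess(sample)
print([d.text for d in cleaned])
```

Separating `annotate` from `keep` mirrors the label-first, filter-later structure described above: thresholds can be tuned without recomputing the labels.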

IBM has also released four versions of the Granite code models, with parameter counts ranging from 3 billion to 34 billion. Evaluated on a range of benchmarks, these models outperformed comparable models such as Code Llama and Llama 3.

Key points:

⭐ IBM disclosed the full contents of the 6.48TB dataset used to train the Granite13B LLM.

⭐ The dataset was reduced to 2.07TB after strict preprocessing, a reduction of 68%.

⭐ IBM released four versions of the Granite code models, with parameter counts ranging from 3 billion to 34 billion.