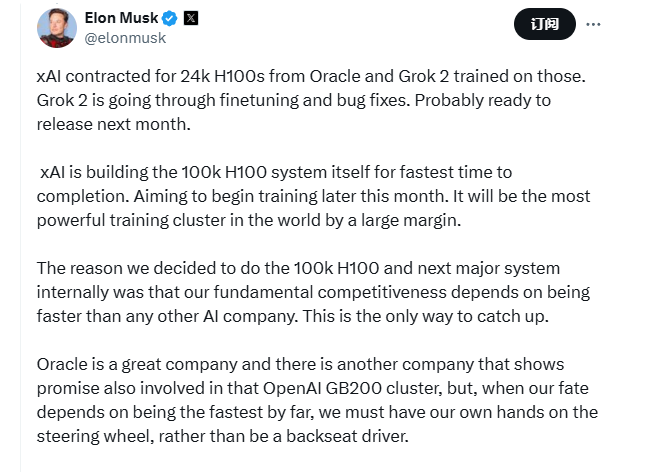

On July 9th, Musk announced that his artificial intelligence company xAI is building a supercomputer with 100,000 NVIDIA H100 GPUs, which is expected to be delivered and begin training by the end of this month. The move ends xAI's negotiations with Oracle over expanding their existing agreement to rent more NVIDIA chips.

Musk emphasized that this will be "the most powerful training cluster globally with a significant lead." He said that xAI's core competitive edge lies in speed, "which is the only way to close the gap."

Previously, xAI had rented 24,000 H100 chips from Oracle to train Grok2. Musk revealed that Grok2 is in the final tuning phase and is expected to be released next month at the earliest.

Despite ending talks on the expanded deal, Musk still praised Oracle as "a great company." He stressed that when the company's fate depends on speed, "we must take the wheel ourselves and not just sit in the backseat talking."

Notably, reports in May of this year said that xAI and Oracle were close to reaching a $10 billion expanded agreement. This announcement, however, suggests that xAI is shifting toward building its AI infrastructure independently.

The decision reflects the fierce competition in the AI field and the crucial role that top-tier computing resources play in it. Once xAI's new supercomputer is deployed, the industry will be watching closely to see how much it improves the performance of AI models.