In artificial intelligence, compute and training time have long been key constraints on progress. New research from the DeepMind team offers one way to ease them.

The team has proposed a new data curation method called JEST (multimodal contrastive learning with joint example selection). It cuts training time and compute by selecting the most useful batches of training data. According to the paper, JEST surpasses state-of-the-art models with up to 13× fewer training iterations and 10× less computation, roughly a 90% reduction in compute.

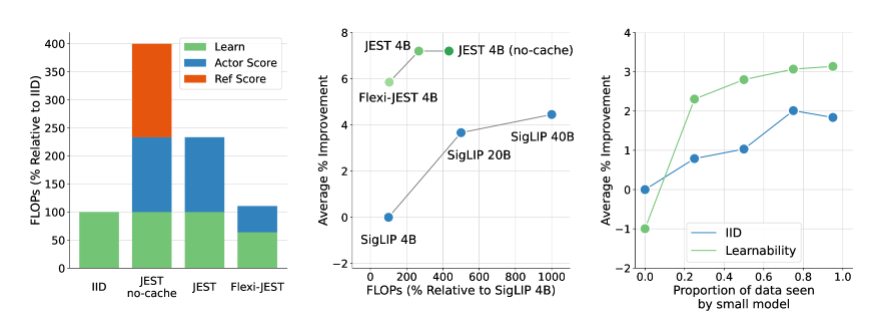

The core idea of JEST is to select the best batches of data jointly rather than scoring individual samples independently, which proves particularly effective for accelerating multimodal (image-text) contrastive learning. Compared with conventional data filtering for large-scale pretraining, JEST substantially reduces both the number of training iterations and the floating-point operations (FLOPs) required, and it surpasses the previous state of the art even with only 10% of the FLOP budget.
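To see why batch-level selection differs from per-example selection, the sketch below scores a whole batch under a pairwise sigmoid contrastive loss, where every (image i, text j) pair inside the batch contributes a term. It assumes learnability is the learner's loss minus a pretrained reference model's loss, and it uses hand-picked toy similarity logits; the function names are hypothetical and not taken from the paper's code. Because the off-diagonal (negative-pair) terms depend on which other examples share the batch, a batch's score is not simply the sum of its members' individual scores.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


def softplus(z: float) -> float:
    """Numerically stable log(1 + exp(z))."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def pairwise_sigmoid_losses(logits: Matrix) -> Matrix:
    """Loss for every (image i, text j) pair: a positive pair when i == j, negative otherwise.

    -log(sigmoid(label * logit)) is computed as softplus(-label * logit).
    """
    n = len(logits)
    return [
        [softplus(-(1.0 if i == j else -1.0) * logits[i][j]) for j in range(n)]
        for i in range(n)
    ]


def batch_learnability(learner_logits: Matrix, reference_logits: Matrix,
                       batch: Sequence[int]) -> float:
    """Sum of (learner loss - reference loss) over all pairs inside the batch."""
    learner = pairwise_sigmoid_losses(learner_logits)
    reference = pairwise_sigmoid_losses(reference_logits)
    return sum(learner[i][j] - reference[i][j] for i in batch for j in batch)


# Toy similarity logits for two image-text pairs (rows: images, columns: texts).
learner_logits = [[0.0, 2.0], [2.0, 0.0]]      # the learner confuses the two pairs
reference_logits = [[3.0, -3.0], [-3.0, 3.0]]  # the reference separates them cleanly

joint = batch_learnability(learner_logits, reference_logits, [0, 1])
separate = (batch_learnability(learner_logits, reference_logits, [0])
            + batch_learnability(learner_logits, reference_logits, [1]))
print(f"joint={joint:.3f} separate={separate:.3f}")
```

Here the joint score exceeds the sum of the two single-example scores, because the learner still confuses the two pairs' negatives, a term that only appears when both examples are in the same batch.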

The DeepMind study reports three key findings: selecting good batches of data is more effective than selecting individual data points; online model approximation makes this data filtering more efficient; and a small, well-curated dataset can steer data selection from a much larger, uncurated one. Together, these findings account for JEST's efficiency.

JEST scores data by learnability, building on earlier work on RHO-LOSS: the learnability of a data point is the difference between the loss of the model being trained (the learner) and the loss of a pretrained reference model. JEST favors data that is relatively easy for the reference model but still hard for the current learner, so training effort goes to examples that are learnable but not yet learned.
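A minimal sketch of the learnability rule, applied here to independent per-example selection. It assumes each example already has one loss from the learner and one from the reference model; the helper names and toy numbers are hypothetical. JEST itself scores whole batches jointly, so this illustrates only the scoring rule, not the full method.

```python
from typing import List, Sequence


def learnability(learner_losses: Sequence[float],
                 reference_losses: Sequence[float]) -> List[float]:
    """Learnability = learner loss - reference loss (higher means more worth training on)."""
    if len(learner_losses) != len(reference_losses):
        raise ValueError("need one learner loss and one reference loss per example")
    return [learner_loss - ref_loss
            for learner_loss, ref_loss in zip(learner_losses, reference_losses)]


def select_most_learnable(learner_losses: Sequence[float],
                          reference_losses: Sequence[float], k: int) -> List[int]:
    """Indices of the k highest-learnability examples, returned in ascending order."""
    scores = learnability(learner_losses, reference_losses)
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return sorted(ranked[:k])


# Example 0: hard for the learner, easy for the reference -> very learnable.
# Example 1: easy for both -> already learned.
# Example 2: hard for both -> possibly noisy or unlearnable.
# Example 3: moderately hard for the learner, easy for the reference.
learner = [2.0, 0.5, 3.0, 1.0]
reference = [0.5, 0.4, 2.9, 0.2]
print(select_most_learnable(learner, reference, k=2))  # [0, 3]
```

Subtracting the reference loss is what filters out example 2: a plain "pick the hardest examples" rule would choose it, even though the reference model suggests it may not be learnable.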

JEST also builds each batch iteratively, using a method based on blocked Gibbs sampling: in every iteration it samples a new subset of examples according to their learnability conditioned on the examples already selected. The same batch-building procedure can also be driven by other scores, including ratings from the pretrained reference model alone.
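The chunk-by-chunk batch building can be sketched as follows. This is a simplified illustration, not the paper's implementation: it assumes a precomputed pairwise learnability matrix `scores` over a candidate super-batch (for example, learner pairwise loss minus reference pairwise loss), and it assumes candidates are drawn with probability proportional to exp(score). The function names `sample_chunk` and `joint_select` are hypothetical. Each chunk is drawn conditionally on the examples already chosen, so a candidate's score includes its interactions with them.

```python
import math
import random
from typing import Dict, List

Matrix = List[List[float]]


def sample_chunk(logits: Dict[int, float], k: int, rng: random.Random) -> List[int]:
    """Draw k distinct candidates, each draw with probability proportional to exp(logit)."""
    pool = dict(logits)
    picked: List[int] = []
    for _ in range(k):
        items = list(pool)
        top = max(pool.values())
        weights = [math.exp(pool[i] - top) for i in items]  # shift by max for stability
        choice = rng.choices(items, weights=weights, k=1)[0]
        picked.append(choice)
        del pool[choice]
    return picked


def joint_select(scores: Matrix, batch_size: int, n_chunks: int, seed: int = 0) -> List[int]:
    """Build a batch chunk by chunk from a pairwise learnability matrix.

    scores[i][j] is the (assumed, precomputed) learnability contribution of the
    pair (i, j); the diagonal holds each example's own term.
    """
    n = len(scores)
    if n_chunks <= 0 or batch_size > n or batch_size % n_chunks != 0:
        raise ValueError("batch_size must be <= number of candidates and divisible by n_chunks")
    chunk_size = batch_size // n_chunks
    rng = random.Random(seed)
    selected: List[int] = []
    for _ in range(n_chunks):
        taken = set(selected)
        # Conditional learnability: own term plus interactions with already-selected examples.
        logits = {
            i: scores[i][i] + sum(scores[i][j] + scores[j][i] for j in selected)
            for i in range(n)
            if i not in taken
        }
        selected.extend(sample_chunk(logits, chunk_size, rng))
    return selected


# Toy super-batch of 6 candidates: examples 0 and 1 are far more learnable than the rest.
toy_scores = [[0.0] * 6 for _ in range(6)]
for i, own in enumerate([50.0, 50.0, -50.0, -50.0, -50.0, -50.0]):
    toy_scores[i][i] = own
print(sorted(joint_select(toy_scores, batch_size=2, n_chunks=2)))  # [0, 1]
```

Splitting the batch into several chunks lets later picks account for interactions with earlier ones, while sampling a whole chunk at once keeps each step cheap. With `n_chunks=1`, the sketch reduces to sampling from the independent (diagonal) scores.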

This DeepMind research is a notable step forward for AI training efficiency and offers new ideas and methods for the future development of AI. As JEST is further optimized and applied, it could open up even broader prospects for artificial intelligence.

Paper: https://arxiv.org/abs/2406.17711

Highlights:

🚀 **Revolution in Training Efficiency**: DeepMind's JEST method surpasses state-of-the-art models with up to 13× fewer training iterations and 10× less computation (about 90% less compute).

🔍 **Data Batch Filtering**: JEST significantly accelerates multimodal learning by jointly selecting the best batches of data instead of individual samples.

🛠️ **Innovative Training Method**: JEST uses online model approximation and reference models guided by small, high-quality datasets to steer which large-scale pretraining data the model learns from.