ByteDance's large model team has scored another success: its Depth Anything V2 model has been added to Apple's Core ML model library. The achievement is notable as a technical milestone, and also because the project's lead is an intern.

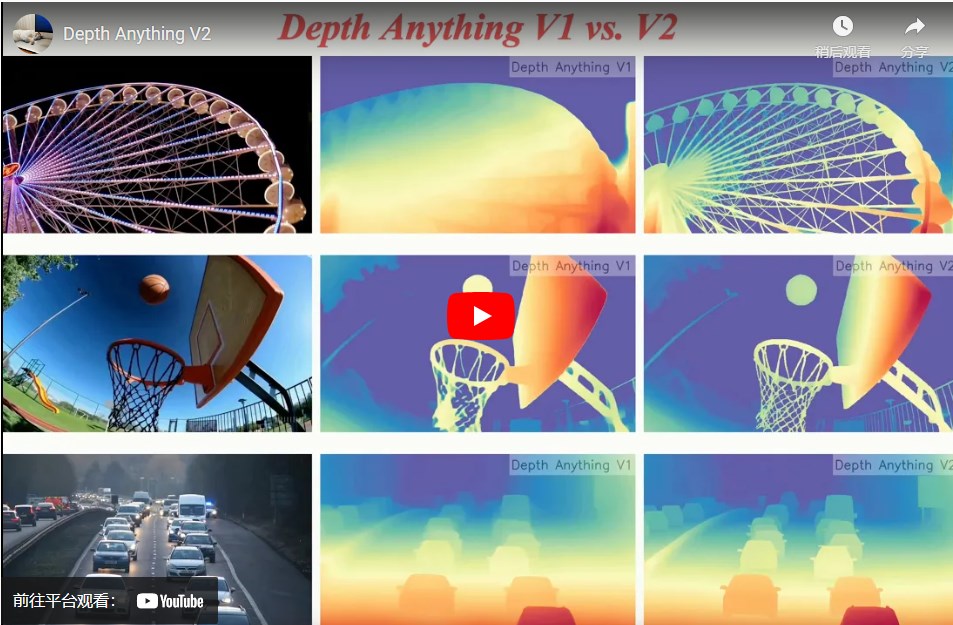

Depth Anything V2 is a monocular depth estimation model: it estimates the depth of a scene from a single image. Its parameter count has grown from 25M in V1, released in early 2024, to 1.3B in the current V2. Its applications span video effects, autonomous driving, 3D modeling, augmented reality, and other fields.

The project has already earned 8.7k stars on GitHub in total: the V1 repository has accumulated 6.4k, and the V2 repository gained 2.3k shortly after its release. Any technical team would be proud of this level of recognition, all the more so when the main contributor is an intern.

Apple's inclusion of Depth Anything V2 in the Core ML model library is a strong endorsement of the model's performance and potential applications. Core ML, Apple's machine learning framework, runs models efficiently on-device on iOS, macOS, and other Apple platforms, so complex AI tasks can be performed even without an internet connection.

The Core ML version of Depth Anything V2 uses the smallest, 25M-parameter variant of the model. Optimized by Hugging Face engineers, it achieves an inference time of 31.1 milliseconds on an iPhone 12 Pro Max. It joins other models in the library, such as FastViT, ResNet50, and YOLOv3, which together cover tasks ranging from natural language processing to image recognition.

Amid the wave of large models, more and more people recognize the value of Scaling Laws. The Depth Anything team chose to build a simple yet powerful foundation model that achieves better results on a single task, believing that applying Scaling Laws to fundamental problems delivers more practical value.

Depth estimation, which infers the distance of objects in a scene from images, is one of the most important tasks in computer vision and is crucial for applications such as autonomous driving, 3D modeling, and augmented reality. Beyond these fields, Depth Anything V2 can also be integrated into video platforms or editing software as middleware, supporting features such as special effects creation and video editing.

The first author of the Depth Anything project is an intern who completed most of the work in less than a year under the guidance of a mentor. The company and team provide an open research environment and full support, encouraging interns to tackle harder and more fundamental problems.

The intern's growth and the success of Depth Anything V2 reflect not only individual effort and talent but also ByteDance's in-depth exploration of visual generation and large models, as well as its investment in cultivating talent.

Project Address: https://top.aibase.com/tool/depth-anything-v2