Anthropic has announced that customers can now fine-tune Claude 3 Haiku, its fastest and most cost-effective model, in Amazon Bedrock. Fine-tuning lets users tailor the model's knowledge and capabilities to their business needs, making it more effective at specific tasks.

Access: https://aws.amazon.com/cn/bedrock/claude/

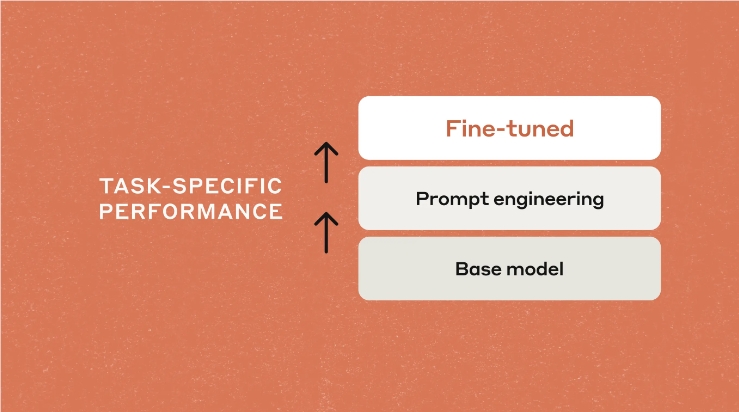

Fine-tuning is a common technique for improving a model's performance by creating a customized version of it. Users first prepare a set of high-quality prompt-completion pairs, where each completion is the ideal output for its prompt. The fine-tuning API, now in preview, uses this data to produce a custom Claude 3 Haiku model. Users can then test and refine the model through the Amazon Bedrock console or API until it meets their performance targets and is ready for deployment.
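To make the idea of prompt-completion pairs concrete, here is a minimal sketch of how such pairs might be written out as a JSON Lines training file. The record layout (a `messages` list with `user` and `assistant` turns plus an optional `system` field) is an assumption made for illustration, as are the helper names and the sample data; the AWS documentation is the authority on the exact schema Bedrock expects.

```python
import json


def to_training_record(prompt, completion, system=None):
    """Convert one prompt-completion pair into a chat-style record.

    The field names used here are an assumed layout, not a confirmed schema.
    """
    if not prompt.strip() or not completion.strip():
        raise ValueError("prompt and completion must both be non-empty")
    record = {
        "messages": [
            {"role": "user", "content": prompt},
            {"role": "assistant", "content": completion},
        ]
    }
    if system:
        record["system"] = system
    return record


def to_jsonl(pairs, system=None):
    """Serialize pairs as JSON Lines: one JSON object per line."""
    return "\n".join(
        json.dumps(to_training_record(p, c, system), ensure_ascii=False)
        for p, c in pairs
    )


# Hypothetical example data for a support-ticket classification task.
pairs = [
    ("Classify this ticket: 'My bill is wrong.'", "billing"),
    ("Classify this ticket: 'The app crashes on login.'", "technical"),
]

jsonl_text = to_jsonl(pairs, system="You are a support ticket classifier.")
print(jsonl_text)
```

Each line of the result is an independent JSON object, so a file like this can be validated or filtered line by line before it is uploaded for training.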

Fine-tuning Claude 3 Haiku brings several benefits. First, it delivers better results on specialized tasks such as classification, interaction with custom APIs, or interpretation of industry-specific data. A fine-tuned Claude 3 Haiku can excel in the domains that matter most to a business, with performance significantly surpassing that of more general models. Fine-tuning can also lower production deployment costs and return results faster, making a fine-tuned Claude 3 Haiku more efficient to run than Sonnet or Opus.

Another advantage is consistent, brand-aligned output formatting, which helps ensure compliance with regulatory requirements and internal protocols. The fine-tuning process also does not require deep technical expertise, making it accessible to companies of all kinds. Customers' proprietary training data stays securely within their AWS environment, and Anthropic's fine-tuning technology preserves the Claude 3 model family's low risk of harmful outputs.

In practice, SK Telecom, one of South Korea's largest telecommunications operators, has improved its support workflows and customer experience by fine-tuning Claude models. Vice President Eric Davis stated that customizing Claude significantly improved customer feedback rates and key performance indicators, and that the fine-tuned model is effective at generating topics, action items, and summaries of customer call logs.

Thomson Reuters, a global content and technology company, has also reported encouraging results. The company aims to provide accurate, fast, and consistent user experiences in fields such as law, tax, accounting, compliance, government, and media. By optimizing Claude around its industry expertise and specific requirements, it expects significant improvements.

Fine-tuning for Claude 3 Haiku is now available in preview in the US West (Oregon) AWS Region. It currently supports text-based fine-tuning with context lengths of up to 32K tokens, and vision capabilities are planned for the future. For more information, see the AWS launch blog and documentation.
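Because training examples are bounded by the 32K-token context length, it can help to screen a dataset for oversized examples before submitting it. The sketch below is only a rough pre-check: it assumes "32K" means 32,000 tokens and estimates token counts with a crude four-characters-per-token heuristic rather than Claude's actual tokenizer, so borderline examples would need a proper count. The function names are hypothetical.

```python
MAX_CONTEXT_TOKENS = 32_000  # assumed reading of the "32K" limit
CHARS_PER_TOKEN = 4          # rough heuristic, not Claude's real tokenizer


def estimate_tokens(text):
    """Estimate token count by ceiling-dividing the character count."""
    return -(-len(text) // CHARS_PER_TOKEN)


def split_by_budget(pairs, max_tokens=MAX_CONTEXT_TOKENS):
    """Separate pairs that fit the estimated budget from those that do not."""
    kept, too_long = [], []
    for prompt, completion in pairs:
        total = estimate_tokens(prompt) + estimate_tokens(completion)
        if total <= max_tokens:
            kept.append((prompt, completion))
        else:
            too_long.append((prompt, completion))
    return kept, too_long


# Hypothetical data: one short example and one far beyond the budget.
examples = [
    ("Summarize this call log: customer asked about roaming.", "Roaming inquiry."),
    ("x" * 200_000, "Too long to fit."),
]

kept, too_long = split_by_budget(examples)
print(f"{len(kept)} example(s) kept, {len(too_long)} flagged as too long")
```

Flagging oversized examples rather than silently truncating them leaves the decision about shortening or dropping each one to whoever curates the dataset.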

### Key Points:

- 🛠️ **Fine-tuning Feature**: Users can fine-tune the model with high-quality prompt-completion pairs to strengthen its performance on specialized tasks.

- ⚡ **Cost-effectiveness**: Claude 3 Haiku is the fastest and most cost-effective model in the Claude 3 family, well suited to specialized tasks.

- 🔒 **Data Security**: Customers' proprietary training data remains within the AWS environment, keeping it secure and the risk low.