Recently, scholars from multiple institutions have drawn attention to an emerging approach known as Physical Neural Networks (PNNs). Unlike the familiar digital algorithms that run on conventional computers, PNNs are a form of intelligent computing carried out by physical systems themselves.

As the name suggests, PNNs exploit the properties of physical systems to perform the computations of a neural network. Although they remain a niche research area, PNNs may be one of the most undervalued opportunities in modern AI.

Potential of PNNs: Large Models, Low Energy Consumption, Edge Computing

Imagine training AI models 1000 times larger than today's while also enabling local, private inference on edge devices such as smartphones or sensors. This may sound like science fiction, but research indicates that it is not impossible.

Researchers are exploring a range of methods for training PNNs at scale, both backpropagation-based and backpropagation-free. Each has its own advantages and disadvantages, and none has yet matched the scale and performance that the backpropagation algorithm achieves in digital deep learning. However, the situation is changing rapidly, and this diverse ecosystem of training techniques offers clues about how PNNs might eventually be put to practical use.
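To make the idea of backpropagation-free training concrete, here is a minimal sketch in Python. It is not a method taken from the paper: it uses simultaneous-perturbation gradient estimation (SPSA) as one illustrative example, and the "physical system" is a hypothetical stand-in (`physical_forward`, a noisy `tanh` layer simulated in NumPy). The point is only that the weights are updated from two noisy loss measurements, without differentiating through the system.

```python
import numpy as np

rng = np.random.default_rng(0)

def physical_forward(weights, x):
    """Hypothetical stand-in for a physical system: only a noisy output is observable."""
    clean = np.tanh(x @ weights)
    return clean + 0.01 * rng.standard_normal(clean.shape)

def loss(weights, x, y):
    """Mean squared error measured on the (noisy) system output."""
    return float(np.mean((physical_forward(weights, x) - y) ** 2))

def spsa_step(weights, x, y, lr=0.05, eps=0.05):
    """One update from two loss measurements; no gradients flow through the system."""
    delta = rng.choice([-1.0, 1.0], size=weights.shape)  # random +/-1 perturbation
    l_plus = loss(weights + eps * delta, x, y)
    l_minus = loss(weights - eps * delta, x, y)
    grad_estimate = (l_plus - l_minus) / (2 * eps) * delta
    return weights - lr * grad_estimate

# Toy regression task (assumed for illustration only).
x = rng.standard_normal((64, 4))
true_w = rng.standard_normal((4, 1))
y = np.tanh(x @ true_w)

w = np.zeros((4, 1))
initial_loss = loss(w, x, y)
for _ in range(1000):
    w = spsa_step(w, x, y)
final_loss = loss(w, x, y)

print(f"loss before: {initial_loss:.4f}, after: {final_loss:.4f}")
```

Each step costs two forward measurements regardless of the number of parameters, but the estimate becomes noisier as the parameter count grows, which is one reason such methods have not yet matched backpropagation at scale.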

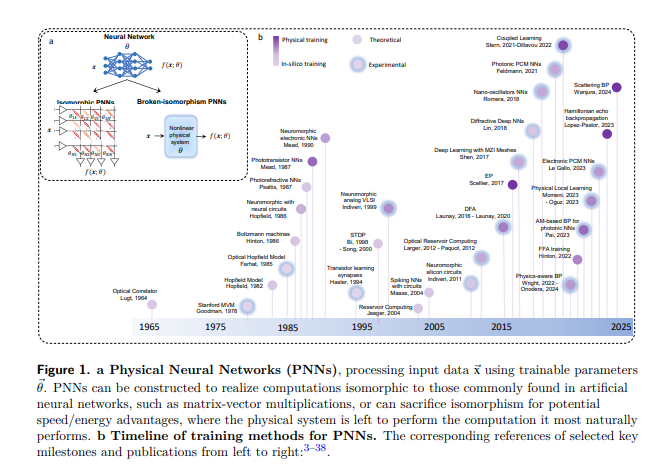

The implementation of PNNs spans multiple fields, including optics, electronics, and neuromorphic computing. A PNN can mirror the structure of a digital neural network, performing operations such as matrix-vector multiplication, or it can give up that structural similarity in exchange for potential speed and energy advantages, letting the physical system carry out whatever computation comes most naturally to it.
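As a rough illustration of what a physically performed matrix-vector multiplication implies, the sketch below compares an exact digital product with a simulated analog one. The noise model is an assumption made purely for illustration: `weight_noise` and `readout_noise` are hypothetical parameters, not figures from the paper or from any specific device.

```python
import numpy as np

rng = np.random.default_rng(0)

def digital_matvec(W, x):
    """Exact matrix-vector product, as computed on digital hardware."""
    return W @ x

def analog_matvec(W, x, weight_noise=0.02, readout_noise=0.01):
    """Hypothetical analog device: weights are realized imperfectly and the readout is noisy."""
    W_realized = W * (1.0 + weight_noise * rng.standard_normal(W.shape))
    y = W_realized @ x
    return y + readout_noise * rng.standard_normal(y.shape)

W = rng.standard_normal((8, 16))
x = rng.standard_normal(16)

exact = digital_matvec(W, x)
approx = analog_matvec(W, x)
relative_error = np.linalg.norm(approx - exact) / np.linalg.norm(exact)

print(f"relative error of the simulated analog product: {relative_error:.4f}")
```

The result is close to the digital one but never identical, so any training method for such hardware has to tolerate this kind of imperfection.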

The Future of PNNs: Outperforming Digital Hardware

The future applications of PNNs could be very broad, ranging from large generative models to classification tasks in smart sensors. All of them will need to be trained, but the constraints on training will vary by application. An ideal training method should be model-independent, fast, energy-efficient, and robust against hardware variations, drift, and noise.

Despite their potential, PNNs also face numerous challenges. How can their stability be ensured during training and inference? How can these physical systems be integrated with existing digital hardware and software infrastructure? These questions still need to be answered.

Paper link: https://arxiv.org/pdf/2406.03372