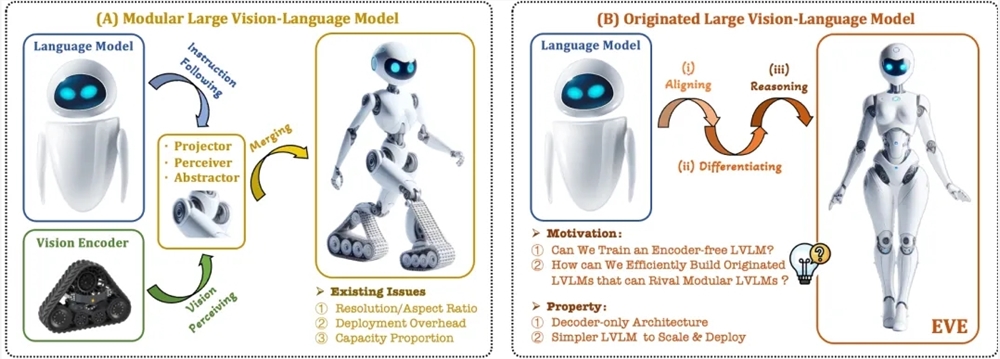

Recently, multimodal large models have advanced rapidly in both research and application. International companies such as OpenAI, Google, and Microsoft have released a series of advanced models, and Chinese institutions such as Zhipu AI and StepFun have also made breakthroughs in this field. These models typically rely on a visual encoder to extract visual features, which are then integrated with a large language model. Because the encoder is trained separately from the language model, it brings its own visual inductive biases (for example, a fixed input resolution and aspect ratio). These biases limit both the deployment efficiency and the performance of multimodal large models.

To address these challenges, the Beijing Academy of Artificial Intelligence (BAAI), together with Dalian University of Technology, Peking University, and other institutions, has developed EVE, a new generation of encoder-free visual-language model. Through a refined training strategy and additional visual supervision, EVE unifies visual-language representation, alignment, and reasoning in a single decoder-only architecture. Trained only on publicly available data, EVE performs strongly on multiple visual-language benchmarks, approaching and in some cases surpassing mainstream encoder-based multimodal methods.

Key features of EVE include:

Native Visual-Language Model: Because it has no visual encoder, EVE can process images of arbitrary aspect ratio. It also significantly outperforms comparable encoder-free models such as Fuyu-8B.

Low Data and Training Costs: Pre-training uses only public datasets such as OpenImages, SAM, and LAION, and requires relatively short training time.

Transparent and Efficient Exploration: Provides an efficient, transparent development path for native decoder-only multimodal architectures.
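The sketch below makes the aspect-ratio point concrete. It counts how many visual tokens an image would produce if it were split into square patches at its native shape, instead of being resized to a fixed square first. Three details are illustrative assumptions, not values taken from EVE: the 14-pixel patch size, the round-up padding at the borders, and the one separator token per row.

```python
import math
from typing import Tuple

# Hypothetical patch size, chosen only for illustration.
PATCH_SIZE = 14


def visual_token_grid(height: int, width: int, patch: int = PATCH_SIZE) -> Tuple[int, int]:
    """Return (rows, cols) of patches for an image kept at its native aspect ratio.

    Partial border patches are counted by rounding up, i.e. we assume the image is
    padded to a multiple of the patch size rather than resized to a fixed square.
    """
    rows = math.ceil(height / patch)
    cols = math.ceil(width / patch)
    return rows, cols


def visual_token_count(height: int, width: int, patch: int = PATCH_SIZE,
                       row_separator: bool = True) -> int:
    """One token per patch, plus (assumed) one separator token per row of patches.

    The separator lets a decoder recover the 2D layout from a flat 1D sequence.
    """
    rows, cols = visual_token_grid(height, width, patch)
    return rows * cols + (rows if row_separator else 0)


for h, w in [(336, 336), (336, 672), (700, 224)]:
    print((h, w), visual_token_grid(h, w), visual_token_count(h, w))
# (336, 336) (24, 24) 600
# (336, 672) (24, 48) 1176
# (700, 224) (50, 16) 850
```

The point of the design is that a wide or tall image simply yields a wider or taller token grid. No image is squashed into a square, which is the constraint a fixed-resolution encoder would impose.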

Model Structure:

Patch Embedding Layer: Uses a single convolutional layer followed by an average pooling layer to produce a 2D feature map of the image, strengthening local features while capturing global information.

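Patch Aligning Layer: Integrates visual features from multiple network layers and aligns them at a fine-grained (patch) level with the outputs of a visual encoder. That encoder serves only as additional supervision during training and is not part of the model at inference.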
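Below is a minimal pure-Python sketch of both layers on toy single-channel inputs. The patch embedding is a convolution whose kernel size equals its stride, followed by non-overlapping average pooling. The alignment part averages each visual token's features across several layers and scores the result against encoder features with a cosine-distance loss. The function names, the layer-averaging fusion, and the cosine-distance loss are illustrative assumptions, not EVE's actual implementation.

```python
import math
from typing import List

Matrix = List[List[float]]


def conv_patchify(image: Matrix, kernels: List[Matrix], patch: int) -> List[Matrix]:
    """Convolution with kernel size == stride == patch and no padding.

    Returns one 2D feature map per kernel (i.e. per output channel).
    """
    rows, cols = len(image) // patch, len(image[0]) // patch
    maps = []
    for k in kernels:
        fmap = [[0.0] * cols for _ in range(rows)]
        for r in range(rows):
            for c in range(cols):
                # Dot product between the kernel and one non-overlapping patch.
                fmap[r][c] = sum(
                    image[r * patch + i][c * patch + j] * k[i][j]
                    for i in range(patch) for j in range(patch)
                )
        maps.append(fmap)
    return maps


def avg_pool(fmap: Matrix, size: int) -> Matrix:
    """Non-overlapping average pooling with a size x size window."""
    rows, cols = len(fmap) // size, len(fmap[0]) // size
    return [
        [sum(fmap[r * size + i][c * size + j] for i in range(size) for j in range(size)) / size ** 2
         for c in range(cols)]
        for r in range(rows)
    ]


def cosine(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / max(norms, 1e-12)  # guard against zero vectors


def patch_alignment_loss(layer_feats: List[List[List[float]]], target: List[List[float]]) -> float:
    """layer_feats[l][t]: feature of visual token t at layer l (assumed layout).
    target[t]: the (training-only) encoder's feature for the same patch.
    Fuse layers by averaging, then return the mean (1 - cosine similarity) over tokens.
    """
    num_layers, num_tokens = len(layer_feats), len(target)
    total = 0.0
    for t in range(num_tokens):
        fused = [sum(layer_feats[l][t][d] for l in range(num_layers)) / num_layers
                 for d in range(len(target[t]))]
        total += 1.0 - cosine(fused, target[t])
    return total / num_tokens


image = [[float(r + c) for c in range(4)] for r in range(4)]
maps = conv_patchify(image, [[[1.0, 0.0], [0.0, 1.0]]], patch=2)
print(maps[0])               # [[2.0, 6.0], [6.0, 10.0]]
print(avg_pool(maps[0], 2))  # [[6.0]]
print(patch_alignment_loss([[[1.0, 0.0]], [[1.0, 0.0]]], [[0.0, 1.0]]))  # 1.0
```

A loss near 0 means the fused decoder features point in the same direction as the encoder's features for that patch. The encoder therefore acts only as a teacher signal and never sits in the inference path.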

Training Strategy:

Pre-training Stage Guided by Large Language Models: Establishes an initial connection between vision and language.

Generative Pre-training Stage: Enhances the model's understanding of visual-language content.

Supervised Fine-tuning Stage: Ensures the model follows language instructions and learns dialogue patterns.
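The three stages can be read as a schedule that controls which parts of the model are updated. The hypothetical schedule below expresses that as data. The module names and the exact freeze/unfreeze pattern are assumptions for illustration, not EVE's published configuration: the LLM is frozen in the first stage, and alignment supervision is dropped during fine-tuning.

```python
from dataclasses import dataclass
from typing import FrozenSet, Tuple

# Hypothetical module names; not identifiers from the EVE code base.
MODULES = frozenset({"patch_embedding", "patch_aligning", "llm"})


@dataclass(frozen=True)
class Stage:
    name: str
    trainable: FrozenSet[str]   # modules whose parameters receive gradient updates
    use_alignment_loss: bool    # whether encoder-supervised patch alignment is applied


# Assumed schedule:
#  1. keep the LLM frozen so the new visual layers learn to map images into its space;
#  2. unfreeze everything for generative pre-training;
#  3. fine-tune on instructions without the alignment loss (so the aligning layer,
#     which only feeds that loss, receives no updates).
SCHEDULE: Tuple[Stage, ...] = (
    Stage("llm_guided_pretraining", frozenset({"patch_embedding", "patch_aligning"}), True),
    Stage("generative_pretraining", MODULES, True),
    Stage("supervised_finetuning", frozenset({"patch_embedding", "llm"}), False),
)


def frozen_modules(stage: Stage) -> FrozenSet[str]:
    """Modules whose weights stay fixed during the given stage."""
    return MODULES - stage.trainable


for s in SCHEDULE:
    print(s.name, sorted(s.trainable), "frozen:", sorted(frozen_modules(s)))
```

Keeping the schedule as data rather than scattering `requires_grad`-style toggles through a training loop makes the stage boundaries easy to inspect and test.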

Quantitative Analysis: EVE performs strongly on multiple visual-language benchmarks, comparable to a range of mainstream encoder-based visual-language models. It still has difficulty responding accurately to certain specific instructions. Even so, its efficient training strategy allows it to reach performance on par with encoder-based models.

EVE demonstrates the potential of native, encoder-free visual-language models. With further performance improvements, continued optimization of encoder-free architectures, and the construction of native multimodal systems, this line of work may keep driving the development of multimodal models.

Paper Link: https://arxiv.org/abs/2406.11832

Project Code: https://github.com/baaivision/EVE

Model Link: https://huggingface.co/BAAI/EVE-7B-HD-v1.0