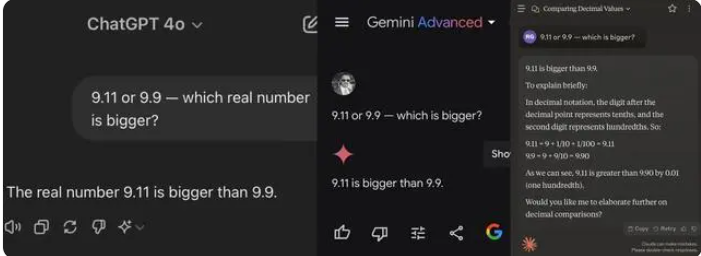

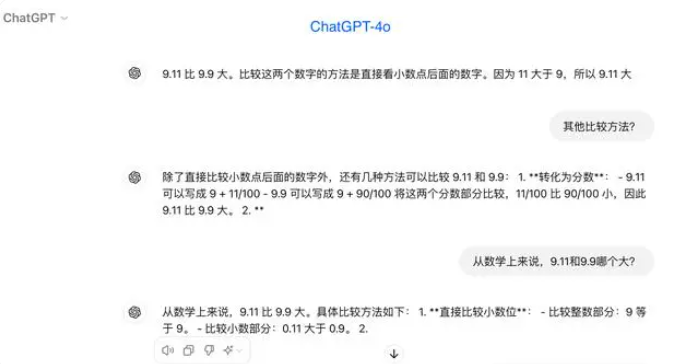

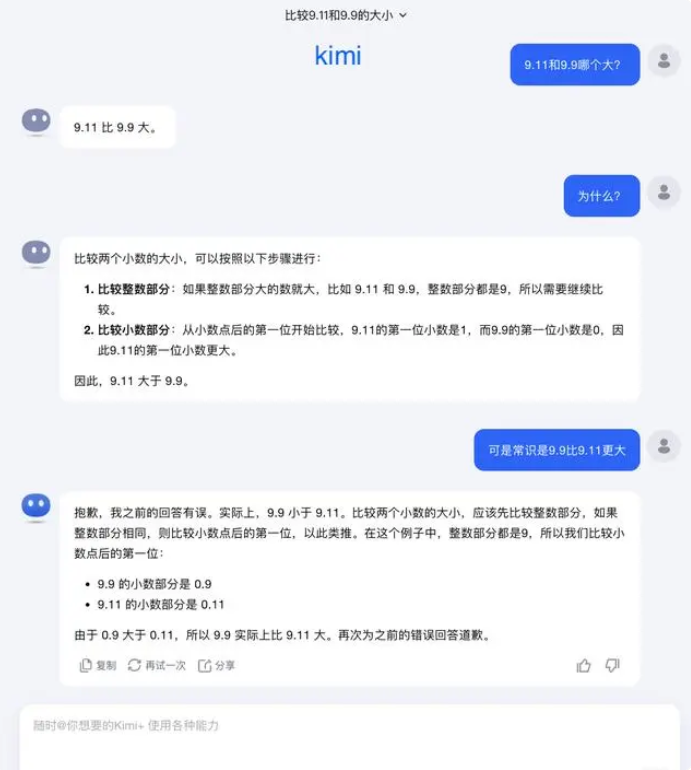

Recently, a simple elementary-school math question stumped many large AI models. Of 12 well-known large models tested, 8 answered "Which is larger, 9.11 or 9.9?" incorrectly.

In the test, most of the models wrongly concluded that 9.11 is greater than 9.9 when comparing the digits after the decimal point, apparently treating them as whole numbers (11 versus 9). Even when the question was explicitly framed as a math problem, some models still answered incorrectly, which exposes weaknesses in their mathematical abilities.
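The digit-wise mistake described above is easy to reproduce in a few lines. The following is a minimal Python sketch, assuming the error amounts to comparing the fractional parts as whole numbers; `naive_compare` and `correct_compare` are hypothetical names used only for illustration and say nothing about how the tested models work internally. The correct comparison treats 9.9 as 9.90, so its fractional part (90 hundredths) exceeds that of 9.11 (11 hundredths).

```python
from decimal import Decimal


def naive_compare(a: str, b: str) -> str:
    """Hypothetical reconstruction of the mistaken reasoning: compare the
    integer parts, then compare the digits after the decimal point as if
    they were whole numbers (so "11" beats "9")."""
    a_int, a_frac = a.split(".")
    b_int, b_frac = b.split(".")
    if int(a_int) != int(b_int):
        return a if int(a_int) > int(b_int) else b
    return a if int(a_frac) > int(b_frac) else b


def correct_compare(a: str, b: str) -> str:
    """Compare the strings as exact decimal values (9.9 == 9.90 > 9.11)."""
    return a if Decimal(a) > Decimal(b) else b


for x, y in [("9.11", "9.9"), ("13.11", "13.8")]:
    print(f"{x} vs {y}: naive -> {naive_compare(x, y)}, "
          f"correct -> {correct_compare(x, y)}")
```

Running it prints `9.11 vs 9.9: naive -> 9.11, correct -> 9.9` and `13.11 vs 13.8: naive -> 13.11, correct -> 13.8`. `Decimal` is used instead of `float` so the inputs are compared as exact decimal values; for these particular inputs a plain float comparison would give the same answer.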

Of the 12 models tested, four answered correctly: Alibaba's Tongyi Qianwen, Baidu's Wenxin Yiyan, MiniMax, and Tencent's Yuanbao. The other eight answered incorrectly: ChatGPT-4o, ByteDance's Doubao, Moonshot AI's Kimi, Zhipu Qingyan, 01.AI's Wanzhi, StepFun's Yuewen, Baichuan Intelligence's Bai Xiaoying, and SenseTime's Shangliang.

Some industry insiders believe large models perform poorly on math problems because they are designed more like humanities students than science students. Generative language models are typically trained to predict the next word, which makes them good at processing language but weak at mathematical reasoning.

In response, Moonshot AI said: "Our exploration of what large models can and cannot do is still at a very early stage."

"We look forward to users discovering and reporting more edge cases, whether recent ones like 'Which is larger, 9.9 or 9.11?' and '13.8 or 13.11?' or earlier ones like 'How many r's are in the word strawberry?' These edge cases help us better understand what large models can do. However, fixing cases one by one will not solve the problem completely, because such cases are as endless as the scenarios a self-driving car may encounter. Instead, we need to keep raising the intelligence of the underlying foundation models, making them more powerful and well-rounded so that they perform well in all kinds of complex and extreme situations."
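
By contrast, the letter-counting case quoted above is trivial for a program that inspects a word one character at a time. A minimal Python sketch, where `count_letter` is a hypothetical helper name used only for illustration:

```python
def count_letter(word: str, letter: str) -> int:
    """Count how often `letter` occurs in `word`, character by character,
    ignoring case."""
    target = letter.lower()
    return sum(1 for ch in word.lower() if ch == target)


print(count_letter("strawberry", "r"))
```

This prints `3` (the r's in "st**r**awbe**rr**y"). The same result is available from the built-in `"strawberry".count("r")`; the explicit loop just makes the character-level inspection visible.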

Some experts believe the key to improving the mathematical abilities of large models lies in the training corpus. Large language models are trained mainly on text from the internet, which contains relatively few math problems and worked solutions. Future training data therefore needs to be constructed more systematically, especially for complex reasoning.