In the field of artificial intelligence, large language models (LLMs) such as GPT-3 and Llama-2 have made significant strides and can understand and generate human language with high accuracy. However, their vast number of parameters means that both training and deployment demand significant computational resources, which is especially challenging in resource-constrained environments.

Paper Access: https://arxiv.org/html/2406.10260v1

Traditionally, to balance efficiency and accuracy under different computational constraints, researchers have had to train multiple versions of a model. For instance, the Llama-2 model family includes variants with 7 billion, 13 billion, and 70 billion parameters. However, training each variant separately requires substantial data and computational resources, which is inefficient.

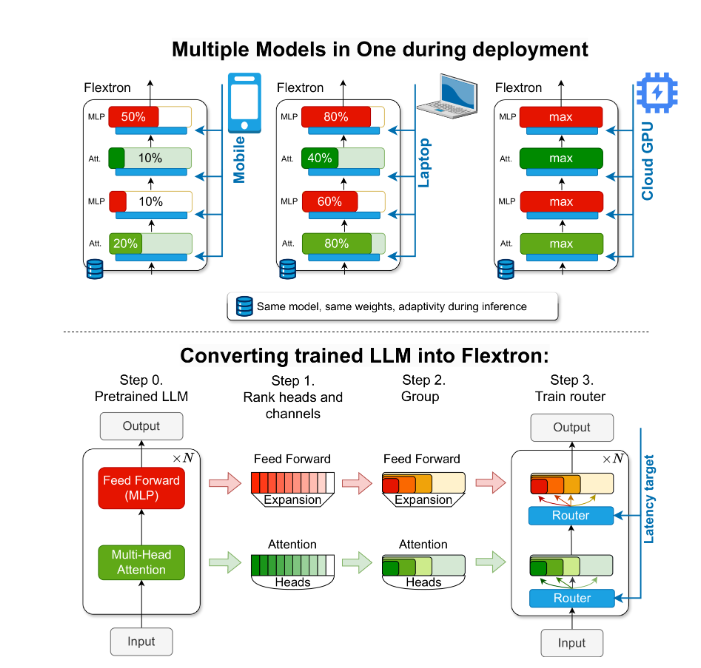

To address this issue, researchers from NVIDIA and the University of Texas at Austin introduced the Flextron framework. Flextron is a flexible network architecture and post-training model optimization framework that supports adaptive model deployment without requiring additional fine-tuning, addressing the inefficiency of training each model size separately.

Flextron converts pre-trained LLMs into flexible models using a sample-efficient training method and associated routing algorithms. The architecture has a nested elastic structure, in which smaller sub-networks are contained within larger ones, so the model can adapt during inference to user-specified latency and accuracy targets. As a result, a single pre-trained model can serve many deployment scenarios, greatly reducing the need to train and maintain multiple model variants.
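To make the "nested elastic" idea more concrete, here is a minimal pure-Python sketch built on a few assumptions. A smaller MLP sub-network is taken to be the first `k` hidden neurons of the full layer, so every width shares one set of weights. Cost is approximated by the number of active parameters. The names `elastic_mlp` and `pick_width`, the ReLU activation, and this cost model are hypothetical illustrations, not Flextron's code. The paper's learned routers and real latency targets are replaced here by a simple budget lookup.

```python
# Hypothetical sketch of a nested elastic MLP; names and cost model are
# illustrative assumptions, not Flextron's actual implementation.
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def relu(v: Vector) -> Vector:
    """Element-wise ReLU (an illustrative choice of activation)."""
    return [max(0.0, x) for x in v]


def elastic_mlp(x: Vector, w_in: Matrix, w_out: Matrix, k: int) -> Vector:
    """Run the MLP using only the first k hidden neurons.

    w_in has d_ff rows of length d_model; w_out has d_model rows of length d_ff.
    Every width k reuses these same matrices, so the sub-models are nested.
    """
    # Activations for hidden neurons 0..k-1 only; the remaining neurons are skipped.
    hidden = relu([sum(w * xi for w, xi in zip(w_in[j], x)) for j in range(k)])
    # Project back using only the matching first k columns of w_out.
    return [sum(row[j] * hidden[j] for j in range(k)) for row in w_out]


def pick_width(widths: List[int], d_model: int, budget_params: int) -> int:
    """Return the largest width whose active MLP weights fit the budget.

    Hypothetical cost model: k hidden neurons use 2 * d_model * k weights
    (biases ignored), a rough stand-in for latency.
    """
    feasible = [k for k in widths if 2 * d_model * k <= budget_params]
    if not feasible:
        raise ValueError("no width fits the budget")
    return max(feasible)


if __name__ == "__main__":
    # Full layer: d_model = 2, d_ff = 4.
    w_in = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 1.0]]
    w_out = [[1.0, 1.0, 1.0, 1.0], [1.0, -1.0, 0.5, 2.0]]
    x = [1.0, 2.0]
    k = pick_width([1, 2, 4], d_model=2, budget_params=8)
    print(k, elastic_mlp(x, w_in, w_out, k))  # 2 [3.0, -1.0]
    print(elastic_mlp(x, w_in, w_out, 4))     # [7.0, 2.5]
```

Because a smaller width is just a prefix of the full weight matrices, switching between "model sizes" at inference time requires no extra copies of the weights.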

Performance evaluations show that Flextron outperforms multiple end-to-end trained models and other state-of-the-art elastic networks in both efficiency and accuracy. For example, Flextron performed strongly on benchmarks including ARC-easy, LAMBADA, PIQA, WinoGrande, MMLU, and HellaSwag, while its training used only 7.63% of the tokens consumed by the original pre-training, saving a considerable amount of compute and time.

The Flextron framework also includes elastic multilayer perceptron (MLP) and elastic multi-head attention (MHA) layers, which further enhance its adaptability. The elastic MHA layers make efficient use of available memory and processing power by selecting a subset of attention heads based on the input, which makes them particularly suitable for resource-limited scenarios.
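Choosing attention heads per input can be illustrated with the toy, pure-Python sketch below. It makes two assumptions for illustration only. First, heads are nested, so a smaller configuration keeps the first `h` heads. Second, a hypothetical `toy_router` picks the head count from the input's mean magnitude. Flextron's actual routers are trained, and the names `elastic_mha` and `toy_router` do not come from the paper. Only the active heads are computed, and the output projection uses just the columns that belong to those heads.

```python
# Hypothetical sketch of elastic multi-head attention with an input-dependent
# head count; names, router rule, and shapes are illustrative assumptions.
import math
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply matrix m (rows x cols) by vector v (length cols)."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def softmax(xs: Vector) -> Vector:
    """Numerically stable softmax."""
    top = max(xs)
    exps = [math.exp(x - top) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def toy_router(tokens: List[Vector], max_heads: int, threshold: float) -> int:
    """Hypothetical rule: all heads for high-magnitude inputs, half otherwise."""
    values = [abs(v) for t in tokens for v in t]
    mean_abs = sum(values) / len(values)
    return max_heads if mean_abs > threshold else max(1, max_heads // 2)


def elastic_mha(tokens: List[Vector], wq: List[Matrix], wk: List[Matrix],
                wv: List[Matrix], wo: Matrix, num_heads: int) -> List[Vector]:
    """Self-attention that computes only the first `num_heads` heads.

    wq/wk/wv hold one (head_dim x d_model) matrix per head; wo is
    (d_model x max_heads * head_dim). Skipped heads are never computed.
    """
    concat: List[Vector] = [[] for _ in tokens]
    for h in range(num_heads):
        q = [matvec(wq[h], t) for t in tokens]
        k = [matvec(wk[h], t) for t in tokens]
        v = [matvec(wv[h], t) for t in tokens]
        scale = 1.0 / math.sqrt(len(q[0]))
        for i, qi in enumerate(q):
            # Scaled dot-product attention of token i over all tokens.
            weights = softmax([scale * sum(a * b for a, b in zip(qi, kj)) for kj in k])
            head_out = [sum(w * vj[d] for w, vj in zip(weights, v))
                        for d in range(len(v[0]))]
            concat[i].extend(head_out)
    # Output projection restricted to the columns of the active heads.
    width = len(concat[0])
    return [[sum(row[j] * c[j] for j in range(width)) for row in wo] for c in concat]


if __name__ == "__main__":
    # d_model = 2, head_dim = 1, up to 2 heads; a single-token input.
    wq = [[[1.0, 0.0]], [[0.0, 1.0]]]
    wk = [[[1.0, 0.0]], [[0.0, 1.0]]]
    wv = [[[1.0, 0.0]], [[0.0, 1.0]]]
    wo = [[1.0, 0.0], [0.0, 1.0]]
    tokens = [[2.0, 3.0]]
    heads = toy_router(tokens, max_heads=2, threshold=3.0)  # mean |x| = 2.5
    print(heads, elastic_mha(tokens, wq, wk, wv, wo, heads))  # 1 [[2.0, 0.0]]
```

Since dropped heads are never evaluated, attention compute shrinks roughly in proportion to the number of heads skipped. In a real system, a trained router would replace the hand-written threshold rule used here.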

Key Points:

🌐 The Flextron framework supports flexible AI model deployment without the need for additional fine-tuning.

🚀 It enhances model efficiency and accuracy through sample-efficient training and advanced routing algorithms.

💡 The elastic multi-head attention layer optimizes resource utilization, making it especially suitable for environments with limited computational resources.

This report aims to introduce the significance and novelty of the Flextron framework in a way that is accessible to high school students.