Large Language Models (LLMs) are known for their strong natural language processing capabilities. Deploying them in practical applications is difficult, however, because inference carries a high computational cost and a large memory footprint. Researchers have therefore been exploring ways to make LLMs more efficient, and one recent method, Q-Sparse, has attracted considerable attention.

Q-Sparse is a simple yet effective method that achieves fully sparse activation in LLMs by applying top-K sparsification to the activations and using a straight-through estimator during training. Because only the selected activations take part in each matrix multiplication, inference becomes significantly more efficient. Key research findings include:

Q-Sparse offers higher inference efficiency while maintaining results comparable to baseline LLMs.

A novel inference-optimal scaling law for sparse activation LLMs has been proposed.

Q-Sparse is effective across various settings, including training from scratch, continued training of existing LLMs, and fine-tuning.

Q-Sparse is applicable to both full-precision and 1-bit LLMs (e.g., BitNet b1.58).

Advantages of Sparse Activation

Sparsity enhances the efficiency of LLMs in two ways: firstly, sparsity can reduce the computational load of matrix multiplication as zero elements are not computed; secondly, sparsity can decrease the amount of input/output (I/O) transfer, which is a major bottleneck during the inference phase of LLMs.
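The two savings described above can be illustrated with a toy matrix-vector product. This is a minimal sketch in plain Python, not the kernel an actual inference engine would use; the function names `dense_matvec` and `sparse_matvec` are hypothetical, and the count of weight columns read is used here only as a rough stand-in for I/O volume.

```python
def dense_matvec(W, x):
    """Compute y = W @ x using every column; return (y, multiply-add count)."""
    rows, cols = len(W), len(x)
    y = [sum(W[i][j] * x[j] for j in range(cols)) for i in range(rows)]
    return y, rows * cols


def sparse_matvec(W, x):
    """Same product, but skip every column whose activation is exactly zero.

    Returns (y, multiply-add count, number of weight columns read).
    """
    rows = len(W)
    active = [j for j, v in enumerate(x) if v != 0.0]
    y = [sum(W[i][j] * x[j] for j in active) for i in range(rows)]
    return y, rows * len(active), len(active)


W = [[1.0, 2.0, 3.0, 4.0],
     [5.0, 6.0, 7.0, 8.0]]
x = [0.0, 2.0, 0.0, -1.0]  # half of the activations are zero

y_dense, dense_macs = dense_matvec(W, x)
y_sparse, sparse_macs, cols_read = sparse_matvec(W, x)
print(y_dense, dense_macs)             # same result, 8 multiply-adds
print(y_sparse, sparse_macs, cols_read)  # same result, 4 multiply-adds, 2 columns
```

With half of the activations zeroed, the sparse version performs half the multiply-adds and touches only half of the weight columns, while producing the same output.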

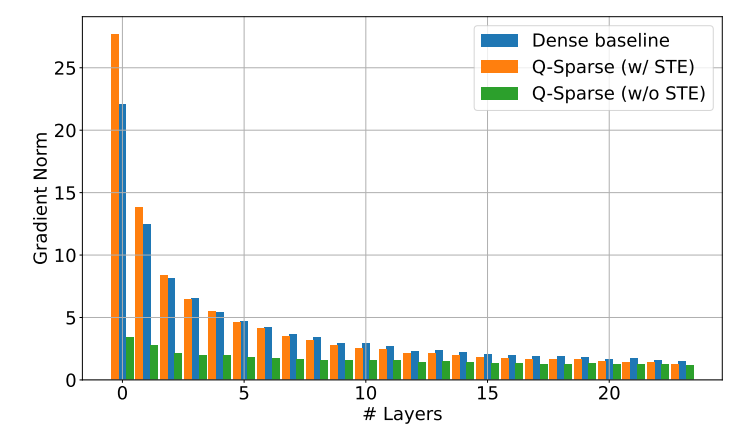

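Q-Sparse achieves full sparsity in activations by applying a top-K sparsification function to the input of each linear projection, keeping only the K entries with the largest magnitude and zeroing the rest. Because this selection blocks the gradient for the zeroed entries, a straight-through estimator is used in backpropagation to compute the gradients of the activations. Additionally, a squared ReLU function is introduced in the feed-forward layers to further increase the sparsity of activations.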
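The three ingredients in this paragraph can be sketched in a few lines of plain Python. This is an illustrative sketch under stated assumptions, not the authors' implementation: all function names are hypothetical, the backward pass is written by hand instead of using an autograd framework, and any rescaling or quantization the full method may apply is left out.

```python
def topk_mask(x, k):
    """Return a 0/1 mask that keeps the k entries of x with the largest magnitude."""
    keep = sorted(range(len(x)), key=lambda i: abs(x[i]), reverse=True)[:k]
    mask = [0.0] * len(x)
    for i in keep:
        mask[i] = 1.0
    return mask


def sparsify_forward(x, k):
    """Forward pass: zero everything outside the top-k entries."""
    mask = topk_mask(x, k)
    return [v * m for v, m in zip(x, mask)], mask


def sparsify_backward_ste(grad_out):
    """Straight-through estimator: treat sparsification as the identity,
    so the incoming gradient is passed to every input unchanged."""
    return list(grad_out)


def sparsify_backward_masked(grad_out, mask):
    """For comparison only: the gradient without the estimator,
    which is zero for every dropped activation."""
    return [g * m for g, m in zip(grad_out, mask)]


def squared_relu(x):
    """Squared ReLU: negative inputs become exactly zero, positive inputs are squared."""
    return [max(v, 0.0) ** 2 for v in x]


x = [0.5, -2.0, 0.1, 1.5]
y, mask = sparsify_forward(x, k=2)
grad_out = [1.0, 1.0, 1.0, 1.0]
grad_ste = sparsify_backward_ste(grad_out)
grad_masked = sparsify_backward_masked(grad_out, mask)
print(y)            # only the two largest-magnitude entries survive
print(grad_ste)     # every input still receives a gradient
print(grad_masked)  # dropped inputs would receive no gradient
```

The comparison between `grad_ste` and `grad_masked` shows why the estimator matters: without it, activations that were dropped in the forward pass would get no gradient signal at all.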

Experimental Validation

Researchers conducted a series of scaling experiments to study the scaling laws of sparse activation LLMs and made some intriguing discoveries:

The performance of sparse activation models scales with both the model size and the sparsity ratio.

Given a fixed sparsity ratio S, the performance of sparse activation models scales according to a power law with the model size N.

Given a fixed model size N, the performance of sparse activation models scales according to an exponential law with the sparsity ratio S.
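One functional form that matches the two observations above can be written as follows. It is a sketch, not a quotation of the fitted law: the symbols $E$, $B$, $C$, $\alpha$, and $\beta$ are assumed placeholder constants that would have to be fitted to experimental data, and $L$ denotes the loss, so lower is better.

```latex
L(N, S) = E + \frac{A(S)}{N^{\alpha}},
\qquad
A(S) = B + C \exp\!\left(\frac{\beta}{1 - S}\right)
```

For a fixed $S$, the term $A(S)$ is a constant and the loss follows a power law in $N$. For a fixed $N$, the loss depends on $S$ only through the exponential term, which grows as $S$ approaches 1.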

Q-Sparse can be used not only for training from scratch but also for the continued training and fine-tuning of existing LLMs. In these two settings, researchers use the same architecture and training process as in training from scratch. The only differences are that the model is initialized with pre-trained weights and that the sparsification function is enabled for the continued training.

Researchers are exploring the combination of Q-Sparse with 1-bit LLMs (such as BitNet b1.58) and Mixture of Experts (MoE) to further enhance the efficiency of LLMs. Additionally, they are working to make Q-Sparse compatible with batch mode, which will provide more flexibility for the training and inference of LLMs.