A research team from Microsoft Research and Beihang University has introduced E5-V, a framework that uses multi-modal large language models (MLLMs) to provide a more efficient approach to multi-modal embeddings. MLLMs have become a focus of AI research because they can process textual and visual information together, which helps them capture complex relationships in the data. Representing different types of input in a single, comparable form, however, remains a significant challenge in multi-modal learning.

Project repository: https://github.com/kongds/E5-V/

Earlier models such as CLIP align visual and language representations through contrastive learning, but they still use separate encoders for images and text, so inputs that combine both modalities are poorly integrated. These models also require large amounts of paired image-text training data, which is expensive to collect, and they still fall short on tasks that demand complex language understanding or vision-language reasoning.
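To make the dual-encoder setup concrete, here is a minimal sketch of a CLIP-style symmetric contrastive loss in plain Python. It assumes `image_embs` and `text_embs` are the outputs of two separate encoders (not shown), with the i-th image paired with the i-th text; the function names, toy vectors, and the 0.07 temperature are illustrative choices, not CLIP's actual training code.

```python
import math
from typing import List, Sequence

Vector = List[float]


def normalize(v: Sequence[float]) -> Vector:
    """Scale a vector to unit L2 norm so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cross_entropy(logits: Sequence[float], target: int) -> float:
    """Softmax cross-entropy for one row, using the log-sum-exp trick for stability."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[target]


def clip_style_loss(image_embs: Sequence[Vector],
                    text_embs: Sequence[Vector],
                    temperature: float = 0.07) -> float:
    """Symmetric contrastive loss: image i should match text i, and every other
    item in the batch serves as a negative, in both directions."""
    imgs = [normalize(v) for v in image_embs]  # from the (assumed) image encoder
    txts = [normalize(v) for v in text_embs]   # from the (assumed) text encoder
    n = len(imgs)
    # logits[i][j] = cosine(image_i, text_j) / temperature
    logits = [[sum(a * b for a, b in zip(imgs[i], txts[j])) / temperature
               for j in range(n)] for i in range(n)]
    image_to_text = sum(cross_entropy(logits[i], i) for i in range(n)) / n
    text_to_image = sum(cross_entropy([logits[i][j] for i in range(n)], j)
                        for j in range(n)) / n
    return (image_to_text + text_to_image) / 2
```

Correctly aligned pairs yield a much lower loss than shuffled ones, for example `clip_style_loss([[1, 0], [0, 1]], [[1, 0], [0, 1]])` versus the same call with the text list reversed. Because each modality has its own encoder, a single input that mixes an image with text has no natural place in this setup.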

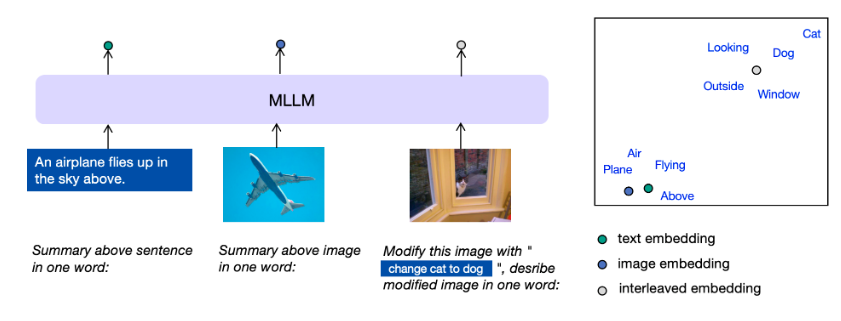

The key innovation of E5-V is single-modal training: the model is trained only on text pairs, which significantly reduces training costs and removes the need to collect multi-modal data. To narrow the modality gap, E5-V uses prompts that ask the model to represent each input, whether an image or a sentence, as a single word, so that both modalities are mapped into the same embedding space. This lets the model handle more complex tasks such as composed image retrieval more accurately.
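The sketch below illustrates the idea under stated assumptions: the prompt strings are modeled on the "summarize in one word" prompts described for E5-V but may not match the repository exactly, `toy_encoder` is a hypothetical stand-in for the MLLM (a running bag of tokens, so the last position depends on the whole prompt, as in a causal model), and the 0.05 temperature is illustrative. The embedding is taken from the final position's hidden state, and training uses text pairs only, with one shared encoder and prompt on both sides.

```python
import math
from typing import Callable, List, Optional, Sequence, Tuple

Vector = List[float]

# Assumed prompt templates modeled on those described for E5-V; the real model
# wraps them in its chat template, and the exact strings may differ.
TEXT_PROMPT = "{}\nSummary above sentence in one word:"
IMAGE_PROMPT = "<image>\nSummary above image in one word:"


def build_prompt(sentence: Optional[str] = None) -> str:
    """Wrap a sentence in the text prompt; with no sentence, return the image prompt."""
    return IMAGE_PROMPT if sentence is None else TEXT_PROMPT.format(sentence)


def last_token_embedding(hidden_states: Sequence[Vector]) -> Vector:
    """Use the hidden state at the final position (the 'one word' slot) as the embedding."""
    return list(hidden_states[-1])


def toy_encoder(prompt: str, dim: int = 16) -> List[Vector]:
    """Hypothetical MLLM stand-in: state t is a bag of hashed tokens 0..t."""
    running = [0.0] * dim
    states = []
    for token in prompt.split():
        running[sum(map(ord, token)) % dim] += 1.0
        states.append(list(running))
    return states


def normalize(v: Sequence[float]) -> Vector:
    """Scale a vector to unit L2 norm."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def text_pair_loss(encode: Callable[[str], List[Vector]],
                   pairs: Sequence[Tuple[str, str]],
                   temperature: float = 0.05) -> float:
    """In-batch contrastive loss on text pairs only: each anchor should be closest
    to its own positive, while the other positives in the batch act as negatives."""
    anchors = [normalize(last_token_embedding(encode(build_prompt(a)))) for a, _ in pairs]
    positives = [normalize(last_token_embedding(encode(build_prompt(p)))) for _, p in pairs]
    total = 0.0
    for i, anchor in enumerate(anchors):
        logits = [sum(x * y for x, y in zip(anchor, pos)) / temperature for pos in positives]
        m = max(logits)
        log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
        total += log_sum - logits[i]  # cross-entropy with target index i
    return total / len(pairs)
```

Because the same prompt-and-pool recipe is applied to an image by feeding `IMAGE_PROMPT` to the real multi-modal model, an encoder trained this way on text alone can, in principle, embed images into the same space without any image-text training pairs.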

According to the research team's experiments, E5-V performs strongly on several tasks, including text-image retrieval and composed image retrieval. In zero-shot image retrieval it outperforms the strong CLIP ViT-L baseline, improving Recall@1 by 12.2% on Flickr30K and by 15.0% on COCO.
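Recall@K, the metric behind these figures, is the fraction of queries whose correct match appears among the K highest-scoring candidates. A minimal sketch, assuming a precomputed query-by-candidate similarity matrix and one correct candidate index per query (the function name and toy scores are illustrative):

```python
from typing import Sequence


def recall_at_k(similarity: Sequence[Sequence[float]],
                targets: Sequence[int],
                k: int = 1) -> float:
    """Fraction of queries whose correct candidate ranks in the top-k by similarity."""
    hits = 0
    for scores, target in zip(similarity, targets):
        # Rank candidate indices from most to least similar.
        ranked = sorted(range(len(scores)), key=lambda j: scores[j], reverse=True)
        if target in ranked[:k]:
            hits += 1
    return hits / len(targets)


sim = [[0.9, 0.1, 0.3],
       [0.2, 0.4, 0.8],
       [0.5, 0.6, 0.1]]
print(recall_at_k(sim, [0, 1, 1], k=1))  # 2 of 3 queries hit at rank 1
print(recall_at_k(sim, [0, 1, 1], k=2))  # all 3 hit within the top 2
```

The second query misses at K=1 because its correct candidate ranks second, which is why Recall@1 is the strictest of these scores.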

In composed image retrieval, E5-V also surpasses the current state-of-the-art method, iSEARLE-XL, with improvements of 8.50% and 10.07% on the CIRR dataset.

E5-V represents a significant advance in multi-modal learning. By combining single-modal training with a prompt-based representation method, it addresses key limitations of earlier approaches such as CLIP and offers a more efficient and effective way to build multi-modal embeddings.

Key Points:

🌟 The E5-V framework simplifies multi-modal learning through single-modal training, reducing costs.

📈 E5-V demonstrates superior performance over existing top models in multiple tasks.

🔑 This framework sets a new standard for the development of future multi-modal models, with broad application potential.