Following Meta's release yesterday of Llama 3.1, which it bills as the most powerful open-source model, Mistral AI introduced its new flagship model, Mistral Large 2, in the early hours of today. The new model has 123 billion parameters and a 128k-token context window, matching Llama 3.1 on context length.

Details of the Mistral Large 2 Model

Mistral Large 2 has a 128k context window and supports dozens of languages, including French, German, Spanish, Italian, Portuguese, Arabic, Hindi, Russian, Chinese, Japanese, and Korean, along with more than 80 programming languages such as Python, Java, C, C++, JavaScript, and Bash.

Mistral Large 2 is designed for single-node inference with long-context applications in mind: at 123 billion parameters, it is small enough to run with high throughput on a single node. It is released under the Mistral Research License, which permits research and non-commercial use; commercial users need to contact Mistral AI for a commercial license.
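To see why a model of this size can be served from one machine, a back-of-the-envelope estimate of weight memory helps. The sketch below is illustrative only: it assumes 2 bytes per parameter for bf16 and 1 byte for fp8, and a hypothetical node of eight 80 GB GPUs. It counts the weights alone and ignores the KV cache and activations, which grow with context length and add substantially on top.

```python
# Rough weight-memory estimate for a 123B-parameter model.
# Assumptions (not from Mistral AI): bf16 = 2 bytes/param, fp8 = 1 byte/param,
# and a hypothetical single node with 8 GPUs x 80 GB each.
PARAMS = 123e9
BYTES_PER_PARAM = {"bf16": 2, "fp8": 1}
NODE_MEMORY_GB = 8 * 80  # hypothetical node capacity in GB


def weight_memory_gb(params: float, dtype: str) -> float:
    """Memory needed just to hold the weights, in decimal gigabytes."""
    return params * BYTES_PER_PARAM[dtype] / 1e9


for dtype in BYTES_PER_PARAM:
    gb = weight_memory_gb(PARAMS, dtype)
    # Weights only: KV cache and activations are NOT included here.
    print(f"{dtype}: {gb:.0f} GB of weights, fits in a {NODE_MEMORY_GB} GB node: {gb < NODE_MEMORY_GB}")
```

Under these assumptions the bf16 weights take roughly 246 GB, leaving headroom on such a node for the long-context KV cache, which is consistent with the single-node positioning described above.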

Overall Performance

On evaluation metrics, Mistral Large 2 sets a new frontier for performance relative to serving cost. In particular, its pretrained version reaches 84.0% accuracy on the MMLU benchmark, a strong balance between performance and serving cost among open models.

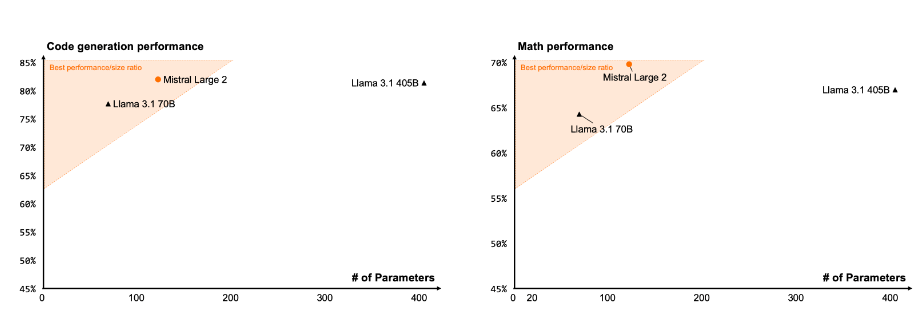

Code and Reasoning

Drawing on Mistral AI's experience training Codestral 22B and Codestral Mamba, Mistral Large 2 excels at code, performing on par with leading models such as GPT-4o, Claude 3 Opus, and Llama 3 405B.

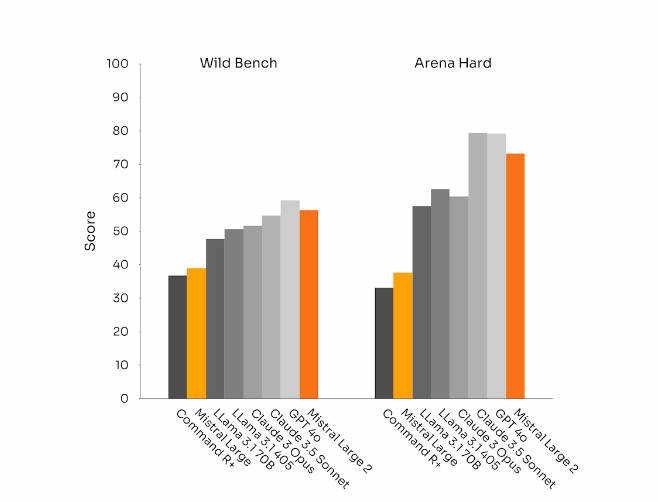

Instruction Following and Alignment

Mistral Large 2 also makes significant gains in instruction following and conversational ability, handling complex, multi-turn dialogues with greater flexibility. On some benchmarks, longer responses tend to score higher. In many commercial applications, however, brevity matters: shorter outputs make interactions faster and inference cheaper, so Mistral AI also worked to keep the model's responses concise where possible.

Language Diversity

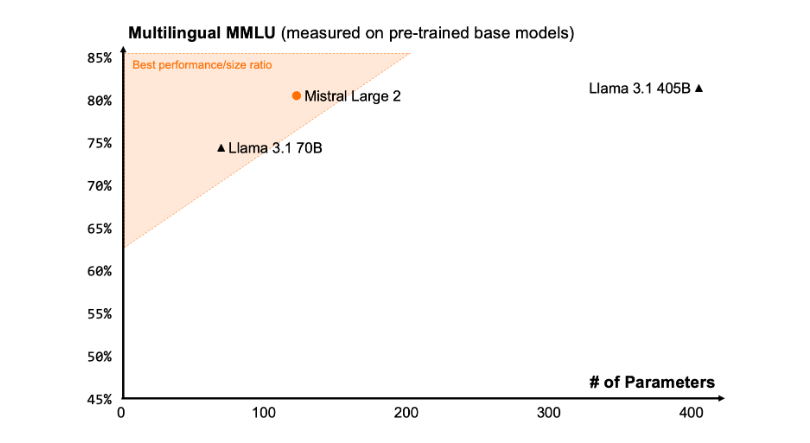

The new Mistral Large2 has been trained on a vast amount of multilingual data, particularly excelling in English, French, German, Spanish, Italian, Portuguese, Dutch, Russian, Chinese, Japanese, Korean, Arabic, and Hindi. Below are the performance results of Mistral Large2 on the multilingual MMLU benchmark, compared with previous models such as Mistral Large, Llama3.1, and Cohere's Command R+.

Tool Use and Function Calling

Mistral Large 2 comes with enhanced function calling and retrieval skills and has been trained to execute both parallel and sequential function calls reliably, allowing it to power complex business applications.
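Parallel function calling means the model can request several tool invocations in a single turn, which the application then executes and returns. The sketch below shows the client side of that loop under stated assumptions: the tool definition follows the JSON-Schema "function" format described in Mistral's function-calling guide (verify against the current API reference), `get_order_status` is a hypothetical tool, and the assistant message is simulated rather than a real model response.

```python
import json

# Tool schema in the JSON-Schema "function" format used for chat completions.
# get_order_status is a hypothetical example tool, not part of any real API.
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "get_order_status",
            "description": "Look up the shipping status of an order.",
            "parameters": {
                "type": "object",
                "properties": {"order_id": {"type": "string"}},
                "required": ["order_id"],
            },
        },
    }
]


def get_order_status(order_id: str) -> dict:
    # Hypothetical local implementation standing in for a real backend.
    fake_db = {"A1": "shipped", "B2": "processing"}
    return {"order_id": order_id, "status": fake_db.get(order_id, "unknown")}


REGISTRY = {"get_order_status": get_order_status}


def run_tool_calls(assistant_message: dict) -> list:
    """Execute every tool call in one assistant turn and build 'tool' messages."""
    results = []
    for call in assistant_message.get("tool_calls", []):
        name = call["function"]["name"]
        # Arguments are assumed to arrive as a JSON-encoded string.
        args = json.loads(call["function"]["arguments"])
        results.append({
            "role": "tool",
            "name": name,
            "tool_call_id": call["id"],
            "content": json.dumps(REGISTRY[name](**args)),
        })
    return results


# Simulated (not real) assistant turn containing two parallel calls.
simulated = {
    "role": "assistant",
    "content": "",
    "tool_calls": [
        {"id": "call_1", "function": {"name": "get_order_status", "arguments": '{"order_id": "A1"}'}},
        {"id": "call_2", "function": {"name": "get_order_status", "arguments": '{"order_id": "B2"}'}},
    ],
}

for msg in run_tool_calls(simulated):
    print(msg["tool_call_id"], msg["content"])
```

For sequential calls, the returned tool messages would be appended to the conversation and sent back to the model, which may then request further calls or produce a final answer.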

How to Use

Mistral Large 2 is available now on la Plateforme (https://console.mistral.ai/) under the API name mistral-large-2407, and can be tried in Le Chat. It is released as version 24.07, following the YY.MM versioning scheme Mistral AI applies to all its models. Weights for the instruct model are also available and hosted on Hugging Face (https://huggingface.co/mistralai/Mistral-Large-Instruct-2407).
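A minimal sketch of calling the model over HTTP with only the Python standard library, assuming Mistral's chat completions endpoint at `https://api.mistral.ai/v1/chat/completions` and an API key stored in a `MISTRAL_API_KEY` environment variable (the variable name is an assumption). The `model_version` helper is hypothetical and simply illustrates how the `2407` suffix maps to the YY.MM version 24.07. The request is only sent when a key is configured.

```python
import json
import os
import urllib.request

API_URL = "https://api.mistral.ai/v1/chat/completions"
MODEL = "mistral-large-2407"  # API name given in the announcement


def build_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a chat completion request for Mistral Large 2."""
    body = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Accept": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def model_version(model_name: str) -> str:
    """Hypothetical helper: map a YYMM model suffix to YY.MM, e.g. 2407 -> 24.07."""
    suffix = model_name.rsplit("-", 1)[-1]
    return f"{suffix[:2]}.{suffix[2:]}"


if __name__ == "__main__":
    print(model_version(MODEL))  # 24.07
    key = os.environ.get("MISTRAL_API_KEY")  # assumed environment variable name
    if key:  # only hit the network when a key is configured
        req = build_request("Summarize the Mistral Large 2 release in one sentence.", key)
        with urllib.request.urlopen(req) as resp:
            reply = json.load(resp)
        print(reply["choices"][0]["message"]["content"])
```

The official Mistral Python client wraps the same endpoint; the raw-HTTP version is shown here only to make the request shape explicit.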

Mistral AI is consolidating its offering on la Plateforme around two general-purpose models, Mistral Nemo and Mistral Large, and two specialist models, Codestral and Embed. As older models are gradually deprecated on la Plateforme, all Apache-licensed models (Mistral 7B, Mixtral 8x7B and 8x22B, Codestral Mamba, Mathstral) remain available for deployment and fine-tuning through the mistral-inference and mistral-finetune SDKs.

Starting today, Mistral AI is also extending the fine-tuning capabilities of la Plateforme, which are now available for Mistral Large, Mistral Nemo, and Codestral.

Mistral AI is also partnering with several leading cloud service providers to bring Mistral Large 2 to users worldwide, notably on Google Cloud Platform's Vertex AI.

**Key Points:**

🌟 Mistral Large 2 has a 128k context window and supports dozens of languages and more than 80 programming languages.

📈 It reaches 84.0% accuracy on the MMLU benchmark, offering a strong balance of performance and serving cost.

💻 The new model is available through la Plateforme and Le Chat, and is being rolled out on major cloud platforms such as Vertex AI.