A global study released by cloud security company Checkmarx reveals that although 15% of companies explicitly prohibit AI programming tools, development teams at nearly all organizations (99%) use them anyway. The gap shows how difficult it is to control the use of generative AI in real-world development.

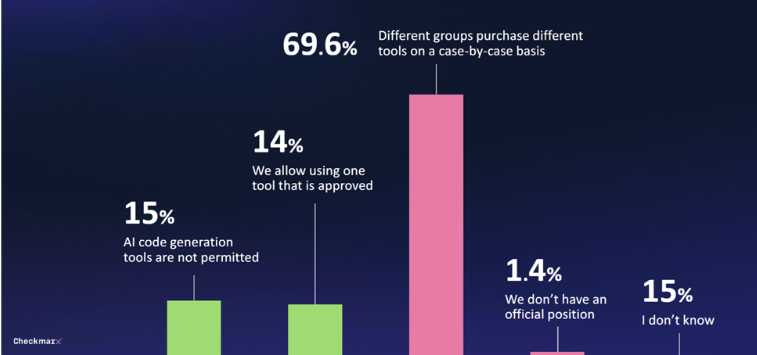

The study found that only 29% of companies have established any form of governance for generative AI tools. The remaining 70% have no central strategy. Instead, individual departments make ad hoc purchasing decisions without unified oversight.

As more developers adopt AI programming tools, security concerns are mounting. Eighty percent of respondents are worried about the threats posed by developers' use of AI, and 60% are specifically concerned about AI "hallucinations".

Despite these concerns, many respondents remain interested in what AI can do. Nearly half (47%) are willing to let AI make code changes without supervision, while only 6% say they have no trust at all in AI's security measures within software environments.

Kobi Tzruya, Chief Product Officer at Checkmarx, noted: "Responses from global CISOs reveal that developers are using AI for application development, despite these AI tools being unreliable in creating secure code, meaning security teams must deal with a large volume of newly generated, potentially vulnerable code."

Microsoft's Work Trend Index report similarly found that many employees bring their own AI tools to work when their employer does not provide any, often without disclosing it. This hinders the systematic adoption of generative AI in business processes.

Key Points:

1. 🚫 **15% of companies ban AI programming tools, yet development teams at 99% of organizations use them anyway**

2. 📊 **Only 29% of companies have established governance systems for generative AI tools**

3. 🔐 **47% of respondents are willing to allow AI to make unsupervised code changes**