Recently, a research team from the Swiss Federal Institute of Technology in Lausanne introduced a new method called ViPer (Visual Personalization of Generative Models via Individual Preference Learning), designed to personalize the output of generative models based on users' visual preferences.

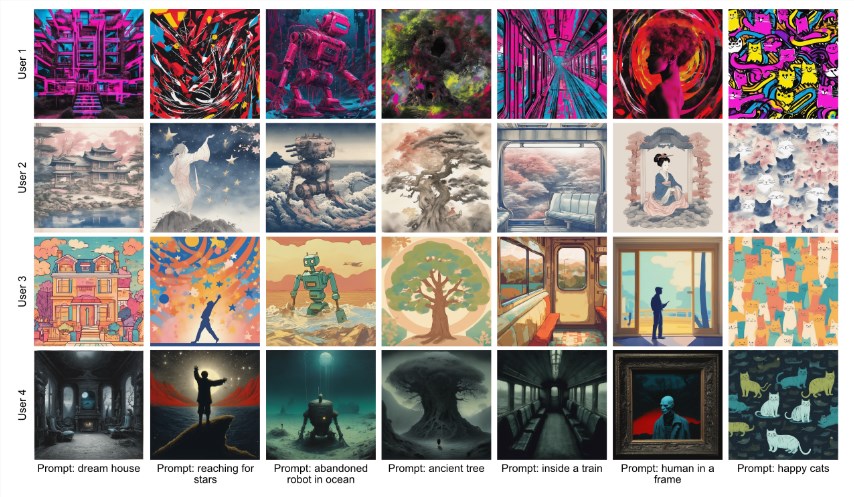

The work will be presented at the upcoming ECCV 2024 conference. The team's goal is for each user to receive results that match their personal preferences more closely, even when different users enter the same prompt.

Product Entry: https://top.aibase.com/tool/viper

The working principle of ViPer is straightforward. Users comment on a set of images just once, and ViPer extracts their visual preferences from those comments. The generative model then uses the extracted attributes to produce images that align with the user's taste, without requiring complex prompts.

In simple terms, this is somewhat similar to the personalization feature (--personalize) previously introduced by Midjourney. To use Midjourney's personalized model, users must already have liked images or rated them in paired rankings at least 200 times. These likes and ratings help Midjourney learn the user's preferences, so the personalized model reflects the user's taste more accurately.

The project's model has been released on the Hugging Face platform, where users can download and use it. The VPE model in ViPer has been fine-tuned to extract individual preferences from a series of images and comments provided by the user.

Additionally, the project offers a proxy metric model that predicts a preference score for a query image, based on images the user likes and dislikes. The score ranges from 0 to 1, with higher scores indicating that the user is more likely to prefer the image, so users can gauge how well a new image matches their taste. The team recommends that each user provide about 8 liked and 8 disliked images to ensure accurate results.
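To make the shape of such a score concrete, here is a minimal toy sketch in Python. It is not ViPer's proxy model, which is a learned network. It only imitates the interface described above (liked images, disliked images, and a query image in; a number between 0 and 1 out). Everything in it is an assumption made for illustration: images are represented by hypothetical embedding vectors, and the function name `toy_preference_score` and the `sharpness` parameter are invented here.

```python
import math
from typing import Sequence

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def toy_preference_score(
    query: Vector,
    liked: Sequence[Vector],
    disliked: Sequence[Vector],
    sharpness: float = 5.0,  # hypothetical knob: how steeply the score saturates
) -> float:
    """Toy stand-in for a preference score strictly between 0 and 1.

    Compares the query's average similarity to the liked set against its
    average similarity to the disliked set, then squashes the difference
    with a logistic function. This is an illustrative heuristic only.
    """
    if not liked or not disliked:
        raise ValueError("need at least one liked and one disliked example")
    mean_liked = sum(cosine(query, v) for v in liked) / len(liked)
    mean_disliked = sum(cosine(query, v) for v in disliked) / len(disliked)
    return 1.0 / (1.0 + math.exp(-sharpness * (mean_liked - mean_disliked)))


# Made-up 2-D "embeddings"; a real system would get these from an image encoder.
liked_examples = [[1.0, 0.0], [0.9, 0.1]]
disliked_examples = [[0.0, 1.0], [0.1, 0.9]]

score_near_liked = toy_preference_score([1.0, 0.0], liked_examples, disliked_examples)
score_near_disliked = toy_preference_score([0.0, 1.0], liked_examples, disliked_examples)
```

In this toy setup, a query that resembles the liked examples scores above 0.5 and one that resembles the disliked examples scores below 0.5. The sketch would accept any number of examples, but the team's suggestion of about 8 liked and 8 disliked images applies to their model, not to this heuristic.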

Key Points:

🌟 ViPer extracts individual visual preferences through a one-time user review, thereby personalizing the output of the generative model.

🖼️ The project's model has been released on Hugging Face, where users can download and use it.

📊 ViPer provides a proxy scoring mechanism to help users predict their preference level for new images.