Recently, Wenyi Yu from the National University of Singapore and their team introduced a new technology called video-SALMONN. It understands not only the sequence of visual frames, audio events, and music in a video but also, more importantly, the speech within it. This development marks a significant step forward in enabling machines to understand video content.

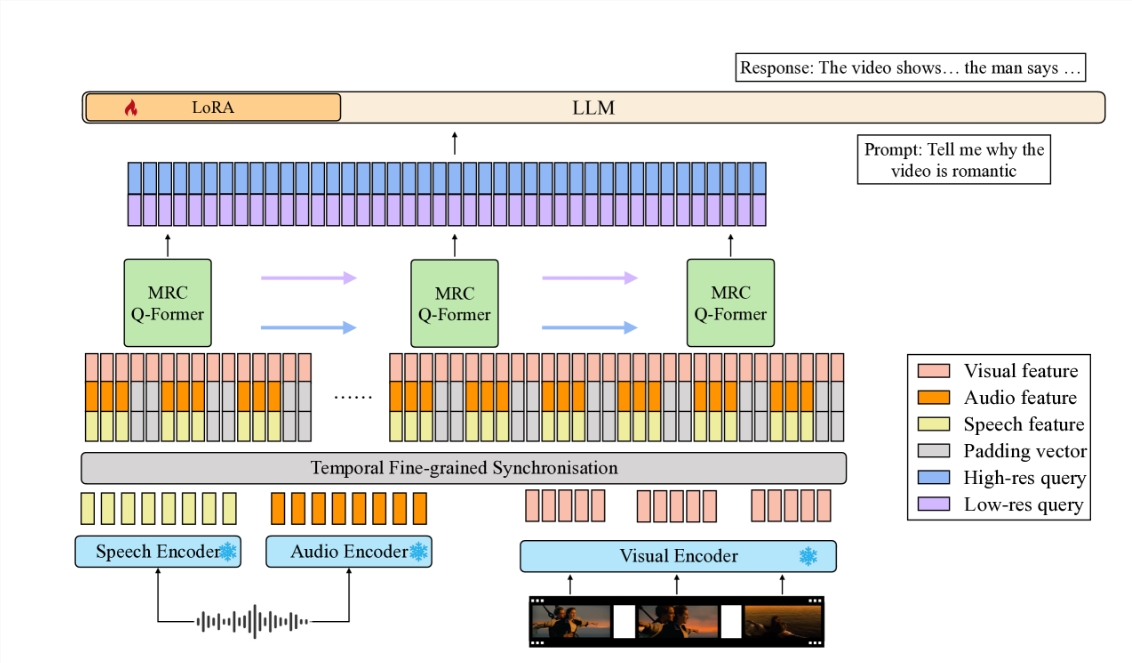

video-SALMONN is an end-to-end audio-visual large language model (av-LLM). It connects pre-trained audio-visual encoders to the backbone large language model through a novel multi-resolution causal Q-Former (MRC Q-Former). This architecture captures the fine-grained temporal information that speech understanding requires while still processing the other video elements efficiently.

To keep the model balanced across different video elements, the research team proposed dedicated training methods, including a diversity loss and an unpaired audio-visual mixed training strategy, which prevent particular video frames or a single modality from dominating.
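To make the idea of a diversity loss concrete, here is a minimal sketch in plain Python. It assumes the penalty is the average pairwise cosine similarity between the Q-Former's output query vectors, so that queries encoding the same information are penalized; the function names and this exact form are our own illustration and may differ from the formulation in the paper.

```python
import math
from typing import List

Vector = List[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat zero vectors as uncorrelated
    return dot / (norm_a * norm_b)


def diversity_penalty(query_outputs: List[Vector]) -> float:
    """Mean pairwise cosine similarity between distinct query outputs.

    Hypothetical form: lower values mean the queries carry more varied
    information, so adding this term to the training loss pushes the
    queries apart.
    """
    n = len(query_outputs)
    if n < 2:
        return 0.0  # nothing to compare
    total = 0.0
    pairs = 0
    for i in range(n):
        for j in range(i + 1, n):
            total += cosine_similarity(query_outputs[i], query_outputs[j])
            pairs += 1
    return total / pairs
```

Identical queries give a penalty of 1.0 and mutually orthogonal queries give 0.0. In actual training the penalty would be computed on tensors and weighted against the main language-modeling loss; the weighting is not specified here.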

On the newly introduced Speech-Audio-Visual Evaluation (SAVE) benchmark, video-SALMONN achieved more than 25% absolute accuracy improvement on video question answering (video-QA) and more than 30% absolute accuracy improvement on audio-visual question answering involving human speech. It also demonstrated strong video understanding and reasoning on tasks that no previous av-LLM had accomplished.
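The gains above are absolute, meaning they are differences in percentage points, not percentages of the baseline score. The short Python sketch below shows the difference; the accuracy values in it are hypothetical and chosen only to illustrate the arithmetic, not results reported for video-SALMONN or any baseline.

```python
def absolute_gain(baseline_acc: float, new_acc: float) -> float:
    """Improvement in percentage points (what 'absolute' refers to)."""
    return new_acc - baseline_acc


def relative_gain(baseline_acc: float, new_acc: float) -> float:
    """Improvement expressed as a percentage of the baseline accuracy."""
    return (new_acc - baseline_acc) / baseline_acc * 100.0


# Hypothetical accuracies (in %), for illustration only.
baseline, improved = 40.0, 65.0
print(absolute_gain(baseline, improved))  # 25.0 percentage points
print(relative_gain(baseline, improved))  # 62.5 (% of the baseline)
```

The same 25-point absolute gain would correspond to a different relative gain for every baseline, which is why absolute figures are easier to compare across tasks.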

At the core of video-SALMONN is the multi-resolution causal (MRC) Q-Former structure, which aligns synchronized audio-visual input features with the text representation space at three different time scales, because different tasks depend on video elements at different temporal granularities. To strengthen the temporal causal relationships between consecutive video frames, the MRC Q-Former also includes a causal self-attention structure with a special causal mask.
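The two ideas in the MRC Q-Former, processing features at several time resolutions and restricting attention causally across frames, can be sketched in plain Python as below. This is an illustration under stated assumptions, not the paper's implementation: the window sizes (1, 4, and 8 frames) are hypothetical, windows are assumed to be non-overlapping, and the block-causal mask (tokens of a frame may attend only to tokens of the same or earlier frames) is a generic causal mask; exactly which tensors the paper's special mask applies to is not stated here.

```python
from typing import List, Tuple

Mask = List[List[bool]]


def split_into_windows(num_frames: int, window_size: int) -> List[Tuple[int, int]]:
    """Return [start, end) frame ranges of non-overlapping windows.

    Assumption: windows do not overlap; the last window may be shorter
    if num_frames is not a multiple of window_size.
    """
    return [
        (start, min(start + window_size, num_frames))
        for start in range(0, num_frames, window_size)
    ]


def frame_causal_mask(num_frames: int, tokens_per_frame: int) -> Mask:
    """Block lower-triangular mask over frame tokens.

    mask[q][k] is True when token q may attend to token k, i.e. when
    k belongs to the same frame as q or to an earlier frame.
    """
    size = num_frames * tokens_per_frame
    return [
        [k // tokens_per_frame <= q // tokens_per_frame for k in range(size)]
        for q in range(size)
    ]


# Hypothetical window sizes (in frames), from fine to coarse resolution.
WINDOW_SIZES = (1, 4, 8)
NUM_FRAMES = 8

for w in WINDOW_SIZES:
    print(w, split_into_windows(NUM_FRAMES, w))

# Two frames with two tokens each: frame 0 cannot see frame 1.
for row in frame_causal_mask(num_frames=2, tokens_per_frame=2):
    print(row)
```

Small windows keep the fine-grained timing that speech needs, while large windows summarize longer spans more cheaply; the mask ensures that, within a window, information flows only forward in time.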

video-SALMONN not only gives the academic community a new research tool but also opens up a wide range of practical applications. It can make interaction between people and technology more natural and intuitive, lowering the barrier for users who find technology hard to learn, especially children and the elderly. It could also make technology more accessible to people with mobility impairments.

The development of video-SALMONN is a significant step towards Artificial General Intelligence (AGI). By adding speech input to the non-speech audio and visual inputs that existing models already handle, such models can gain a more comprehensive understanding of human interactions and environments, enabling applications across a broader range of fields.

This technology is likely to have a significant impact on video content analysis, educational applications, and people's quality of life. As the technology continues to advance, there is good reason to expect future AI systems to become smarter and better aligned with human needs.

Paper link: https://arxiv.org/html/2406.15704v1