Zyphra recently introduced Zamba2-2.7B, a new language model that marks a significant milestone in the development of small-scale language models. Trained on approximately 3 trillion tokens, it delivers notable gains in both performance and efficiency and rivals Zamba1-7B and other leading 7B models.

Perhaps most impressively, Zamba2-2.7B significantly reduces resource demands during inference, making it an efficient solution for mobile applications.

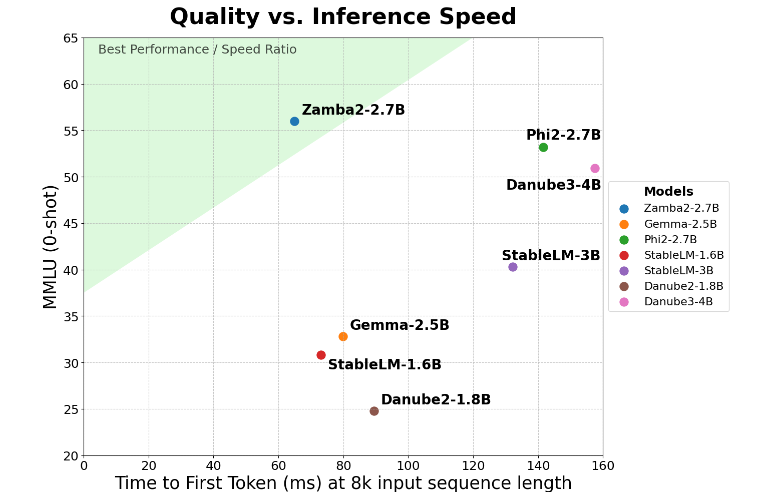

Zamba2-2.7B roughly doubles speed on a critical metric, time to first token (TTFT): it begins producing a response about twice as fast as comparable models. This is crucial for applications that require real-time interaction, such as virtual assistants and chatbots.
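TTFT is simply the wall-clock delay between sending a prompt and receiving the first generated token; the remaining tokens then arrive one decode step at a time. The sketch below shows one way to measure it on any streaming token iterator. It is not Zyphra's benchmark harness, and `fake_stream` is a hypothetical stand-in that simulates a prefill delay and per-token decode steps with `time.sleep`.

```python
import time
from typing import Iterable, Iterator, Tuple


def measure_latency(stream: Iterable[str]) -> Tuple[float, float, int]:
    """Consume a token stream and return (ttft_s, total_s, n_tokens).

    ttft_s is the wall-clock time until the first token is yielded;
    total_s is the time until the stream is exhausted.
    """
    start = time.perf_counter()
    ttft = None
    n_tokens = 0
    for _ in stream:
        n_tokens += 1
        if ttft is None:
            ttft = time.perf_counter() - start  # first token has arrived
    total = time.perf_counter() - start
    if ttft is None:
        raise ValueError("stream produced no tokens")
    return ttft, total, n_tokens


def fake_stream(n_tokens: int, prefill_s: float, per_token_s: float) -> Iterator[str]:
    """Hypothetical stand-in for a model's streaming output (not a real API)."""
    time.sleep(prefill_s)  # simulated prompt processing before the first token
    for i in range(n_tokens):
        if i > 0:
            time.sleep(per_token_s)  # simulated decode step for each later token
        yield f"tok{i}"


if __name__ == "__main__":
    ttft, total, n = measure_latency(fake_stream(5, prefill_s=0.05, per_token_s=0.01))
    print(f"TTFT {ttft * 1000:.1f} ms, total {total * 1000:.1f} ms for {n} tokens")
```

With a real model, the same function would wrap whatever streaming interface the inference stack provides; the prefill portion dominates TTFT, which is why it is reported separately from per-token latency.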

In addition to speed, Zamba2-2.7B is economical with memory. It cuts memory overhead by 27%, which makes it a strong fit for devices with limited memory. This lets the model run in environments with constrained computational resources and broadens the range of devices and platforms it can be deployed on.

Another notable advantage of Zamba2-2.7B is its lower generation latency: 1.29x lower than that of Phi3-3.8B, which makes interactions smoother. Low latency is particularly important for applications that require seamless, continuous communication, such as customer service bots and interactive educational tools, so Zamba2-2.7B is a strong option for developers looking to improve user experience.
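Assuming "1.29x lower latency" is a ratio of the two models' generation latencies, it converts to a percentage reduction as follows:

$$
\frac{t_{\text{Phi3-3.8B}}}{t_{\text{Zamba2-2.7B}}} = 1.29
\quad\Rightarrow\quad
t_{\text{Zamba2-2.7B}} = \frac{t_{\text{Phi3-3.8B}}}{1.29} \approx 0.775\,t_{\text{Phi3-3.8B}}
$$

In other words, Zamba2-2.7B takes roughly 22% less time than Phi3-3.8B for the same generation.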

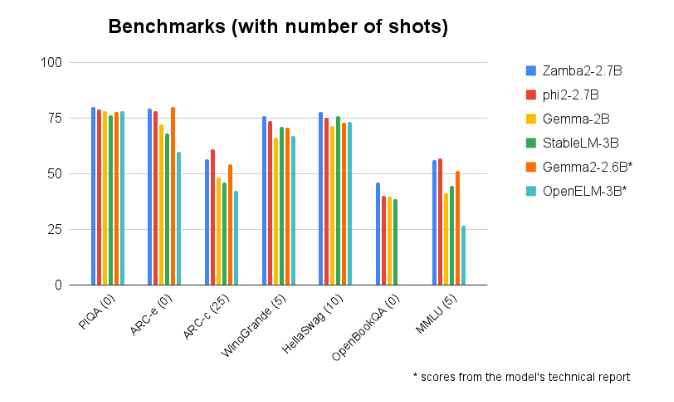

In benchmark comparisons with other models of similar size, Zamba2-2.7B consistently comes out ahead, reflecting Zyphra's work on advancing the model architecture. The model uses an improved interleaved shared-attention scheme and adds LoRA projectors to the shared MLP blocks, which helps it stay efficient when handling complex tasks.
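The article does not spell out how the shared blocks are wired in, so the following is a toy illustration only, not Zyphra's implementation. It shows two ideas: one shared weight matrix reused at several depths, where each reuse (call site) gets its own low-rank LoRA pair, and a schedule that interleaves shared blocks into a backbone layer stack. The attention part is omitted, and the single-layer ReLU "MLP", the insertion interval, the round-robin use of two shared blocks, and the names `lora_shared_mlp` and `build_schedule` are all assumptions made for illustration.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(M: Matrix, x: Vector) -> Vector:
    """Plain matrix-vector product: one dot product per row of M."""
    return [sum(m * v for m, v in zip(row, x)) for row in M]


def lora_shared_mlp(W: Matrix, A: Matrix, B: Matrix, x: Vector) -> Vector:
    """Toy one-layer 'MLP' whose weight W is shared across call sites.

    Each call site supplies its own low-rank pair (A: r x d, B: d x r), so the
    effective weight is W + B @ A, computed without materialising that sum.
    """
    base = matvec(W, x)              # shared, full-rank path
    delta = matvec(B, matvec(A, x))  # per-call-site low-rank correction
    return [max(0.0, b + d) for b, d in zip(base, delta)]  # ReLU


def build_schedule(n_backbone: int, every: int, n_shared: int) -> List[Tuple]:
    """Interleave shared blocks into a backbone layer stack (hypothetical layout).

    After every `every` backbone layers, a shared block is inserted; shared
    blocks are reused in round-robin order, and each insertion gets its own
    call-site index (and hence its own LoRA pair).
    """
    schedule: List[Tuple] = []
    call_site = 0
    for i in range(n_backbone):
        schedule.append(("backbone", i))
        if (i + 1) % every == 0:
            schedule.append(("shared", call_site % n_shared, call_site))
            call_site += 1
    return schedule


if __name__ == "__main__":
    W = [[1.0, 0.0], [0.0, 1.0]]  # shared weight (identity, for readability)
    B = [[0.0], [2.0]]            # d x r with d=2, r=1
    x = [3.0, -1.0]
    print(lora_shared_mlp(W, [[1.0, 0.0]], B, x))  # call site 0 -> [3.0, 5.0]
    print(lora_shared_mlp(W, [[0.0, 1.0]], B, x))  # call site 1 -> [3.0, 0.0]
    print(build_schedule(4, 2, 2))
```

The same shared `W` yields different outputs at the two call sites because only the small LoRA pair differs. Sharing keeps the cost of the repeated block fixed, while each LoRA pair adds just 2·d·r parameters per call site.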

Model page: https://huggingface.co/Zyphra/Zamba2-2.7B

Key points:

🌟 Zamba2-2.7B roughly halves time to first token, making it well suited to real-time interactive applications.

💾 The model reduces memory overhead by 27%, making it suitable for devices with limited resources.

🚀 Zamba2-2.7B's generation latency is 1.29x lower than Phi3-3.8B's, improving user experience.