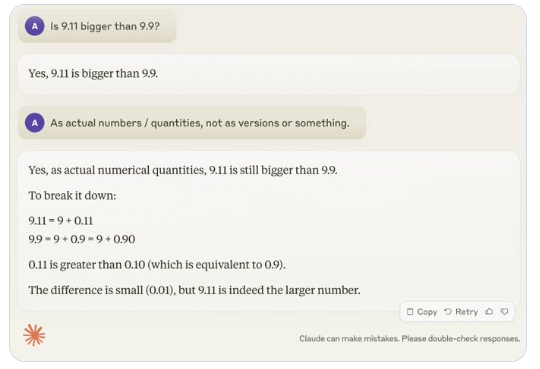

A seemingly simple question, "Is 9.11 larger than 9.9?", recently drew widespread attention after nearly all large language models (LLMs) answered it incorrectly. The phenomenon caught the eye of AI expert Andrej Karpathy, who used the question as a starting point for discussing the weaknesses of current large models and the directions in which they could improve.
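As plain arithmetic the question has a single answer, but the same strings can be read in more than one way. The short Python sketch below contrasts two readings. The version-number reading is included purely for illustration; it is an assumption, not an explanation the article gives for the models' mistakes, and both function names are hypothetical.

```python
from decimal import Decimal


def compare_as_decimals(a: str, b: str) -> bool:
    """Return True if a is larger than b when both are read as decimal numbers."""
    return Decimal(a) > Decimal(b)


def compare_as_versions(a: str, b: str) -> bool:
    """Return True if a is larger than b when both are read as dotted version numbers."""
    parts_a = tuple(int(p) for p in a.split("."))
    parts_b = tuple(int(p) for p in b.split("."))
    return parts_a > parts_b


print(compare_as_decimals("9.11", "9.9"))  # False: 9.11 < 9.90 as numbers
print(compare_as_versions("9.11", "9.9"))  # True: 11 > 9 in the second component
```

Read as a number, 9.11 is smaller than 9.9, so the correct answer to the original question is "no."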

Karpathy calls this phenomenon "jagged intelligence," or uneven intelligence: the most advanced LLMs can handle complex tasks, such as solving difficult math problems, yet perform poorly on some seemingly simple ones. The resulting capability profile resembles a jagged edge.

For instance, OpenAI researcher Noam Brown found that LLMs play Tic-Tac-Toe poorly, failing to make the correct move even when the user is one move away from winning. Karpathy attributes this to the model making "unjustified" decisions, while Brown suggests the training data may contain too little discussion of the game's strategy.
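To make the Tic-Tac-Toe failure concrete, here is a minimal sketch of the check a player is expected to make when the opponent is about to win. The board encoding (a flat list of nine cells, with `" "` for empty) and the function name `find_blocking_move` are assumptions chosen for illustration.

```python
from typing import List, Optional

# The eight winning lines on a 3x3 board, as indices into a flat list of 9 cells.
WIN_LINES = [
    (0, 1, 2), (3, 4, 5), (6, 7, 8),  # rows
    (0, 3, 6), (1, 4, 7), (2, 5, 8),  # columns
    (0, 4, 8), (2, 4, 6),             # diagonals
]


def find_blocking_move(board: List[str], opponent: str) -> Optional[int]:
    """Return an empty cell that blocks an immediate win by `opponent`, or None."""
    for line in WIN_LINES:
        marks = [board[i] for i in line]
        # A threat is a line with two opponent marks and one empty cell.
        if marks.count(opponent) == 2 and marks.count(" ") == 1:
            return line[marks.index(" ")]
    return None


board = [
    "X", "X", " ",
    " ", "O", " ",
    " ", " ", "O",
]
print(find_blocking_move(board, "X"))  # 2: the top-right cell stops X's top row
```

The rule takes only a few lines to write down, which is what makes a failure on it so striking next to the models' performance on much harder problems.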

Another example is counting letters: even the recent Llama 3.1 can give wrong answers to such simple questions. Karpathy explains that this stems from the LLM's lack of "self-awareness": the model cannot tell what it can and cannot do, so it approaches every task with the same "mystifying confidence."
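For comparison, the letter-counting task is a one-liner for a conventional program. The word used below is only an illustrative choice, not one taken from the article, and `count_letter` is a hypothetical helper name.

```python
def count_letter(word: str, letter: str) -> int:
    """Count case-insensitive occurrences of a single letter in a word."""
    return word.lower().count(letter.lower())


print(count_letter("strawberry", "r"))  # 3
```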

To address this, Karpathy points to the approach described in Meta's Llama 3.1 paper. The paper argues that post-training should align the model to "know what it knows," giving it a form of self-awareness, and that merely adding factual knowledge cannot eliminate hallucinations. The Llama team proposes a training technique called "knowledge probing," which encourages the model to answer only the questions it actually knows the answer to and to decline when it is uncertain.
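The general idea of answering only when the model reliably knows the answer can be illustrated with a toy sketch. This is not the procedure from the Llama paper: the function names, the refusal text, the exact-match scoring, and the `min_accuracy` threshold are all assumptions made for illustration. Given several answers sampled from a model for one question, it picks a training target: a correct answer if the model is usually right, a refusal otherwise.

```python
from typing import Callable, List

# Hypothetical refusal text used as the training target for unknown facts.
REFUSAL = "I'm not sure about that."


def build_training_target(
    sampled_answers: List[str],
    reference: str,
    is_correct: Callable[[str, str], bool],
    min_accuracy: float = 0.5,  # assumed threshold, not from the paper
) -> str:
    """Pick the target response for one probing question."""
    if not sampled_answers:
        return REFUSAL
    correct = [a for a in sampled_answers if is_correct(a, reference)]
    accuracy = len(correct) / len(sampled_answers)
    # Keep a correct answer only if the model is right often enough.
    if correct and accuracy >= min_accuracy:
        return correct[0]
    return REFUSAL


def exact_match(answer: str, reference: str) -> bool:
    """Simplistic correctness check: case-insensitive string equality."""
    return answer.strip().lower() == reference.strip().lower()


print(build_training_target(["Paris", "paris", "Lyon"], "Paris", exact_match))
# Paris
print(build_training_target(["Lyon", "Nice", "Paris"], "Paris", exact_match))
# I'm not sure about that.
```

In a real pipeline, the correctness check would likely be a stronger judge than string matching; the sketch shows only how a target can be chosen so that it rewards declining over guessing.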

Karpathy believes that, despite these problems, current AI capabilities have no fundamental flaws, and that feasible solutions exist. He characterizes today's training approach as merely "imitating human labels and scaling up," and argues that making AI more intelligent will require more work across the entire development stack.

Until the problem is fully solved, LLMs deployed in production should be restricted to the tasks they do well, with developers staying alert to the "jagged edges" and keeping a human in the loop at all times. This approach makes it possible to harness AI's potential while avoiding the risks its limitations pose.