Research on 3D digital humans has advanced considerably, but earlier methods still struggle with multi-view consistency and limited emotional expressiveness. To address both problems, a research team from Nanjing University, Fudan University, and Huawei Noah's Ark Lab has proposed a new approach called EmoTalk3D.

Project page: https://nju-3dv.github.io/projects/EmoTalk3D/

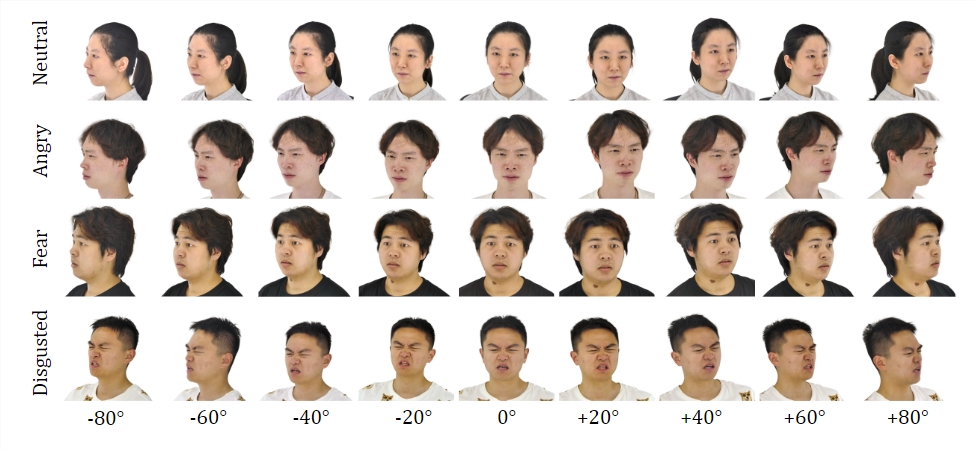

The team collected the EmoTalk3D dataset, which includes calibrated multi-view videos, emotion annotations, and per-frame 3D geometry. Building on it, they propose a method for synthesizing 3D talking heads with controllable emotions, reporting significantly better lip synchronization and rendering quality.


Trained on the EmoTalk3D dataset, the framework maps "speech to geometry to appearance." It first predicts a realistic 3D geometry sequence from audio features, then synthesizes the appearance of the 3D talking head, represented by 4D Gaussians, from the predicted geometry. The appearance is further decomposed into canonical (standard) and dynamic Gaussians, which are learned from multi-view videos and fused to render free-viewpoint talking head animations.
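One way to picture the fusion step is as a static set of Gaussians plus per-frame corrections. The sketch below is only an illustration under that assumption: the article says the canonical (or "standard") and dynamic Gaussians are fused but does not say how, so the additive rule, the `Gaussian` and `GaussianDelta` types, and the `fuse` function are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class Gaussian:
    """Simplified Gaussian primitive (hypothetical; real ones also carry scale and rotation)."""
    position: Vec3
    color: Vec3
    opacity: float


@dataclass(frozen=True)
class GaussianDelta:
    """Per-frame change predicted for one Gaussian (hypothetical)."""
    d_position: Vec3
    d_color: Vec3
    d_opacity: float


def _add(a: Vec3, b: Vec3) -> Vec3:
    return tuple(x + y for x, y in zip(a, b))


def _clamp01(value: float) -> float:
    return max(0.0, min(1.0, value))


def fuse(canonical: List[Gaussian], deltas: List[GaussianDelta]) -> List[Gaussian]:
    """Assumed fusion rule: add each dynamic delta to its canonical Gaussian."""
    if len(canonical) != len(deltas):
        raise ValueError("expected one delta per canonical Gaussian")
    fused = []
    for g, d in zip(canonical, deltas):
        fused.append(
            Gaussian(
                position=_add(g.position, d.d_position),
                # Keep color and opacity in the valid [0, 1] range.
                color=tuple(_clamp01(c) for c in _add(g.color, d.d_color)),
                opacity=_clamp01(g.opacity + d.d_opacity),
            )
        )
    return fused


# One canonical Gaussian, shifted slightly upward for the current frame.
canonical = [Gaussian((0.0, 0.0, 0.0), (0.5, 0.5, 0.5), 0.9)]
deltas = [GaussianDelta((0.0, 0.5, 0.0), (0.25, 0.0, 0.0), 0.25)]
frame_gaussians = fuse(canonical, deltas)
```

Keeping the static part separate means identity-level appearance is stored once, while only the per-frame deltas need to vary with speech and emotion.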

The model generates talking heads with controllable emotions and renders them across a wide range of viewing angles. It captures dynamic facial details such as wrinkles and subtle expressions while improving rendering quality and the stability of lip motion. In the generated examples, the 3D digital human accurately conveys happiness, anger, and frustration.

The overall pipeline consists of five modules:

1. An emotion-content decomposition encoder, which extracts content and emotion features from the input speech.
2. A speech-to-geometry network, which predicts dynamic 3D point clouds from those features.
3. A Gaussian optimization and completion module, which establishes the canonical appearance.
4. A geometry-to-appearance network, which synthesizes facial appearance from the dynamic 3D point clouds.
5. A rendering module, which renders the dynamic Gaussians into free-viewpoint animations.
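The data flow between the five modules can be sketched as a simple pipeline. This is a minimal illustration of the order of operations described above, not the team's implementation: every class, function, and field name is hypothetical, and the toy stand-ins only mimic the shape of each module's input and output.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple

Point = Tuple[float, float, float]
PointCloud = List[Point]


@dataclass
class SpeechFeatures:
    content: List[float]  # what is being said
    emotion: List[float]  # how it is being said


@dataclass
class TalkingHeadPipeline:
    encoder: Callable[[Sequence[float]], SpeechFeatures]              # module 1
    speech_to_geometry: Callable[[SpeechFeatures], List[PointCloud]]  # module 2
    canonical_appearance: dict                                        # output of module 3
    geometry_to_appearance: Callable[[dict, PointCloud], dict]        # module 4
    renderer: Callable[[dict, str], str]                              # module 5

    def run(self, audio: Sequence[float], view: str) -> List[str]:
        features = self.encoder(audio)
        point_clouds = self.speech_to_geometry(features)
        frames = []
        for cloud in point_clouds:
            gaussians = self.geometry_to_appearance(self.canonical_appearance, cloud)
            frames.append(self.renderer(gaussians, view))
        return frames


# Toy stand-ins (assumptions) so the sketch runs end to end.
def toy_encoder(audio: Sequence[float]) -> SpeechFeatures:
    half = len(audio) // 2
    return SpeechFeatures(content=list(audio[:half]), emotion=list(audio[half:]))


def toy_speech_to_geometry(features: SpeechFeatures) -> List[PointCloud]:
    # One single-point cloud per content value; emotion shifts the point vertically.
    lift = sum(features.emotion)
    return [[(c, lift, 0.0)] for c in features.content]


def toy_geometry_to_appearance(canonical: dict, cloud: PointCloud) -> dict:
    return {"subject": canonical["subject"], "num_points": len(cloud)}


def toy_renderer(gaussians: dict, view: str) -> str:
    return f"{gaussians['subject']}@{view}:{gaussians['num_points']}pts"


pipeline = TalkingHeadPipeline(
    encoder=toy_encoder,
    speech_to_geometry=toy_speech_to_geometry,
    canonical_appearance={"subject": "demo"},
    geometry_to_appearance=toy_geometry_to_appearance,
    renderer=toy_renderer,
)
frames = pipeline.run([0.1, 0.2, 0.3, 0.4], view="front")
```

In this sketch the canonical appearance is a fixed input built once per subject, while the other modules run for every utterance. Changing `view` only affects the final rendering call, which mirrors the free-viewpoint property described in the article.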

The EmoTalk3D dataset, a multi-view talking head dataset with per-frame 3D facial shapes and emotion annotations, will be made publicly available for non-commercial research purposes.

Key Points:

💥 Introduced a new method for synthesizing digital humans with controllable emotions.

🎯 Constructed a mapping framework from "speech to geometry to appearance."

👀 Established the EmoTalk3D dataset, which will be released publicly for non-commercial research.