Natural Language Processing (NLP) is advancing rapidly. Large Language Models (LLMs) can now perform complex language tasks with high accuracy, opening up new possibilities for human-computer interaction. However, evaluating these models still depends heavily on human annotations, and this is a significant problem for the field.

Human-generated data is crucial for training and validating models, but collecting it is expensive and time-consuming. Moreover, as models improve, previously collected annotations may need to be updated, so they lose value for evaluating newer models. New data must therefore be collected continuously, which makes effective model evaluation hard to scale and sustain.

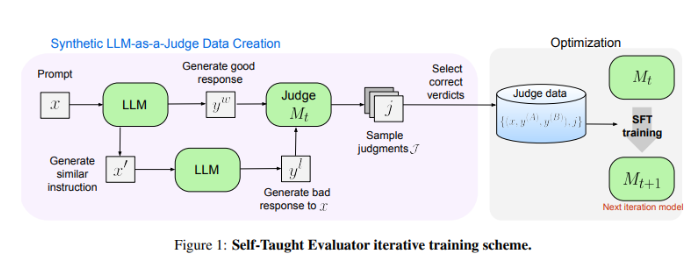

Researchers at Meta FAIR have introduced a new approach called the "Self-Taught Evaluator," which removes the need for human annotations by training on synthetically generated data. Starting from a seed model, it generates contrastive synthetic preference pairs, that is, pairs of responses in which one is designed to be better than the other. The model then evaluates these pairs, and its own judgments serve as training data for the next iteration, so each round improves on the last without relying on human-generated annotations.
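The iterative loop described above can be sketched in Python. This is a minimal illustration under stated assumptions, not Meta FAIR's implementation: the `judge` and `fine_tune` callables stand in for an LLM and its training step, and the names `PreferencePair`, `build_training_set`, and `self_taught_loop` are hypothetical. The sketch assumes that, because the better response in each synthetic pair is known by construction, judgments that pick it can be kept as training targets; randomly swapping the order of the two responses is also an assumption added here.

```python
import random
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class PreferencePair:
    instruction: str
    chosen: str    # response designed to be better (known by construction)
    rejected: str  # response designed to be worse


# Hypothetical judge interface: (instruction, response_a, response_b) -> (reasoning, verdict),
# where verdict is "A" or "B".
Judge = Callable[[str, str, str], Tuple[str, str]]
# A training example: (instruction, response_a, response_b, reasoning that reached the right verdict).
Example = Tuple[str, str, str, str]


def build_training_set(
    pairs: List[PreferencePair],
    judge: Judge,
    samples_per_pair: int = 5,
    rng: Optional[random.Random] = None,
) -> List[Example]:
    """Sample several judgments per pair and keep one whose verdict matches the known label."""
    if rng is None:
        rng = random.Random(0)
    kept: List[Example] = []
    for pair in pairs:
        # Assumption: randomly swap positions so the judge cannot rely on response order.
        if rng.random() < 0.5:
            a, b, correct = pair.chosen, pair.rejected, "A"
        else:
            a, b, correct = pair.rejected, pair.chosen, "B"
        good_traces = []
        for _ in range(samples_per_pair):
            reasoning, verdict = judge(pair.instruction, a, b)
            if verdict == correct:
                good_traces.append(reasoning)
        # Pairs the judge never gets right contribute no training data this round.
        if good_traces:
            kept.append((pair.instruction, a, b, rng.choice(good_traces)))
    return kept


def self_taught_loop(
    pairs: List[PreferencePair],
    judge: Judge,
    fine_tune: Callable[[List[Example]], Judge],
    iterations: int = 3,
) -> Judge:
    """Each round trains a new judge (hypothetical fine_tune) on the previous judge's filtered judgments."""
    for _ in range(iterations):
        judge = fine_tune(build_training_set(pairs, judge))
    return judge
```

Because the preferred response is fixed when each pair is constructed, filtering the judge's own outputs in this sketch requires no human labels at any step.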

The researchers evaluated the Self-Taught Evaluator using Llama-3-70B-Instruct as the seed model. After several iterations, the method raised the model's accuracy on the RewardBench benchmark from 75.4 to 88.3 with a single inference, and to 88.7 with majority voting over multiple sampled judgments. These results match or even surpass those of models trained with human annotations.
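Majority voting, mentioned above, can be illustrated with a small aggregation function. This is a generic sketch rather than the paper's code: `sample_verdict` is a hypothetical stand-in for one stochastic call to the judge model, verdicts are assumed to be the labels "A" or "B", and the tie-breaking rule is an assumption chosen for determinism.

```python
from collections import Counter
from typing import Callable, Sequence


def majority_vote(verdicts: Sequence[str]) -> str:
    """Return the most frequent verdict; on a tie, the one that appeared first wins."""
    if not verdicts:
        raise ValueError("majority_vote needs at least one verdict")
    counts = Counter(verdicts)
    # Counter keeps insertion order, and max() returns the first maximal key it meets.
    return max(counts, key=counts.__getitem__)


def judge_with_majority(sample_verdict: Callable[[], str], n: int = 5) -> str:
    """Draw n verdicts from a stochastic judge (hypothetical callable) and aggregate them."""
    return majority_vote([sample_verdict() for _ in range(n)])


# Usage with a scripted stand-in for the judge: three "A" votes beat two "B" votes.
draws = iter(["A", "B", "A", "A", "B"])
print(judge_with_majority(lambda: next(draws)))  # → A
```

With only two possible verdicts, drawing an odd number of samples avoids ties altogether.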

The "Self-Taught Evaluator" offers a scalable and efficient approach to NLP model evaluation. By replacing human annotations with synthetic data and iterative self-improvement, it removes a major bottleneck in the development of language models.

Paper link: https://arxiv.org/abs/2408.02666

Key points:

- 😃 NLP model evaluation relies on human annotations, which are costly and slow to collect and lose value as models improve.

- 🤖 Meta FAIR introduces the "Self-Taught Evaluator," which trains on synthetic data, reducing reliance on human annotations.

- 💪 The "Self-Taught Evaluator" raised Llama-3-70B-Instruct's RewardBench accuracy from 75.4 to 88.3 (88.7 with majority voting), on par with models trained on human annotations.