Recently, Reddit user @zefman ran an intriguing experiment: a platform where various large language models (LLMs) play chess against one another in real time. The aim was to assess the models' performance in an entertaining, low-effort way.

It is well known that these models are not particularly good at chess, yet @zefman still found highlights worth watching in the experiment.

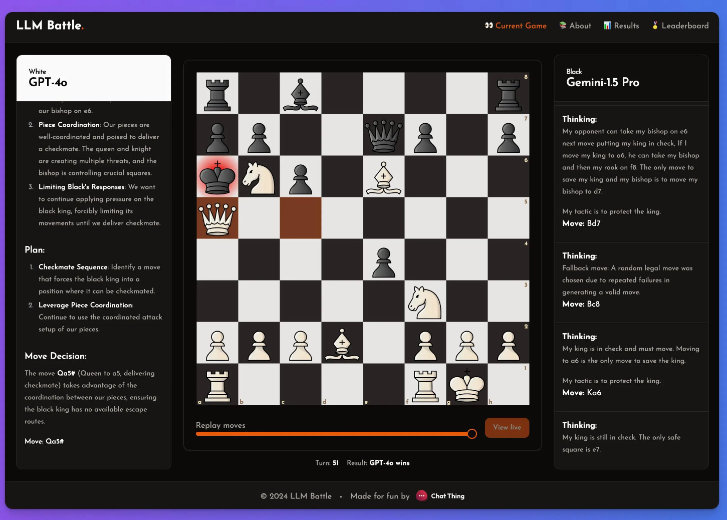

In this experiment, @zefman focused on several of the latest models, with GPT-4o standing out as the strongest contender. He also compared it with other models like Claude and Gemini, noting the intriguing differences in their thought processes and reasoning. Through this platform, viewers can see how each model analyzes the board behind every move.

@zefman's setup is straightforward. Every model receives the exact same prompt, which is updated each turn with an ASCII rendering of the board, the position in FEN notation, and the model's own previous two moves and thoughts. Because each model decides from the same information, the comparison is fairer.
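To make the prompt structure concrete, here is a minimal Python sketch of how such a prompt could be assembled. It is not @zefman's actual prompt or code: the function names `fen_to_ascii` and `build_prompt`, the wording of the instructions, and the layout are all assumptions for illustration. Only the three ingredients (ASCII board, FEN, last two moves and thoughts) come from the description above.

```python
def fen_to_ascii(fen: str) -> str:
    """Render the piece-placement field of a FEN string as an 8x8 text grid."""
    placement = fen.split()[0]  # first FEN field holds the piece layout
    rows = []
    for rank in placement.split("/"):
        cells = []
        for ch in rank:
            if ch.isdigit():
                # A digit in FEN means that many consecutive empty squares.
                cells.extend(["."] * int(ch))
            else:
                cells.append(ch)
        rows.append(" ".join(cells))
    return "\n".join(rows)


def build_prompt(fen: str, history: list[tuple[str, str]]) -> str:
    """Build one prompt from the board and the last two (move, thought) pairs.

    The prompt wording here is hypothetical, not taken from the experiment.
    """
    lines = [
        "You are playing chess. Current board:",
        fen_to_ascii(fen),
        f"FEN: {fen}",
    ]
    recent = history[-2:]  # only the previous two moves are included
    if recent:
        lines.append("Your previous moves and thoughts:")
        for move, thought in recent:
            lines.append(f"- {move}: {thought}")
    lines.append("Reply with your next move.")
    return "\n".join(lines)
```

Calling `build_prompt` with the same FEN and history for every model keeps the inputs identical, which is the property the experiment relies on for a fair comparison.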

Additionally, @zefman noticed that some models, particularly the weaker ones, would repeatedly choose invalid moves. To address this, he gave each model five chances to pick again. If it still could not produce a valid move, a random valid move was played to keep the game going.
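The retry-then-fallback rule can be sketched as a small loop. This is an illustrative Python sketch, not the experiment's code: `choose_move`, the `ask_model` callback, and representing moves as plain strings are assumptions, and "five chances" is read here as five attempts in total.

```python
import random
from typing import Callable, Optional, Sequence

MAX_ATTEMPTS = 5  # assumption: "five chances" means five attempts in total


def choose_move(
    ask_model: Callable[[int], str],
    legal_moves: Sequence[str],
    rng: Optional[random.Random] = None,
) -> tuple[str, bool]:
    """Return (move, used_fallback).

    ask_model is a hypothetical callback that takes the attempt number and
    returns the model's proposed move as text.
    """
    rng = rng or random.Random()
    for attempt in range(1, MAX_ATTEMPTS + 1):
        candidate = ask_model(attempt).strip()
        if candidate in legal_moves:
            return candidate, False  # the model produced a valid move
    # Every attempt was invalid: play a random valid move so the game continues.
    return rng.choice(list(legal_moves)), True
```

Returning the `used_fallback` flag alongside the move makes it easy to count how often each model needed the random fallback.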

His conclusion: GPT-4o remains the strongest, defeating Gemini 1.5 Pro.

Key Points:

🌟 GPT-4o was the strongest language model in the experiment.

♟️ The experiment has different models play chess in real time and shows their thought process behind each move.

🔄 Weaker models sometimes choose invalid moves; they get five chances to pick again before a random valid move is played.