In the realm of artificial intelligence, there is a unique group of "painters": the layers of a Transformer model. Like brushes passed from hand to hand, they paint a rich and varied world on the canvas of language. A recent paper, "Transformer Layers as Painters", offers a new perspective on how the intermediate layers of a Transformer work.

The Transformer is currently the dominant architecture for large language models, and such models often have billions of parameters. Each layer, like a painter, contributes to one large linguistic painting. But how do these "painters" work together? What distinguishes their "brushes" and "paints"? The paper attempts to answer these questions.

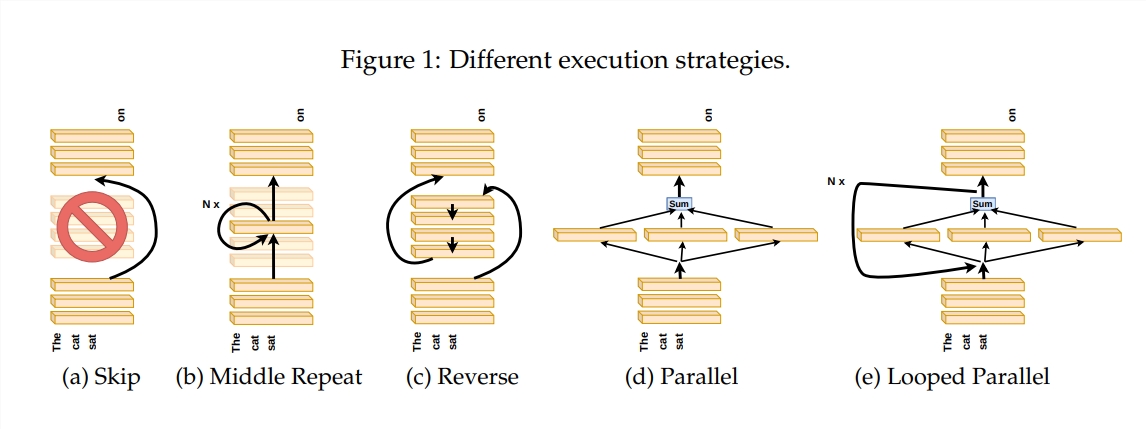

To explore the working principles of Transformer layers, the authors designed a series of experiments, including skipping certain layers, changing the order of layers, or running layers in parallel. These experiments are akin to setting different painting rules for the "painters" to see if they can adapt.
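The three kinds of experiment can be illustrated on a toy stack of layers. The following is a minimal sketch, not the authors' code: `make_layer` is a hypothetical stand-in for a real Transformer block (a small residual update on a list of floats), and the parallel variant assumes that the outputs of the selected layers are simply averaged before being passed on.

```python
import math

def make_layer(scale, shift):
    """Hypothetical toy 'painter': a residual update x + f(x), not a real Transformer block."""
    def layer(x):
        return [xi + scale * math.tanh(xi + shift) for xi in x]
    return layer

def run_sequential(layers, x):
    """Normal execution: each layer edits the canvas left by the previous one."""
    for layer in layers:
        x = layer(x)
    return x

def run_skip(layers, x, start, end):
    """Skip layers[start:end]; the output of layer start-1 feeds layer end directly."""
    return run_sequential(layers[:start] + layers[end:], x)

def run_reversed(layers, x, start, end):
    """Run layers[start:end] in reverse order, leaving the outer layers in place."""
    middle = layers[start:end][::-1]
    return run_sequential(layers[:start] + middle + layers[end:], x)

def run_parallel(layers, x, start, end):
    """Feed the same input to every layer in layers[start:end] and average the outputs (assumed merge rule)."""
    x = run_sequential(layers[:start], x)
    outputs = [layer(x) for layer in layers[start:end]]
    x = [sum(values) / len(outputs) for values in zip(*outputs)]
    return run_sequential(layers[end:], x)

# Six toy layers and a three-element "canvas"; all numbers are arbitrary.
layers = [make_layer(0.1 * (i + 1), 0.05 * i) for i in range(6)]
canvas = [0.5, -1.0, 2.0]

baseline = run_sequential(layers, canvas)
skipped = run_skip(layers, canvas, 2, 4)
reordered = run_reversed(layers, canvas, 1, 5)
parallel = run_parallel(layers, canvas, 1, 5)
```

In a real experiment, each variant would be scored on a benchmark and compared with `baseline`. In this sketch, the four results can only be compared as vectors.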

In the metaphor of a "painter's assembly line," the input is a canvas, and passing through the intermediate layers is like the canvas moving along the line. Each "painter," that is, each layer of the Transformer, modifies the painting according to its own expertise. This analogy helps explain why the layers might tolerate being skipped, reordered, or run in parallel.

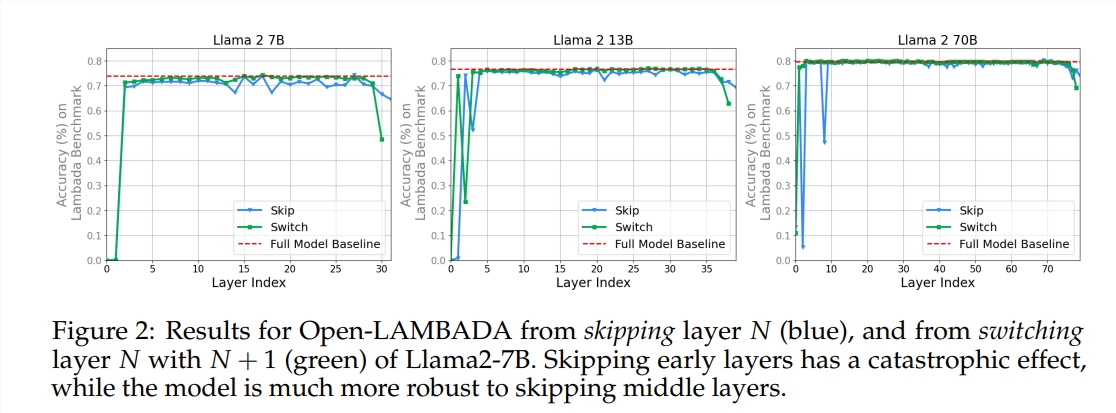

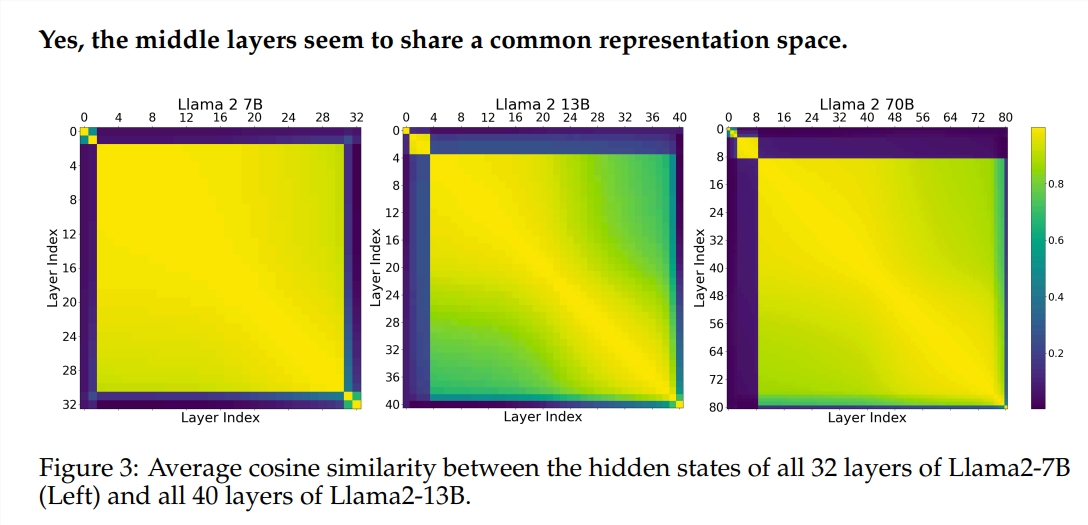

The experiments used two pre-trained models: Llama2-7B, a decoder-only model, and BERT, an encoder-only model. The study found that the "painters" in the intermediate layers seem to share a common "paintbox," that is, a representation space, which differs from the one used by the first and last layers. Skipping some of the intermediate "painters" had little impact on the overall painting, indicating that not every "painter" is essential.
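One common way to check whether layers share a representation space is to compare their hidden states with cosine similarity. The sketch below assumes that approach. The `hidden_states` values are invented purely for illustration and are not measurements from Llama2-7B or BERT.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two activation vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def similarity_matrix(hidden_states):
    """Pairwise cosine similarity between the hidden states of every pair of layers."""
    return [[cosine_similarity(h_i, h_j) for h_j in hidden_states]
            for h_i in hidden_states]

# Hypothetical per-layer activations for one token (made-up numbers).
hidden_states = [
    [1.0, 0.0, 0.0],  # first layer
    [0.6, 0.8, 0.1],  # intermediate layer
    [0.5, 0.8, 0.2],  # intermediate layer
    [0.5, 0.9, 0.2],  # intermediate layer
    [0.0, 0.1, 1.0],  # last layer
]

sim = similarity_matrix(hidden_states)
for row in sim:
    print(" ".join(f"{value:.2f}" for value in row))
```

With real activations, a block of high values among the intermediate rows and columns, and lower values against the first and last layers, would be the pattern that suggests a shared "paintbox."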

Although the intermediate "painters" use the same "paintbox," each depicts different patterns on the canvas with its own skills. Making every intermediate layer reuse the technique of a single "painter," that is, the weights of one layer, diminishes the quality of the painting.
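The difference between skipping layers and repeating one layer can be made concrete by writing each variant as an execution plan, meaning a list of layer indices to run in order. This is a sketch under assumptions: the helper names `skip_plan`, `repeat_plan`, and `run_plan` are hypothetical, and choosing the center of the range as the repeated layer is an illustrative choice.

```python
def skip_plan(n_layers, start, end):
    """Indices of the layers to run when layers start..end-1 are skipped."""
    return list(range(start)) + list(range(end, n_layers))

def repeat_plan(n_layers, start, end, source):
    """Same depth as the original, but every layer in start..end-1 is replaced by layer `source`."""
    return list(range(start)) + [source] * (end - start) + list(range(end, n_layers))

def run_plan(layers, plan, x):
    """Apply the layers named by `plan`, in order, to the input x."""
    for index in plan:
        x = layers[index](x)
    return x

n_layers, start, end = 8, 2, 6
center = (start + end) // 2  # illustrative choice of the layer to repeat

print(skip_plan(n_layers, start, end))            # [0, 1, 6, 7]
print(repeat_plan(n_layers, start, end, center))  # [0, 1, 4, 4, 4, 4, 6, 7]
```

Passing each plan to `run_plan` with the same list of layers allows the two variants to be evaluated on the same inputs and compared with the unmodified order `list(range(n_layers))`.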

For tasks requiring rigorous logic, such as mathematics and reasoning, the order of "painting" is particularly important. For tasks relying on semantic understanding, the impact of order is relatively minor.

In summary, the intermediate layers of a Transformer are consistent with one another but not redundant. Some of them can be skipped without catastrophically affecting model performance, yet they perform different functions even though they share the same representation space. Changing the execution order of layers lowers performance, and the order matters more for mathematical and reasoning tasks than for semantic tasks.

Many researchers are exploring ways to optimize Transformer models, including pruning and parameter reduction. These efforts offer useful experience and insight into how Transformer models work.

Paper link: https://arxiv.org/pdf/2407.09298v1