In a digital world driven by data and algorithms, progress in artificial intelligence depends on an unglamorous but crucial element: the checkpoint. Imagine training a large language model that can understand your questions and converse fluently. Such a model is smart but voracious, and "feeding" it takes vast computational resources. A sudden power outage or hardware failure during training could throw away a large amount of work. Checkpoints act like a "time machine": they let training revert to the last safe state and pick up where it left off.

However, this "time machine" itself requires careful design. Researchers from ByteDance and the University of Hong Kong have introduced a new checkpoint system, ByteCheckpoint, in their paper "ByteCheckpoint: A Unified Checkpointing System for LLM Development." The system is more than a simple backup tool: it is designed to make large language model training significantly more efficient.

First, we need to understand the challenges that large language models (LLMs) face. These models are "large" because they have to process and retain vast amounts of information, which makes training expensive, resource-hungry, and vulnerable to faults. A single failure can waste a long stretch of training.

A checkpoint is a snapshot of the model: the checkpoint system regularly saves the training state so that, when something goes wrong, training can quickly recover to the most recent saved state and lose little work. However, existing checkpoint systems often become inefficient with large models because saving and loading run into I/O bottlenecks.
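The save-and-resume idea can be illustrated with a minimal sketch. It is a toy, not ByteCheckpoint's implementation: the "model" is a single number, and the names `save_checkpoint`, `load_checkpoint`, and `train` are hypothetical helpers chosen for this example.

```python
import json
import os
import tempfile


def save_checkpoint(path, state):
    """Write the state to a temp file, then atomically rename it into place."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory)
    with os.fdopen(fd, "w") as f:
        json.dump(state, f)
    os.replace(tmp_path, path)  # a crash mid-write never corrupts the old file


def load_checkpoint(path):
    """Return the saved state, or a fresh one if no checkpoint exists yet."""
    if not os.path.exists(path):
        return {"step": 0, "weight": 0.0}
    with open(path) as f:
        return json.load(f)


def train(path, total_steps, save_every, crash_at=None):
    """Toy loop: 'training' just adds 0.5 to a single weight each step."""
    state = load_checkpoint(path)
    while state["step"] < total_steps:
        if crash_at is not None and state["step"] == crash_at:
            raise RuntimeError("simulated hardware failure")
        state["weight"] += 0.5
        state["step"] += 1
        if state["step"] % save_every == 0:
            save_checkpoint(path, state)
    return state
```

If `train` is called with `save_every=3` and fails at step 7, the next call resumes from the snapshot taken at step 6, so only one step of work is repeated. A shorter save interval loses less work but spends more time on I/O, which is the trade-off that makes checkpoint speed matter.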

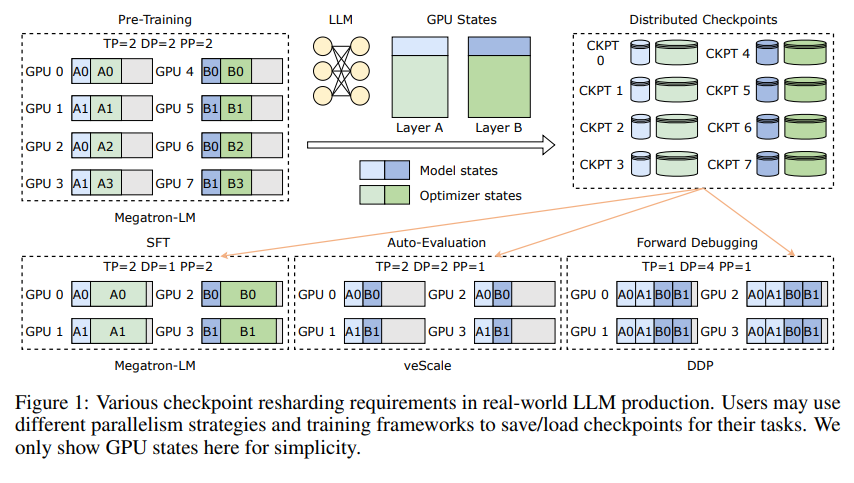

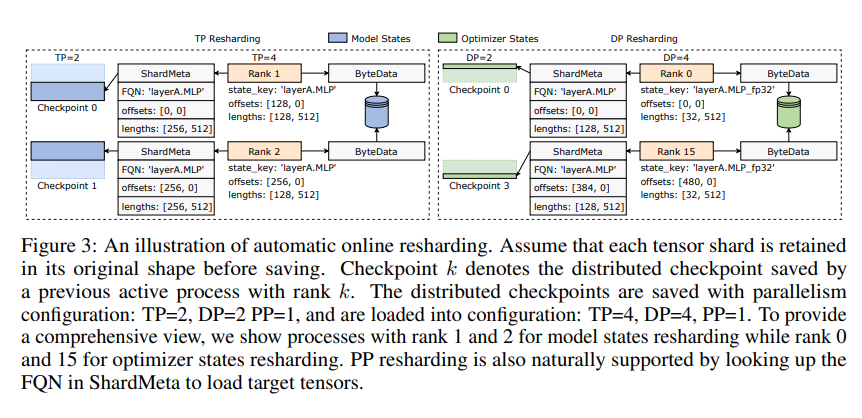

ByteCheckpoint's main innovation is a storage architecture that separates data from metadata, so that checkpoints are no longer tied to a particular parallel configuration or training framework. It also supports automatic online checkpoint resharding: checkpoints are adjusted dynamically to fit a different parallel configuration or hardware environment, without interrupting training.
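To see why keeping metadata apart from data helps with resharding, consider a minimal sketch. It assumes a one-dimensional "tensor" held as a Python list and an in-memory dictionary standing in for storage; `save_sharded` and `load_resharded` are hypothetical names, and the paper's actual file format and API are not reproduced here.

```python
def save_sharded(full, num_ranks):
    """Split a 1-D 'tensor' across ranks; return (data_files, metadata)."""
    n = len(full)
    data_files, metadata = {}, []
    for rank in range(num_ranks):
        start = rank * n // num_ranks
        end = (rank + 1) * n // num_ranks
        name = f"rank{rank}.bin"
        data_files[name] = full[start:end]
        # Metadata records where each stored piece sits in the global tensor.
        metadata.append({"file": name, "global_start": start, "length": end - start})
    return data_files, metadata


def load_resharded(data_files, metadata, rank, num_ranks, total_len):
    """Load the slice that `rank` owns under a new layout with `num_ranks` ranks."""
    want_start = rank * total_len // num_ranks
    want_end = (rank + 1) * total_len // num_ranks
    out = []
    for entry in metadata:  # entries are stored in increasing global_start order
        lo = max(want_start, entry["global_start"])
        hi = min(want_end, entry["global_start"] + entry["length"])
        if lo < hi:  # this stored piece overlaps the slice we need
            offset = entry["global_start"]
            out.extend(data_files[entry["file"]][lo - offset:hi - offset])
    return out
```

Because the metadata describes every piece in global coordinates, a checkpoint written by four ranks can be read by three: each reader computes the range it needs and pulls only the overlapping parts, with no separate conversion step.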

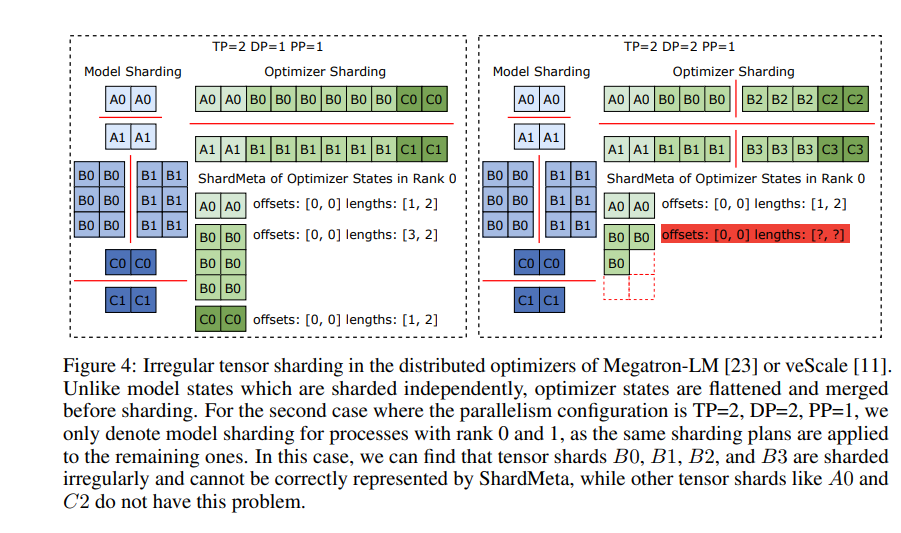

ByteCheckpoint also introduces a key technique: asynchronous tensor merging. It efficiently handles tensors that are split unevenly across GPUs, keeping the model intact and consistent when a checkpoint is resharded.
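A rough illustration of the problem and the idea follows. It is a sketch under strong assumptions, not the paper's algorithm: tensors are nested Python lists, background threads stand in for GPU communication, and `merge_flat_shards` and `merge_all_async` are hypothetical names. In the example, the 2x3 tensor `w` is flattened and cut unevenly, so the second shard `[3, 4]` straddles two rows and cannot be described as a rectangular block.

```python
from concurrent.futures import ThreadPoolExecutor


def merge_flat_shards(shards, shape):
    """Concatenate flat shards in rank order and rebuild the row-major 2-D tensor."""
    rows, cols = shape
    flat = [x for shard in shards for x in shard]
    if len(flat) != rows * cols:
        raise ValueError("shards do not cover the tensor")
    return [flat[r * cols:(r + 1) * cols] for r in range(rows)]


def merge_all_async(sharded_tensors, executor):
    """Start one background merge per tensor; return futures immediately."""
    return {
        name: executor.submit(merge_flat_shards, shards, shape)
        for name, (shards, shape) in sharded_tensors.items()
    }


# Each entry: (list of per-rank flat shards, full tensor shape).
sharded = {
    "w": ([[1, 2], [3, 4], [5], [6]], (2, 3)),
    "b": ([[7], [8, 9, 10]], (2, 2)),
}

with ThreadPoolExecutor(max_workers=2) as pool:
    pending = merge_all_async(sharded, pool)
    # The caller is free to do other work here while the merges run.
    merged = {name: future.result() for name, future in pending.items()}
```

The point of the asynchronous form is that merging does not block the caller: results are collected only when they are needed.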

To speed up checkpoint saving and loading, ByteCheckpoint combines several I/O optimizations: fine-grained save/load pipelines, Ping-Pong memory pools, workload-balanced saving, and zero-redundancy loading. Together they significantly reduce the time training spends waiting on checkpoints.
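One of these optimizations, workload-balanced saving, can be sketched with a simple greedy heuristic. This is an assumption made for illustration, not necessarily the strategy the paper uses: when several ranks hold identical copies of the same tensors, each tensor is assigned to whichever rank currently has the least data to write. The function name `balance_saving` and the tensor names and sizes are hypothetical.

```python
import heapq


def balance_saving(tensor_sizes, num_ranks):
    """Greedy assignment: largest tensor first, always to the least-loaded rank."""
    heap = [(0, rank) for rank in range(num_ranks)]  # (size assigned so far, rank)
    heapq.heapify(heap)
    plan = {rank: [] for rank in range(num_ranks)}
    # Sort by descending size; ties are broken by name to keep the plan deterministic.
    for name, size in sorted(tensor_sizes.items(), key=lambda kv: (-kv[1], kv[0])):
        load, rank = heapq.heappop(heap)
        plan[rank].append(name)
        heapq.heappush(heap, (load + size, rank))
    loads = {rank: sum(tensor_sizes[n] for n in names) for rank, names in plan.items()}
    return plan, loads


sizes = {"embed": 8, "attn": 4, "mlp": 4, "head": 3, "norm": 1}
plan, loads = balance_saving(sizes, num_ranks=2)
```

With these sizes, one rank writes 11 units and the other 9, instead of a single rank writing all 20 while the rest sit idle.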

According to the paper's experiments, ByteCheckpoint improves checkpoint saving and loading speeds by tens to hundreds of times compared with baseline methods, which significantly improves the training efficiency of large language models.

ByteCheckpoint is more than a checkpoint system: it is a capable assistant in large language model training and a step toward more efficient and stable AI training.

Paper link: https://arxiv.org/pdf/2407.20143