Alibaba Cloud recently launched Qwen2-Math, a series of large language models specialized in mathematics that has drawn wide attention in the industry since its debut.

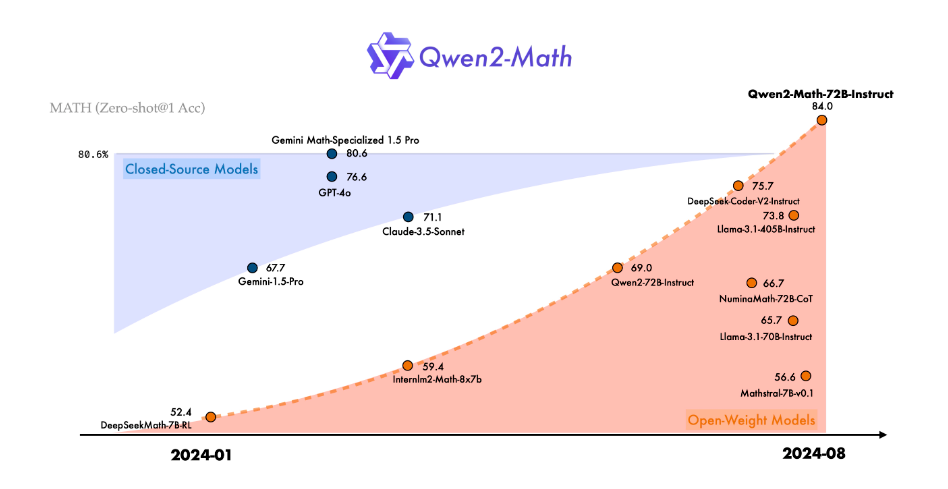

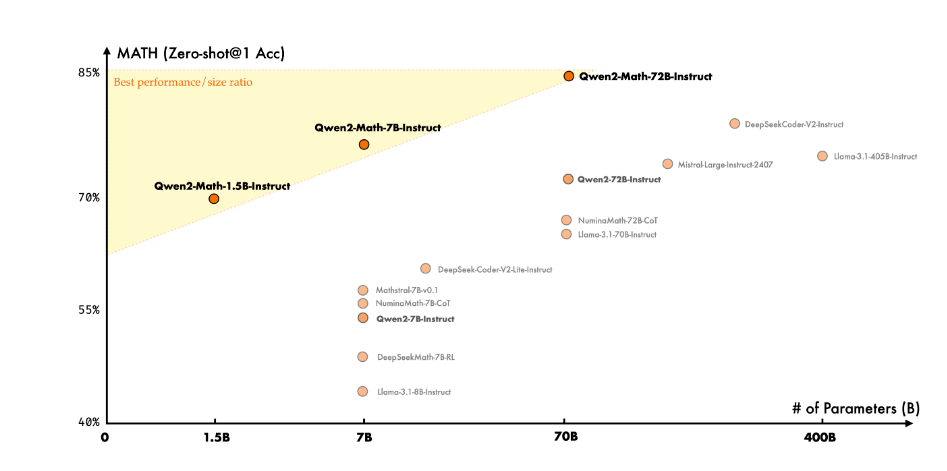

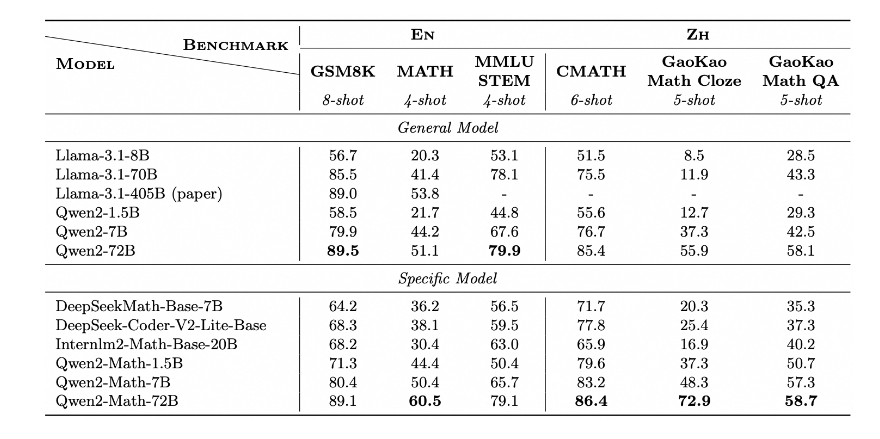

As the latest addition to the Qwen2 series, the Qwen2-Math base models and the Qwen2-Math-Instruct models, each available in 1.5B, 7B, and 72B sizes, have shown strong mathematical problem-solving ability. According to the reported results, these models not only surpass existing open-source models on multiple mathematical benchmarks but also outperform, in certain respects, well-known models such as the closed-source GPT-4o, Claude-3.5-Sonnet, and Gemini-1.5-Pro and the much larger open-weight Llama-3.1-405B, making the series a "dark horse" in AI mathematics.

The success of Qwen2-Math is no accident. The Alibaba Cloud team has spent the past year working to improve the reasoning capabilities of large language models on arithmetic and mathematical problems. The series builds on Qwen2-1.5B/7B/72B, which the development team further pre-trained on a carefully designed mathematical corpus. This corpus includes large-scale, high-quality mathematical web text, professional books, code examples, and a large collection of exam questions, as well as mathematical pre-training data generated by Qwen2 itself.

Particularly noteworthy is the training of the Qwen2-Math-Instruct models. The team first trained a mathematics-specific reward model based on Qwen2-Math-72B. They then combined its dense reward signal with a binary signal indicating whether the model's answer was correct, and used the combined signal as supervision in two places: to construct SFT (Supervised Fine-Tuning) data through rejection sampling, and as the reward for the subsequent reinforcement learning stage, which uses Group Relative Policy Optimization (GRPO). According to the team, this training method significantly improved the models' mathematical problem-solving abilities.
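The training signal described above can be illustrated with a small sketch. It is not the team's implementation: the article does not say how the dense and binary signals are combined, so the additive `combined_reward` below, the `Candidate` type, and the assumption that reward-model scores lie in [0, 1] are all hypothetical. The last function shows the group-relative normalization that gives GRPO its name, where each sampled response is scored against the other responses to the same problem.

```python
from dataclasses import dataclass
from statistics import mean, pstdev


@dataclass
class Candidate:
    text: str          # one sampled solution to a problem
    rm_score: float    # dense reward-model score (assumed here to lie in [0, 1])
    is_correct: bool   # binary signal: does the final answer match the reference?


def combined_reward(c: Candidate, correctness_weight: float = 1.0) -> float:
    """Hypothetical combination: dense score plus a bonus for a correct answer."""
    return c.rm_score + correctness_weight * float(c.is_correct)


def rejection_sample(candidates, keep=1):
    """Discard incorrect samples, then keep the top `keep` by combined reward."""
    correct = [c for c in candidates if c.is_correct]
    return sorted(correct, key=combined_reward, reverse=True)[:keep]


def group_relative_advantages(rewards, eps=1e-8):
    """GRPO-style advantage: center and scale each reward within its group."""
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std; implementations differ on this detail
    return [(r - mu) / (sigma + eps) for r in rewards]


# Made-up samples for a single problem, for illustration only.
samples = [
    Candidate("solution A", rm_score=0.9, is_correct=False),
    Candidate("solution B", rm_score=0.4, is_correct=True),
    Candidate("solution C", rm_score=0.7, is_correct=True),
]
sft_data = rejection_sample(samples, keep=1)  # keeps "solution C"
advantages = group_relative_advantages([combined_reward(c) for c in samples])
```

In this toy group, the fluent but incorrect "solution A" is rejected despite its high reward-model score, which is the kind of case the binary correctness signal is meant to catch.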

In competition-style evaluations, Qwen2-Math-Instruct has also performed strongly. On both the 2024 AIME (American Invitational Mathematics Examination) and the 2023 AMC (American Mathematics Competitions), the model did well under several decoding settings, including greedy decoding, majority voting over multiple samples, and selecting the best of multiple samples with a reward model.
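Majority voting over several sampled solutions, and the related idea of picking the sample that a reward model scores highest, can be sketched as follows. This is a minimal illustration, not the evaluation code used for Qwen2-Math: the function names, the sampled answers, and the scores are all made up, and answers are assumed to be already extracted and normalized to strings.

```python
from collections import Counter


def majority_vote(answers):
    """Return the most frequent final answer among the sampled solutions."""
    return Counter(answers).most_common(1)[0][0]


def best_of_n(answers, rm_scores):
    """Return the answer from the sample with the highest reward-model score."""
    best_index = max(range(len(answers)), key=lambda i: rm_scores[i])
    return answers[best_index]


# Made-up final answers from five samples of one problem, with made-up scores.
sampled_answers = ["204", "204", "210", "204", "210"]
sampled_scores = [0.2, 0.3, 0.9, 0.4, 0.5]

voted = majority_vote(sampled_answers)               # "204" (3 of 5 samples)
selected = best_of_n(sampled_answers, sampled_scores)  # "210" (score 0.9)
```

The two strategies can disagree, as they do here: voting trusts the consensus among samples, while reward-model selection trusts a single highly scored sample. Greedy decoding, by contrast, uses only one deterministic sample.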

More excitingly, Qwen2-Math has also demonstrated commendable strength in solving some International Mathematical Olympiad (IMO) level problems. Through the analysis of a series of test cases, researchers found that Qwen2-Math not only handles simple math competition questions with ease but also provides convincing solution approaches when faced with complex problems.

However, the Alibaba Cloud team is not stopping here. They revealed that the current Qwen2-Math series only supports English, but they are actively developing bilingual models that support both English and Chinese, with plans to launch multi-language versions in the near future. Additionally, the team is continuously optimizing the model to further enhance its ability to solve more complex and challenging mathematical problems.

The emergence of Qwen2-Math opens up new possibilities for AI applications in mathematics. It could benefit education by helping students better understand and master mathematical concepts, and it may also play a significant role in fields that rely on complex mathematical calculation, such as research and engineering.

Project page: https://qwenlm.github.io/blog/qwen2-math/

Model download: https://huggingface.co/Qwen