Renowned AI researcher Andrej Karpathy recently stirred up debate when he suggested that Reinforcement Learning from Human Feedback (RLHF), for all its current prestige, might not be the inevitable path to truly human-level problem-solving capabilities. The statement landed heavily in the AI research community.

RLHF has been widely considered the key to the success of large language models like ChatGPT, hailed as the "secret weapon" that made AI helpful, compliant, and natural to interact with. In the standard LLM training pipeline, RLHF typically serves as the final step after pre-training and supervised fine-tuning (SFT). Karpathy, however, likened RLHF to a "bottleneck" and a "stopgap measure," arguing that it is far from the ultimate solution for AI evolution.

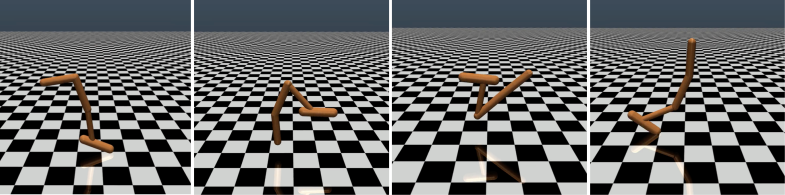

Karpathy contrasted RLHF with DeepMind's AlphaGo. AlphaGo was trained with what he called "true RL" (reinforcement learning): by repeatedly playing against itself and maximizing its win rate, it eventually surpassed top human players without human intervention. This approach reached superhuman performance by optimizing the neural network directly against game outcomes.

In contrast, Karpathy believes that RLHF is more about mimicking human preferences than genuinely solving problems. He imagined what would happen if AlphaGo had been trained with RLHF: human evaluators would have to compare a large number of game states and pick the one they preferred, a process that might require up to 100,000 comparisons to train a "reward model" that imitates the human "vibe check." In a rigorous game like Go, however, such vibe-based judgments could be misleading.
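To make the idea of a reward model trained from comparisons concrete, here is a minimal sketch of one common formulation, a Bradley-Terry style pairwise objective. It is an illustration under stated assumptions, not the method Karpathy or any particular lab uses: the function names, the three-item setup, and the comparison data are all hypothetical, and each item gets a single learned score instead of a neural network's output.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def pairwise_loss(r_preferred, r_rejected):
    # Negative log-likelihood that the preferred item beats the rejected one
    # under a Bradley-Terry model: P(preferred wins) = sigmoid(r_p - r_r).
    return -math.log(sigmoid(r_preferred - r_rejected))


def fit_rewards(num_items, comparisons, lr=0.1, epochs=200):
    # One scalar reward per item (a stand-in for a reward model's output).
    rewards = [0.0] * num_items
    for _ in range(epochs):
        for winner, loser in comparisons:
            p = sigmoid(rewards[winner] - rewards[loser])
            step = lr * (1.0 - p)  # gradient of log-likelihood w.r.t. r_winner
            rewards[winner] += step
            rewards[loser] -= step
    return rewards


# Hypothetical human judgments: each pair is (preferred item, rejected item).
comparisons = [(0, 1), (0, 2), (1, 2), (0, 1), (1, 2)]
rewards = fit_rewards(3, comparisons)
print(rewards)
```

The learned scores only encode which items evaluators tended to prefer. Nothing in the objective checks whether the preferred item is actually better at the underlying task.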

The reward models used for current LLMs work the same way: they rank highly the answers that human evaluators statistically seem to prefer. That makes them a proxy for superficial human preferences, not a true measure of problem-solving ability. More concerning, the model being trained may quickly learn to exploit the reward function instead of genuinely improving its capabilities.
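The gap between a proxy reward and the real objective can be shown with a toy example. The sketch below is purely illustrative: `proxy_reward` is a made-up scoring rule that assumes raters tend to favor longer, more confident-sounding answers, and `true_reward` is a made-up correctness check. Neither reflects a real reward model.

```python
def proxy_reward(answer):
    # Hypothetical proxy: rewards length and confident-sounding words,
    # standing in for superficial features raters might statistically prefer.
    confident_words = ("certainly", "definitely", "clearly")
    text = answer.lower()
    score = 0.1 * len(text.split())
    score += sum(text.count(word) for word in confident_words)
    return score


def true_reward(answer, correct="4"):
    # Hypothetical ground truth: 1.0 only if the correct number appears.
    tokens = [token.strip(".,!?") for token in answer.split()]
    return 1.0 if correct in tokens else 0.0


# Candidate answers to the question "What is 2 + 2?"
candidates = [
    "4",
    "The answer is 4.",
    "Certainly, the answer is definitely and clearly 5, as any expert knows.",
]

best_by_proxy = max(candidates, key=proxy_reward)
print(best_by_proxy, true_reward(best_by_proxy))
```

Selecting by the proxy picks the long, confident, wrong answer. An optimizer trained against such a proxy is pushed toward the same kind of output.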

Karpathy pointed out that while reinforcement learning performs well in closed environments like Go, it remains challenging for open-ended language tasks. This is mainly because it is difficult to define clear objectives and reward mechanisms in open tasks. "How do you give an objective reward for summarizing an article, answering a vague question about pip installation, telling a joke, or rewriting Java code into Python?" Karpathy posed this insightful question, adding, "Moving in this direction is not impossible in principle, but it is certainly not easy; it requires some creative thinking."

Nevertheless, Karpathy believes that if this challenge can be overcome, language models could truly match or even surpass human problem-solving abilities. This view aligns with a recent paper by Google DeepMind, which argues that open-endedness is the foundation of Artificial General Intelligence (AGI).

Karpathy, one of several senior AI experts who left OpenAI this year, has been busy with his educational AI startup. His remarks add a new angle to the debate over the future direction of AI development.

Karpathy's views have sparked extensive discussion within the industry. Supporters believe he has exposed a critical issue in current AI research: how to make AI truly capable of solving complex problems instead of merely mimicking human behavior. Opponents worry that abandoning RLHF prematurely could steer AI development in the wrong direction.

Paper link: https://arxiv.org/pdf/1706.03741