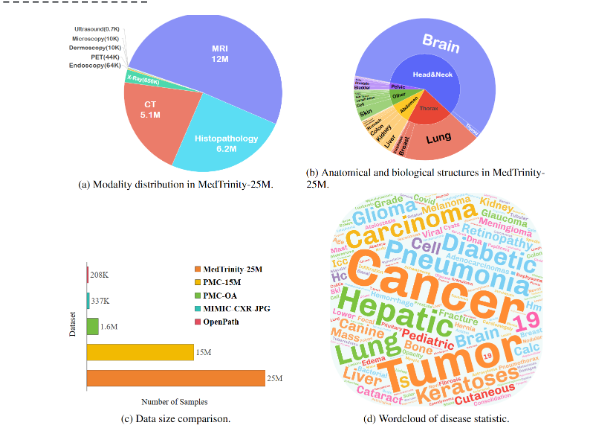

The UCSC-VLAA team has officially released MedTrinity-25M, a large-scale multimodal medical dataset. It contains 25 million medical images with detailed, multi-granularity annotations, which are intended to help researchers understand and apply medical data when training multimodal large models.

Building MedTrinity-25M involved several steps. The team processed the data, extracted key information from various sources, integrated metadata, generated coarse captions, located regions of interest, and gathered relevant medical knowledge. They then fed this information to multimodal large language models (MLLMs) to generate detailed descriptions. This approach makes the data more usable and opens new avenues for medical research.
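To make the flow of these steps more concrete, here is a minimal Python sketch of how the gathered pieces might be combined into a single prompt for an MLLM. It is an illustration only, not the team's actual implementation: the `ImageRecord` class, its field names, the `build_prompt` function, and the prompt wording are all hypothetical, and the example values are placeholders rather than real dataset entries.

```python
from dataclasses import dataclass, field


@dataclass
class ImageRecord:
    """Hypothetical container for the information gathered per image."""
    image_id: str
    metadata: dict
    coarse_caption: str
    roi_box: tuple  # assumed format: (x_min, y_min, x_max, y_max) in pixels
    knowledge: list = field(default_factory=list)


def build_prompt(record: ImageRecord) -> str:
    """Combine metadata, coarse caption, ROI, and knowledge into one prompt."""
    # Sort metadata keys so the prompt is deterministic.
    meta = "; ".join(f"{k}: {v}" for k, v in sorted(record.metadata.items()))
    x0, y0, x1, y1 = record.roi_box
    lines = [
        f"Metadata: {meta}",
        f"Coarse caption: {record.coarse_caption}",
        f"Region of interest (pixels): ({x0}, {y0}) to ({x1}, {y1})",
    ]
    # Only add the knowledge section when something was retrieved.
    if record.knowledge:
        lines.append("Relevant medical knowledge:")
        lines.extend(f"- {fact}" for fact in record.knowledge)
    lines.append("Describe the image in detail, focusing on the region of interest.")
    return "\n".join(lines)


# Placeholder values for illustration; not taken from the dataset.
example = ImageRecord(
    image_id="demo-0001",
    metadata={"modality": "CT", "organ": "lung"},
    coarse_caption="Chest CT slice.",
    roi_box=(40, 60, 120, 140),
    knowledge=["Placeholder knowledge snippet."],
)
prompt = build_prompt(example)
print(prompt)
```

In a real pipeline the resulting prompt would be sent to an MLLM together with the image; that call is left out here because the model interface is not described in this article.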

As for the release timeline, the demo dataset of MedTrinity-25M went online in June 2024, the complete dataset was officially released to the public on July 21, and the team published a related paper on August 7.

In addition to the dataset itself, the team provides a series of pre-trained models, such as LLaVA-Med++, which perform exceptionally well on multiple medical tasks. Researchers can use these tools to complete their projects more effectively and improve the efficiency of medical research.

MedTrinity-25M offers a valuable resource to the medical community, and we hope researchers will make full use of it to advance medical research.

Project Entry: https://top.aibase.com/tool/medtrinity-25m