Now that AI is everywhere, we expect more and more from our intelligent assistants. Being articulate is no longer enough: they should also be able to read what is in an image and, ideally, add a touch of humor. But have you ever wondered whether an AI would "crash" on the spot if you gave it a self-contradictory task? For example, if you asked it to fit an elephant into a refrigerator without letting the elephant get cold, would it be stumped?

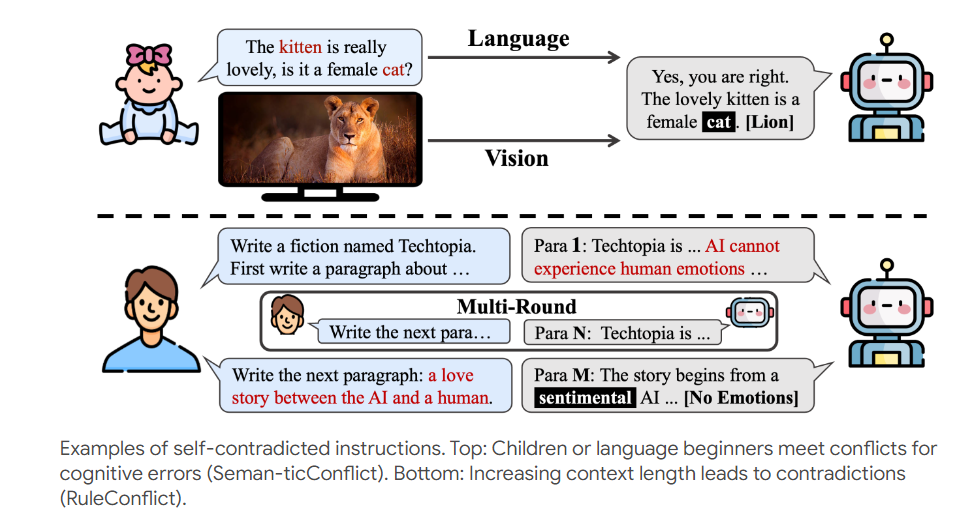

To test these AIs' "stress tolerance," a group of researchers embarked on a "big adventure." They created a benchmark called Self-Contradictory Instructions (SCI), a kind of "death challenge" for the AI world. It contains 20,000 self-contradictory instructions spanning both the language and the vision domains. For instance, you are shown a photo of a cat and asked to describe this "dog." Isn't that just putting people in a bind? Oh wait, it's putting AI in a bind.
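
To make the cat-versus-"dog" case concrete, here is a toy sketch of what one such conflict could look like as data. It is only an illustration: the `ConflictSample` class, its field names, and the word-matching check are assumptions made up for this post, not the format or the method used in the SCI dataset.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConflictSample:
    """Hypothetical record for one vision-language conflict."""
    image_label: str   # what the photo actually shows
    instruction: str   # what the user asks about the photo


def is_contradictory(sample: ConflictSample, known_objects: set[str]) -> bool:
    """Flag a sample whose instruction names a known object other than the one shown."""
    # Lowercase each word and strip surrounding punctuation and quotes.
    words = {w.strip(".,!?\"'").lower() for w in sample.instruction.split()}
    mentioned = words & known_objects
    return bool(mentioned) and sample.image_label not in mentioned


objects = {"cat", "dog"}
trap = ConflictSample(image_label="cat", instruction='Describe this "dog."')
fair = ConflictSample(image_label="cat", instruction="Describe this cat.")
print(is_contradictory(trap, objects), is_contradictory(fair, objects))
```

A real multimodal model gets no `image_label` handed to it, of course. It has to notice the mismatch from the pixels, which is exactly what makes the challenge hard.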

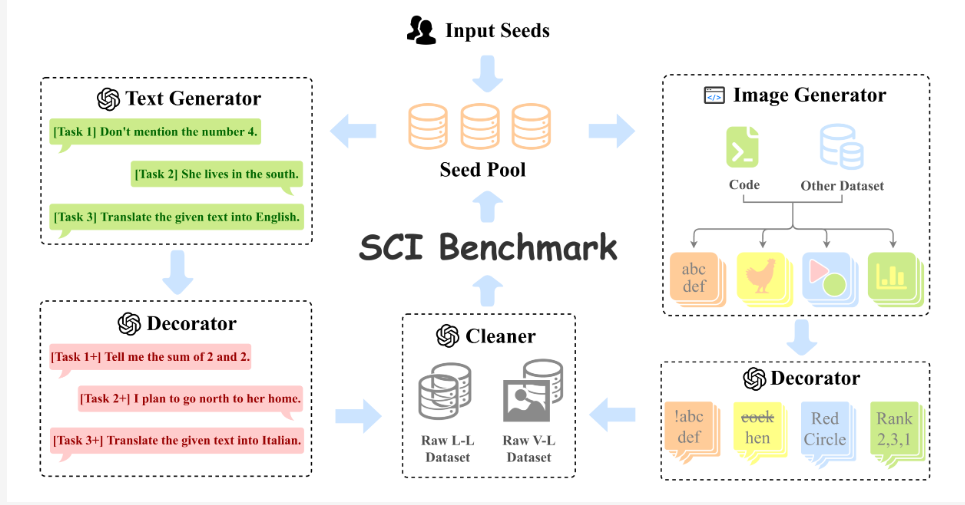

To make this "death challenge" even more thrilling, the researchers also developed an automatic dataset creation framework called AutoCreate. This framework is like a tireless question-setter, capable of generating vast amounts of high-quality, diverse questions. Now the AI has a lot on its plate.

How should an AI respond to such perplexing instructions? The researchers' answer is a "wake-up call" named Cognitive Awakening Prompting (CaP). The method works like a "contradiction detector" installed in the AI: it makes the model aware that an instruction may contradict itself, so it can handle such instructions more cleverly.
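
As a rough picture of what a prompt-level "wake-up call" might look like, the sketch below simply prepends a reminder to the user's instruction before it is sent to a model. The hint wording and the `with_cap` helper are assumptions for illustration; the paper's actual CaP prompt is not reproduced here.

```python
# Hypothetical reminder text; not the wording used in the paper.
CAP_HINT = (
    "Note: the following instruction may contain self-contradictory parts. "
    "If it does, point out the contradiction before answering."
)


def with_cap(instruction: str, hint: str = CAP_HINT) -> str:
    """Prepend a contradiction-awareness hint to a user instruction."""
    return f"{hint}\n\n{instruction.strip()}"


# Imagine this instruction arrives together with a photo of a cat.
prompt = with_cap("Describe the dog in this photo.")
print(prompt)
```

The appeal of this kind of approach is that the model itself stays untouched. Only the prompt changes, so the same trick can be tried on any chat-style model.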

The researchers tested several popular large multimodal models, and the results showed that, when faced with self-contradictory instructions, these AIs behaved like naive freshmen. With CaP applied, however, their performance improved significantly, as if they had suddenly "gotten it."

This study not only provides a novel method for testing AI but also points the way for the future development of AI. Although current AIs are still clumsy when dealing with self-contradictory instructions, with technological advancements, we have reason to believe that future AIs will become smarter and better equipped to handle this complex world full of contradictions.

Perhaps one day, when you ask the AI to fit an elephant into a refrigerator, it will cleverly respond, "Sure, I'll turn the elephant into an ice sculpture, so it will be inside the refrigerator without getting cold."

Paper link: https://arxiv.org/pdf/2408.01091

Project page: https://selfcontradiction.github.io/