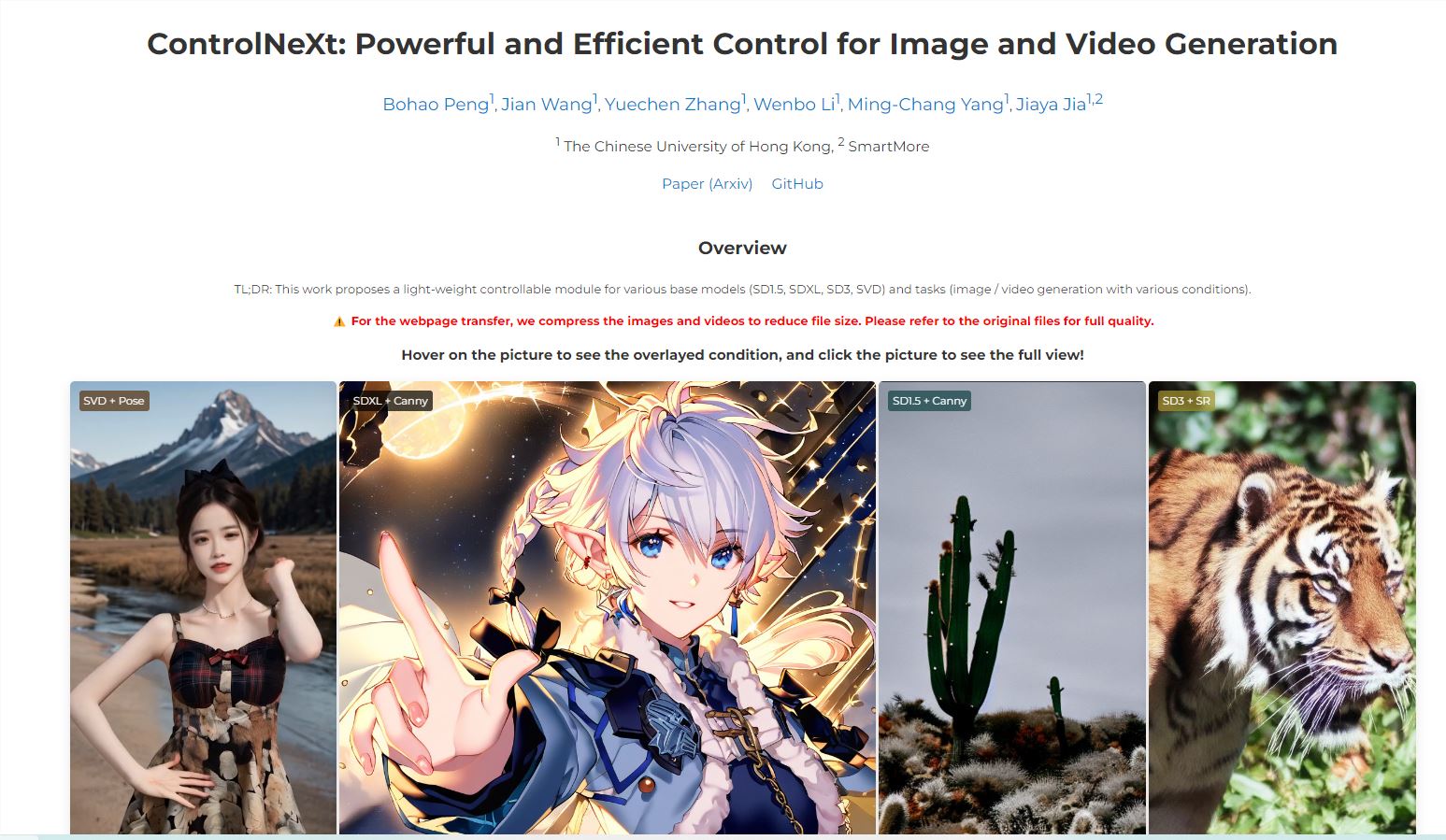

ControlNeXt, the latest release from Professor Jiaya Jia's team at CUHK, is nothing short of a "weight-loss miracle" in the AI world! This open-source tool for guiding image and video generation is compact and plug-and-play. It works with popular Stable Diffusion models such as SDXL and SD1.5, which makes it much simpler to use.

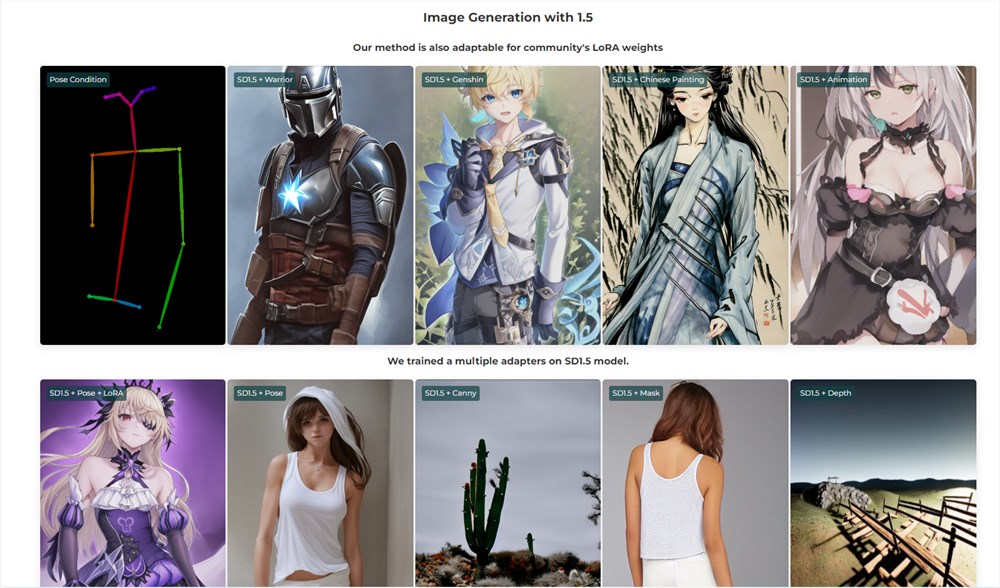

ControlNeXt supports a variety of control types, including edge guidance, pose control, masks, and depth control. It can even make Iron Man perform a graceful dance with movements precise down to the fingers, which shows how fine-grained its control is.

The "weight-loss secret" of ControlNeXt is that it removes ControlNet's "gluttonous" control branch, a trainable copy of the base model's encoder. In its place is a "light meal": a lightweight module built from a few ResNet blocks. This module is only about one-tenth the size of the original branch, yet it still extracts features from a wide range of control conditions effectively.

ControlNeXt is also a "fast learner." It can pick up a new control condition in about 400 training steps, while ControlNet needs several thousand. It is lighter at inference time too, adding only 10.4% latency to generation compared with 41.9% for ControlNet.
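The following back-of-the-envelope calculation compares the two overhead figures. It assumes a hypothetical base model that takes 1.0 time unit per generation and applies the quoted relative overheads to it. Real timings depend on hardware, resolution, and sampler settings.

```python
# Hypothetical baseline: the base model alone takes 1.0 time unit per generation.
BASE_LATENCY = 1.0

# Relative latency overheads quoted in the article.
CONTROLNEXT_OVERHEAD = 0.104
CONTROLNET_OVERHEAD = 0.419


def total_latency(base: float, overhead: float) -> float:
    """Latency of the base model plus a relative control-module overhead."""
    return base * (1.0 + overhead)


controlnext = total_latency(BASE_LATENCY, CONTROLNEXT_OVERHEAD)
controlnet = total_latency(BASE_LATENCY, CONTROLNET_OVERHEAD)

# Fraction of generation time saved by using ControlNeXt instead of ControlNet.
saving = 1.0 - controlnext / controlnet

# prints "ControlNeXt: 1.104, ControlNet: 1.419, saving: 22.2%"
print(f"ControlNeXt: {controlnext:.3f}, ControlNet: {controlnet:.3f}, saving: {saving:.1%}")
```

Under these assumptions, ControlNeXt generates an image in roughly 22% less time than ControlNet on the same base model.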

Another "signature move" of ControlNeXt is cross normalization. It works like a "networking party" for features: it aligns the data distribution of the control features with that of the main branch. This makes training less sensitive to parameter initialization and lets the control conditions take effect early in training.
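A minimal sketch can illustrate the idea of aligning distributions. It assumes that cross normalization first standardizes the control features with their own mean and standard deviation. It then rescales them to the main branch's mean and standard deviation, and only after that are the two added together. The function names, the flat-list representation of a feature map, the additive fusion, and the `scale` parameter are all hypothetical simplifications, not the project's actual code.

```python
from statistics import fmean, pstdev


def cross_normalize(control, main, eps=1e-12):
    """Map control features onto the main branch's mean and standard deviation.

    Assumption: each feature map is flattened into a plain list of floats.
    """
    mu_c, sd_c = fmean(control), pstdev(control)
    mu_m, sd_m = fmean(main), pstdev(main)
    # Standardize with the control statistics, then rescale with the main-branch ones.
    return [(x - mu_c) / (sd_c + eps) * sd_m + mu_m for x in control]


def inject_control(main, control, scale=1.0):
    """Hypothetical additive fusion of aligned control features into the main branch."""
    aligned = cross_normalize(control, main)
    return [m + scale * c for m, c in zip(main, aligned)]


# Toy example: main-branch activations are small, raw control features are large.
main_features = [0.1, -0.2, 0.05, 0.3]
control_features = [10.0, 20.0, 30.0, 40.0]

aligned = cross_normalize(control_features, main_features)
fused = inject_control(main_features, control_features, scale=0.5)

# The aligned control features now share the main branch's mean and spread.
print(round(fmean(aligned), 6), round(fmean(main_features), 6))
print(round(pstdev(aligned), 6), round(pstdev(main_features), 6))
```

Without the alignment step, control values in the tens would overwhelm main-branch activations near zero. After alignment, both sides share the same statistics, so the control signal can contribute from the first training steps without dominating the main branch.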

ControlNeXt is like a Transformers robot of the AI world: compact and flexible, yet powerful. It can make anime-style characters follow control lines precisely, and it can also bring together characters from different worlds, from anime to photorealistic, each with its own distinctive style. With a tool like this, we can expect to see even more striking AI artwork soon!

Project Homepage: https://pbihao.github.io/projects/controlnext/index.html