Cosine, an AI startup based in San Francisco, has introduced Genie, an AI model designed specifically to assist software developers. According to the company, Genie outperforms its competitors in benchmark tests.

Cosine collaborated with OpenAI to train a variant of GPT-4o using high-quality data, achieving remarkable benchmark test results. The company states that the key to Genie's success lies in its ability to "mimic human reasoning," which may extend beyond the realm of software development.

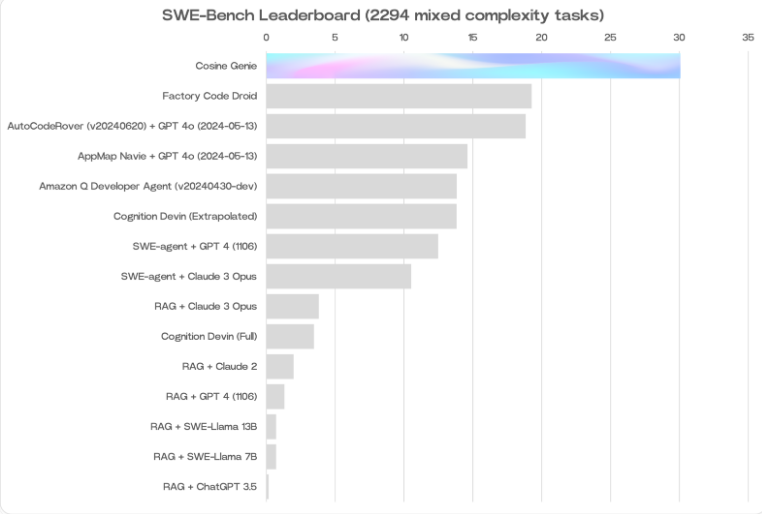

Genie Leads in the SWE Domain

Alistair Pullen, co-founder and CEO of Cosine, revealed that Genie scored 30% on the SWE-Bench test, the highest score ever achieved by an AI model in this field. This score surpasses other language models focused on coding, such as Amazon's model (19%) and Cognition's Devin (13.8% in certain SWE-Bench tests).

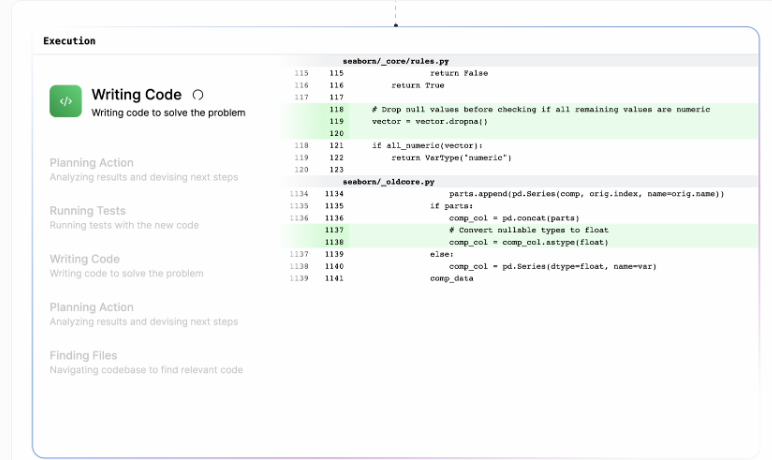

Genie's architecture is designed to mimic the cognitive processes of human developers, enabling it to autonomously or collaboratively fix errors, develop new features, refactor code, and perform various programming tasks.

Self-Improvement Through Synthetic Data

Genie was developed through a proprietary process that involved training and fine-tuning a non-public variant of GPT-4o on billions of tokens of high-quality data. With the help of experienced developers, Cosine spent nearly a year curating this dataset, which includes 21% JavaScript and Python, 14% TypeScript and TSX, and 3% other languages (including Java, C++, and Ruby).

Genie's outstanding performance is partly due to its self-improvement training. Initially, the model learned mainly from flawless, working code, so it struggled to handle its own errors. Cosine addressed this issue with synthetic data: whenever Genie's initial solution was incorrect, the model was shown how to improve it by reference to the correct result. With each iteration, Genie's solutions gradually improved and required fewer corrections.
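The loop described above can be illustrated with a toy sketch. This is not Cosine's pipeline, which is proprietary and undisclosed; every name here (`CorrectionExample`, `collect_corrections`, `self_improve`, `toy_fine_tune`) is hypothetical, the "model" is a plain function, and "fine-tuning" is simulated by memorizing at most two corrections per round. It only shows the general shape of the idea: collect the model's failed attempts, pair each with the correct result, train on those pairs, and repeat.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class CorrectionExample:
    """One synthetic training record: a flawed attempt paired with its fix."""
    task: str
    attempt: str
    reference: str


def collect_corrections(
    tasks: List[str],
    propose: Callable[[str], str],
    reference_for: Callable[[str], str],
) -> List[CorrectionExample]:
    """Run the model on each task and keep only the cases it got wrong."""
    examples = []
    for task in tasks:
        attempt = propose(task)
        reference = reference_for(task)
        if attempt != reference:
            examples.append(CorrectionExample(task, attempt, reference))
    return examples


def self_improve(tasks, propose, reference_for, fine_tune, max_rounds=5):
    """Repeat: collect mistakes, train on them, stop once none remain.

    Returns the number of corrections needed in each round.
    """
    corrections_per_round = []
    for _ in range(max_rounds):
        examples = collect_corrections(tasks, propose, reference_for)
        corrections_per_round.append(len(examples))
        if not examples:
            break
        propose = fine_tune(propose, examples)
    return corrections_per_round


def toy_fine_tune(propose, examples, capacity=2):
    """Hypothetical stand-in for fine-tuning: absorb a few corrections."""
    learned = {ex.task: ex.reference for ex in examples[:capacity]}

    def improved(task):
        # Use a learned fix if there is one, else fall back to the old model.
        return learned.get(task, propose(task))

    return improved


tasks = ["t1", "t2", "t3", "t4", "t5"]
reference = {t: f"fixed({t})" for t in tasks}

# The starting "model" gets every task wrong.
history = self_improve(tasks, lambda t: f"buggy({t})", reference.get, toy_fine_tune)
print(history)  # prints [5, 3, 1, 0]
```

The shrinking counts mirror the article's claim that each iteration required fewer corrections. In a real system the equality check would be replaced by something like running a test suite, and the training step by actual fine-tuning.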

Overcoming Technical Limitations

Pullen recognized the potential of large language models to support human software development as early as 2022. However, the technology at the time was not advanced enough to realize the vision for Genie. Context windows were typically limited to about 4,000 tokens, a major bottleneck. Today, models like Gemini 1.5 Pro can process up to 2 million tokens in a single prompt. Although Cosine has not disclosed Genie's specific token capacity, this technical advancement likely provided a solid foundation for Genie's success.