In an era of information overload, we document and share our lives through photos and videos every day. Have you ever wondered what it would take for machines to understand these images and videos the way humans do, and even hold meaningful conversations with us about them?

Alibaba's latest general-purpose multimodal large model, mPLUG-Owl3, shows remarkable efficiency and comprehension: it can "watch" a 2-hour movie and begin answering questions about it in just 4 seconds! It is less a single model than an AI assistant that can see, converse, and reason.

True to a name that evokes a wise, alert owl in glasses, mPLUG-Owl3 excels at understanding long image sequences. Whether it is given a series of photos or a video, it can grasp the content and even follow the storyline.

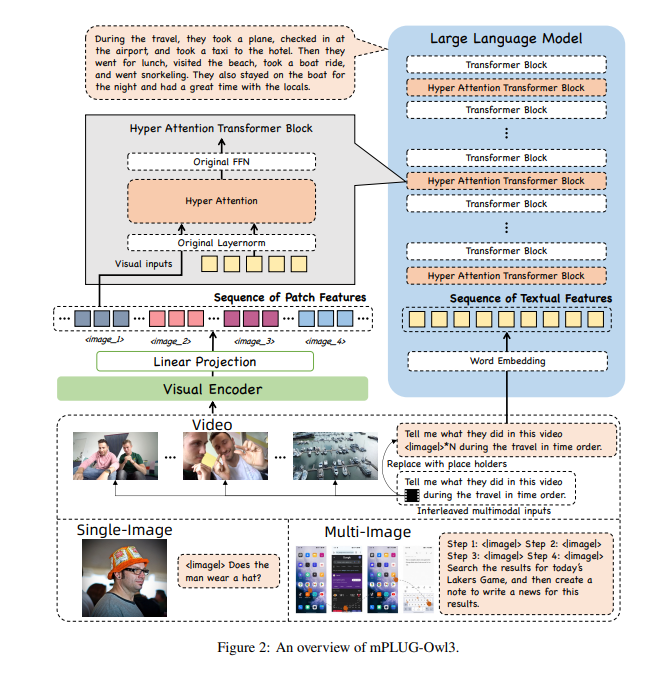

To handle this much information, the researchers equipped mPLUG-Owl3 with a hyper-attention module. Acting as the model's "super brain," it processes visual and linguistic information together, so the model can understand images and their accompanying text at the same time.

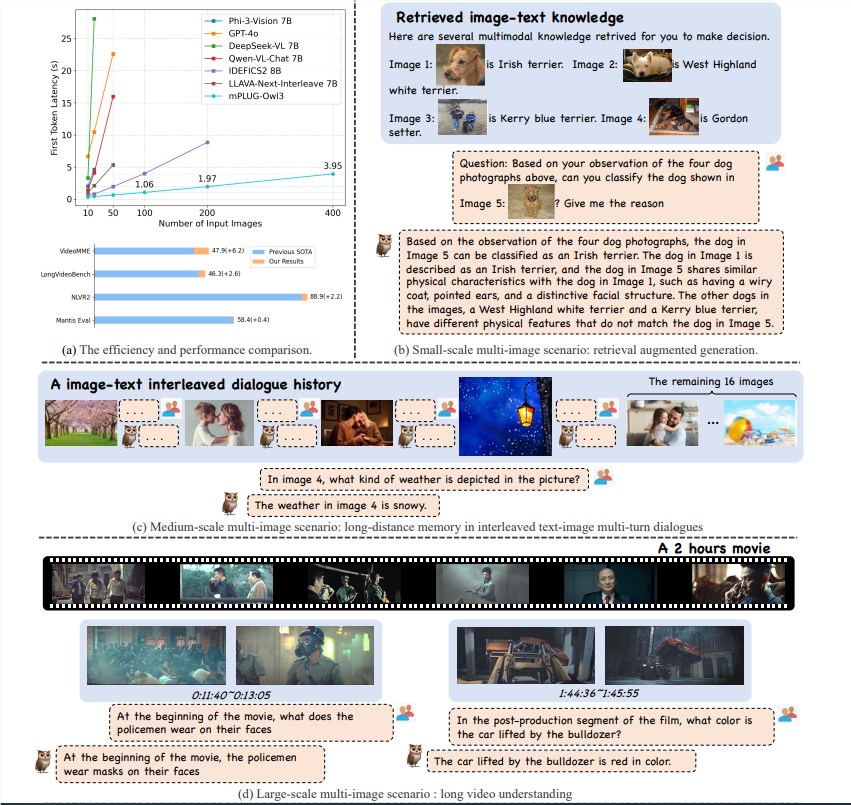

mPLUG-Owl3 pairs strong multimodal understanding with high inference efficiency. It reaches state-of-the-art (SOTA) results on a range of single-image, multi-image, and video benchmarks, while cutting first-token latency sixfold and processing eight times as many images (up to 400) on a single A100 GPU.

mPLUG-Owl3 can understand multimodal knowledge supplied in its input and use it to answer questions. It can even tell you which piece of knowledge it relied on and explain its reasoning in detail.

mPLUG-Owl3 can also work out how the contents of different images relate to one another and reason about them in depth. Whether the task involves spotting stylistic differences or recognizing characters, it handles it with ease.

Given a 2-hour video, mPLUG-Owl3 can begin answering user questions within 4 seconds, no matter which part of the video the questions concern.
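The article does not say how a long video is turned into model input. One common approach for image-sequence models, shown here purely as an illustration, is to sample a fixed number of frames evenly across the video. The sketch below assumes a 30 fps source and borrows the 400-image single-A100 figure quoted earlier as a frame budget; both numbers and the helper `uniform_frame_indices` are hypothetical and are not taken from the mPLUG-Owl3 code.

```python
def uniform_frame_indices(total_frames: int, budget: int) -> list[int]:
    """Pick `budget` frame indices spread evenly across a video.

    Hypothetical helper: splits the video into `budget` equal segments
    and takes the middle frame of each segment.
    """
    if total_frames <= 0 or budget <= 0:
        return []
    if budget >= total_frames:
        # Short clip: keep every frame.
        return list(range(total_frames))
    segment = total_frames / budget
    return [int(segment * i + segment / 2) for i in range(budget)]


# A 2-hour video at an assumed 30 fps, reduced to an assumed 400-frame budget.
fps = 30
duration_s = 2 * 60 * 60  # 7200 seconds
indices = uniform_frame_indices(duration_s * fps, 400)
print(len(indices))                      # 400
print(indices[1] - indices[0])           # 540 frames apart
print((indices[1] - indices[0]) / fps)   # 18.0 seconds between sampled frames
```

Under these assumptions each sampled frame stands in for 18 seconds of footage; a real pipeline could instead sample by scene changes or use a different budget.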

Under the hood, mPLUG-Owl3 uses a lightweight Hyper Attention module that extends the standard Transformer block so that a single block handles both image-text feature interaction and text modeling. Because this design adds few new parameters, the model is easier to train, and both training and inference become more efficient.
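The article does not spell out the block's internals, so here is a minimal pure-Python sketch of one way such a block could work, under our own assumptions: text tokens run causal self-attention as usual, a parallel cross-attention branch lets the same text queries attend to image features, and a learned per-token sigmoid gate mixes the two results before the residual connection. The class `HyperAttentionSketch`, the shared query projection, and the gate are illustrative assumptions, not the official mPLUG-Owl3 implementation (the linked repository has the real code).

```python
import math
import random

Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    # Plain row-by-column matrix product.
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def softmax(row: list[float]) -> list[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(x: float) -> float:
    # Split by sign so math.exp never overflows.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def attention(q: Matrix, k: Matrix, v: Matrix, causal: bool = False) -> Matrix:
    """Single-head scaled dot-product attention."""
    d = len(q[0])
    weights = []
    for i, row in enumerate(matmul(q, transpose(k))):
        scaled = [s / math.sqrt(d) for s in row]
        if causal:
            # Token i may only attend to positions 0..i.
            scaled = [s if j <= i else -math.inf for j, s in enumerate(scaled)]
        weights.append(softmax(scaled))
    return matmul(weights, v)


def rand_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


class HyperAttentionSketch:
    """Hypothetical block: text self-attention plus gated text-to-image cross-attention."""

    def __init__(self, dim: int, seed: int = 0):
        rng = random.Random(seed)
        self.dim = dim
        # Projections an ordinary self-attention layer already has.
        self.w_q = rand_matrix(dim, dim, rng)
        self.w_k = rand_matrix(dim, dim, rng)
        self.w_v = rand_matrix(dim, dim, rng)
        # The only new parameters in this sketch: image K/V projections and a gate vector.
        self.w_k_img = rand_matrix(dim, dim, rng)
        self.w_v_img = rand_matrix(dim, dim, rng)
        self.w_gate = [rng.gauss(0.0, 0.1) for _ in range(dim)]

    def new_param_count(self) -> int:
        return 2 * self.dim * self.dim + self.dim

    def __call__(self, text: Matrix, image: Matrix) -> Matrix:
        q = matmul(text, self.w_q)  # one query projection shared by both branches
        self_out = attention(q, matmul(text, self.w_k), matmul(text, self.w_v), causal=True)
        cross_out = attention(q, matmul(image, self.w_k_img), matmul(image, self.w_v_img))
        out = []
        for tok, s_row, c_row in zip(text, self_out, cross_out):
            g = sigmoid(sum(t * w for t, w in zip(tok, self.w_gate)))
            # Residual connection plus a gated mix of the two branches.
            out.append([t + g * c + (1 - g) * s for t, s, c in zip(tok, s_row, c_row)])
        return out


block = HyperAttentionSketch(dim=8)
rng = random.Random(1)
text = rand_matrix(5, 8, rng)    # 5 text tokens
image = rand_matrix(12, 8, rng)  # 12 visual tokens
out = block(text, image)
print(len(out), len(out[0]))     # 5 8
print(block.new_param_count())   # 136
```

In this sketch the only parameters added on top of a plain self-attention layer are the image key/value projections and the gate vector (2d² + d in total), which illustrates how reusing a block's existing projections keeps the added parameter count small. Layer normalization, multiple heads, the output projection, and the feed-forward network are omitted for brevity.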

Extensive experiments show that mPLUG-Owl3 achieves SOTA results on most single-image multimodal benchmarks. In multi-image evaluations, it surpasses models optimized specifically for multi-image scenarios, and on LongVideoBench it outperforms existing models, demonstrating strong long-video understanding.

The release of Alibaba's mPLUG-Owl3 is not only a technical step forward but also opens new possibilities for applying multimodal large models. As the technology matures, we look forward to more surprises from mPLUG-Owl3.

Paper: https://arxiv.org/pdf/2408.04840

Code: https://github.com/X-PLUG/mPLUG-Owl/tree/main/mPLUG-Owl3

Live Demo: https://huggingface.co/spaces/mPLUG/mPLUG-Owl3