Microsoft has released three new Phi-3.5 models, extending its work on multilingual and multimodal AI: Phi-3.5-mini-instruct, Phi-3.5-MoE-instruct, and Phi-3.5-vision-instruct. Each is aimed at a different class of applications.

Phi-3.5-mini-instruct is a lightweight model with 3.8 billion parameters, which makes it well suited to environments with limited compute. It supports a 128K-token context window and is optimized for instruction following, targeting tasks such as code generation, mathematical problem-solving, and logical reasoning. Despite its compact size, it performs competitively on multilingual and multi-turn dialogue tasks, and Microsoft reports that it outperforms other models of similar size.

Model page: https://huggingface.co/microsoft/Phi-3.5-mini-instruct
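Because the model is tuned for instruction following, prompts are expected in a specific chat format. The sketch below renders a conversation into that format, assuming the `<|system|>` / `<|user|>` / `<|assistant|>` and `<|end|>` markers shown on the model card; the function name `build_phi35_prompt` is hypothetical, and the exact template should be checked against the tokenizer that ships with the model.

```python
# Minimal sketch of the Phi-3.5 chat prompt format (assumed from the model
# card; verify against the tokenizer's own chat template before relying on it).

ALLOWED_ROLES = {"system", "user", "assistant"}


def build_phi35_prompt(messages):
    """Render a list of {"role", "content"} dicts into one prompt string.

    Each turn is wrapped as <|role|>\\ncontent<|end|>\\n, and the prompt ends
    with an open assistant turn so the model generates the reply.
    """
    parts = []
    for msg in messages:
        role = msg["role"]
        if role not in ALLOWED_ROLES:
            raise ValueError(f"unsupported role: {role}")
        parts.append(f"<|{role}|>\n{msg['content']}<|end|>\n")
    # Leave the assistant turn open for generation.
    parts.append("<|assistant|>\n")
    return "".join(parts)


if __name__ == "__main__":
    prompt = build_phi35_prompt([
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Write a Python function that reverses a string."},
    ])
    print(prompt)
```

In practice, Hugging Face `transformers` users would normally call the tokenizer's `apply_chat_template` method instead, which reads the template from the model's own configuration rather than hard-coding it.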

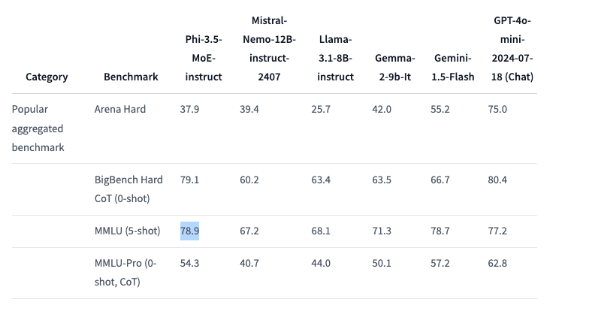

Phi-3.5-MoE-instruct is a mixture-of-experts (MoE) model: it contains multiple expert subnetworks, and a learned router activates only a few of them for each token, so only a fraction of the total parameters is used at each step. It has 41.9 billion parameters in total and supports a 128K-token context window. It performs strongly on reasoning, code, mathematics, and multilingual understanding, and on some benchmarks it surpasses larger models; on MMLU (Massive Multitask Language Understanding), for example, it outperforms OpenAI's GPT-4o mini.

Model page: https://huggingface.co/microsoft/Phi-3.5-MoE-instruct
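The routing idea behind a mixture-of-experts layer can be illustrated with a toy example. This is a generic top-k gating sketch, not Phi-3.5-MoE's actual implementation: the 16 experts, the top-2 selection, and the toy "experts" (simple vector scalers) are all assumptions chosen for illustration.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_logits, k):
    """Pick the k highest-scoring experts and normalise their weights to sum to 1."""
    ranked = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:k]
    weights = softmax([router_logits[i] for i in chosen])
    return list(zip(chosen, weights))


def moe_layer(x, experts, router_logits, k=2):
    """Run only the selected experts and sum their outputs, weighted by the router."""
    output = [0.0] * len(x)
    for idx, w in top_k_route(router_logits, k):
        y = experts[idx](x)  # unselected experts are never evaluated
        output = [o + w * yi for o, yi in zip(output, y)]
    return output


if __name__ == "__main__":
    num_experts = 16  # illustrative assumption
    # Toy experts: expert s multiplies its input by s.
    experts = [lambda v, s=s: [s * vi for vi in v] for s in range(1, num_experts + 1)]
    rng = random.Random(0)
    logits = [rng.gauss(0.0, 1.0) for _ in range(num_experts)]  # stand-in router scores
    print(top_k_route(logits, k=2))
    print(moe_layer([1.0, 2.0], experts, logits, k=2))
```

Taking a softmax over only the selected logits gives the same weights as a full softmax followed by renormalising over the top-k experts; either way, compute per token scales with k rather than with the total number of experts.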

Phi-3.5-vision-instruct is a multimodal model that processes both text and images. It targets tasks such as image understanding, optical character recognition (OCR), chart and table analysis, and video summarization. It also supports a 128K-token context window and can handle complex multi-frame visual tasks.

Model page: https://huggingface.co/microsoft/Phi-3.5-vision-instruct
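For multi-frame inputs such as video frames or several related images, the text prompt refers to each image through a numbered placeholder. The sketch below builds such a prompt, assuming the `<|image_1|>`, `<|image_2|>`, … placeholder convention (numbered from 1) shown on the model card; the function name is hypothetical, and the images themselves would be passed separately to the model's processor.

```python
# Minimal sketch: build a Phi-3.5-vision style prompt for several images.
# The placeholder format is an assumption taken from the model card.


def build_phi35_vision_prompt(num_images, question):
    """Return a user turn with one numbered image placeholder per frame."""
    if num_images < 1:
        raise ValueError("need at least one image")
    # Placeholders are numbered from 1 and placed before the question text.
    placeholders = "".join(f"<|image_{i}|>\n" for i in range(1, num_images + 1))
    return f"<|user|>\n{placeholders}{question}<|end|>\n<|assistant|>\n"


if __name__ == "__main__":
    print(build_phi35_vision_prompt(4, "Summarize what happens across these frames."))
```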

Training the three models required substantial compute. Phi-3.5-mini-instruct was trained on 3.4 trillion tokens over 10 days using 512 H100-80G GPUs; Phi-3.5-vision-instruct was trained on 500 billion tokens over 6 days; and Phi-3.5-MoE-instruct was trained on 4.9 trillion tokens over 23 days.
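The figures for the mini model are enough for a rough sense of scale. The back-of-the-envelope calculation below assumes all 512 GPUs ran around the clock for the full 10 days, so it gives total GPU-hours and an average throughput rather than measured numbers; the helper name is hypothetical. The source gives no GPU count for the other two models, so they are not included.

```python
def training_budget(num_gpus, days, tokens):
    """Return (GPU-hours, average tokens processed per GPU per second).

    Assumes every GPU was busy 24 hours a day for the whole period.
    """
    gpu_hours = num_gpus * days * 24
    tokens_per_gpu_second = tokens / (num_gpus * days * 86_400)
    return gpu_hours, tokens_per_gpu_second


if __name__ == "__main__":
    # Phi-3.5-mini-instruct: 512 GPUs, 10 days, 3.4 trillion tokens.
    hours, rate = training_budget(num_gpus=512, days=10, tokens=3.4e12)
    print(f"{hours:,} GPU-hours, ~{rate:,.0f} tokens per GPU per second")
```

Under these assumptions this prints `122,880 GPU-hours, ~7,686 tokens per GPU per second`.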

All three Phi-3.5 models are released under the MIT license, which allows developers to freely use, modify, and distribute them. This reflects Microsoft's support for the open-source community and makes it easier for developers to build these capabilities into their own applications.

Key Points:

🌟 Microsoft introduces three new AI models: a lightweight model for resource-constrained inference, a mixture-of-experts model, and a multimodal model.

📊 Phi-3.5-MoE outperforms GPT-4o mini on the MMLU benchmark.

📜 All three models are released under the MIT open-source license, allowing developers to freely use and modify them.