As technology companies race to bring artificial intelligence to devices, a growing number of small language models (SLMs) that can run on resource-constrained hardware have emerged. Nvidia's research team recently introduced Llama-3.1-Minitron 4B, a compressed version of the Llama 3.1 8B model produced with state-of-the-art pruning and distillation techniques. The new model rivals the performance of larger models and competes with similarly sized small models, while being more efficient to train and deploy.

Pruning and distillation are two key techniques for building smaller, more efficient language models. Pruning removes the less important parts of a model, either through depth pruning, which removes entire layers, or through width pruning, which removes individual elements such as neurons and attention heads. Distillation, in turn, transfers knowledge and capabilities from a large model (the "teacher") to a smaller, simpler model (the "student").
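
To make width pruning concrete, here is a minimal sketch that drops the least important hidden neurons of a single feed-forward layer. It assumes a simple activation-based importance score (mean absolute activation over a small calibration batch), uses a ReLU layer as a stand-in for Llama's gated MLP, and uses plain Python lists in place of tensors. All function names are hypothetical; this is an illustration of the idea, not Nvidia's implementation.

```python
# Sketch: width pruning of one feed-forward layer using an assumed
# activation-based importance score. Plain lists stand in for tensors.

def hidden_activations(x, w_in):
    """ReLU activations of each hidden neuron for input vector x.
    w_in has one row of input weights per hidden neuron."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in w_in]


def neuron_importance(calib_batch, w_in):
    """Mean absolute activation of each hidden neuron over a calibration batch."""
    totals = [0.0] * len(w_in)
    for x in calib_batch:
        for j, a in enumerate(hidden_activations(x, w_in)):
            totals[j] += abs(a)
    return [t / len(calib_batch) for t in totals]


def width_prune(w_in, w_out, calib_batch, keep):
    """Keep the `keep` highest-scoring hidden neurons.

    w_in:  hidden x d_in  (row j feeds neuron j)
    w_out: d_out x hidden (column j reads neuron j)
    """
    scores = neuron_importance(calib_batch, w_in)
    ranked = sorted(range(len(scores)), key=lambda j: scores[j], reverse=True)
    kept = sorted(ranked[:keep])  # restore original neuron order
    new_w_in = [w_in[j] for j in kept]
    new_w_out = [[row[j] for j in kept] for row in w_out]
    return new_w_in, new_w_out, kept


if __name__ == "__main__":
    w_in = [[1.0, 0.0], [0.0, 0.1], [0.5, 0.5], [-1.0, -1.0]]
    w_out = [[1.0, 2.0, 3.0, 4.0]]
    calib = [[1.0, 1.0], [2.0, 0.0]]
    print(width_prune(w_in, w_out, calib, keep=2))
    # -> ([[1.0, 0.0], [0.5, 0.5]], [[1.0, 3.0]], [0, 2])
```

Removing a neuron deletes both its row in the input projection and its column in the output projection, which is why both matrices are sliced with the same `kept` indices.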

There are two main approaches to distillation. The first is synthetic data generation (SDG) fine-tuning, in which the student model is fine-tuned on prompts and the responses the teacher model generates for them. The second is classical knowledge distillation, in which the student learns to mimic not only the teacher's final outputs but also its internal activations.
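
Classical knowledge distillation is usually expressed as a loss that compares the teacher's and student's outputs and, optionally, their intermediate states. The minimal sketch below combines a temperature-softened forward KL divergence on logits with a mean-squared error on one hidden-state vector. The temperature, the weight `alpha`, and the assumption that both hidden states have the same size are illustrative choices, not values from Nvidia's work; when pruning shrinks the student's hidden size, a learned projection would be needed before the two can be compared.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; subtracts the max for numerical stability."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def logit_kd_loss(student_logits, teacher_logits, temperature=2.0):
    """Forward KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * (math.log(pi) - math.log(qi)) for pi, qi in zip(p, q) if pi > 0)
    return temperature ** 2 * kl


def hidden_state_loss(student_h, teacher_h):
    """Mean squared error between same-sized hidden-state vectors."""
    return sum((s - t) ** 2 for s, t in zip(student_h, teacher_h)) / len(student_h)


def distillation_loss(student_logits, teacher_logits, student_h, teacher_h,
                      alpha=0.5, temperature=2.0):
    """Output matching plus alpha-weighted intermediate-state matching."""
    return (logit_kd_loss(student_logits, teacher_logits, temperature)
            + alpha * hidden_state_loss(student_h, teacher_h))


if __name__ == "__main__":
    teacher = [2.0, 1.0, 0.1]
    print(distillation_loss(teacher, teacher, [0.3, 0.4], [0.3, 0.4]))  # -> 0.0
    print(distillation_loss([0.5, 1.5, 0.0], teacher, [0.3, 0.4], [0.1, 0.4]))
```

A student whose outputs and hidden states exactly match the teacher's gets a loss of zero; the loss grows as its softened distribution or its hidden states drift away from the teacher's.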

In a previous study, Nvidia researchers used pruning and distillation to compress the Nemotron 15B model into an 8-billion-parameter model, and then further into a 4-billion-parameter model. Compared with training models of the same size from scratch, this approach improved MMLU scores by up to 16% while requiring up to 40 times fewer training tokens.

This time, the team applied the same methods to derive a 4-billion-parameter model from Llama 3.1 8B. First, they fine-tuned the unpruned 8B model on a 94-billion-token dataset to correct for the distribution shift between the teacher's original training data and the distillation dataset. They then applied depth pruning and width pruning separately, producing two versions of Llama-3.1-Minitron 4B.
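
Depth pruning needs a rule for deciding which layers to drop. One simple option, assumed here purely for illustration, is to try every contiguous block of `n_remove` layers and keep the shortened stack with the lowest evaluation loss. `depth_prune` and `eval_loss` are hypothetical names: in practice `eval_loss` might run the shortened model on a validation set, whereas the toy scorer in the demo just sums made-up per-layer importance values.

```python
from typing import Callable, List, Sequence, Tuple


def depth_prune(layers: Sequence[str], n_remove: int,
                eval_loss: Callable[[List[str]], float]) -> Tuple[List[str], int]:
    """Drop the contiguous block of `n_remove` layers whose removal gives
    the lowest eval_loss. Returns (remaining layers, start index of the block)."""
    if not 0 <= n_remove <= len(layers):
        raise ValueError("n_remove must be between 0 and len(layers)")
    best_start, best_loss = 0, float("inf")
    for start in range(len(layers) - n_remove + 1):
        candidate = list(layers[:start]) + list(layers[start + n_remove:])
        loss = eval_loss(candidate)
        if loss < best_loss:
            best_start, best_loss = start, loss
    kept = list(layers[:best_start]) + list(layers[best_start + n_remove:])
    return kept, best_start


if __name__ == "__main__":
    # Toy, made-up importance values; a real setup would measure validation loss.
    importance = {"L0": 5.0, "L1": 1.0, "L2": 0.5, "L3": 0.7, "L4": 3.0, "L5": 4.0}

    def toy_eval_loss(candidate):
        # Penalize the stack by the importance of every layer it lost.
        return sum(v for name, v in importance.items() if name not in candidate)

    print(depth_prune(list(importance), n_remove=2, eval_loss=toy_eval_loss))
    # -> (['L0', 'L1', 'L4', 'L5'], 2)
```

Searching only contiguous blocks keeps the number of candidates linear in the layer count, at the cost of never considering scattered layer removals.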

The researchers fine-tuned the pruned models using NeMo-Aligner and evaluated their capabilities in instruction following, role-playing, retrieval-augmented generation (RAG), and function calling.

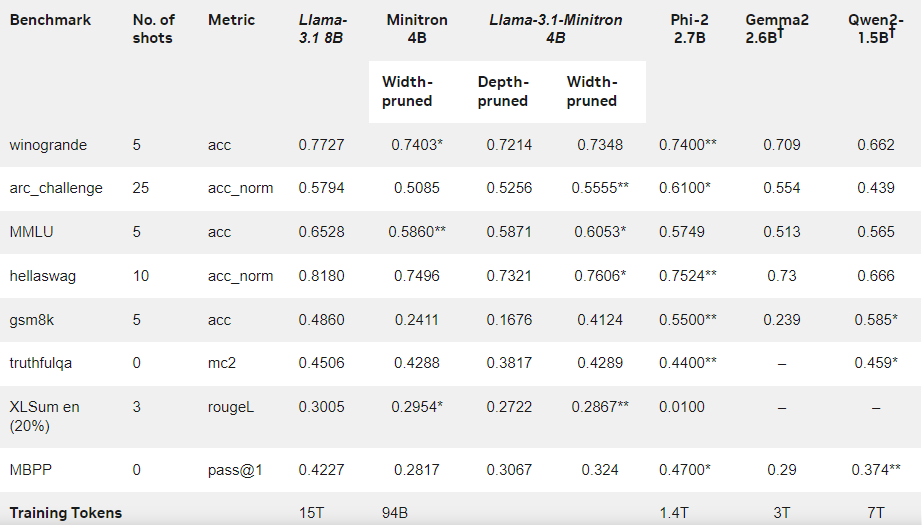

The results showed that, despite being trained on less data, Llama-3.1-Minitron 4B performs comparably to other small models. The width-pruned version has been released on Hugging Face under a license that permits commercial use, making the model available to a wider range of users and developers.

Official Blog: https://developer.nvidia.com/blog/how-to-prune-and-distill-llama-3-1-8b-to-an-nvidia-llama-3-1-minitron-4b-model/

Key Points:

🌟 Llama-3.1-Minitron 4B is a small language model from Nvidia built with pruning and distillation, making it efficient to train and deploy.

📈 Nvidia's pruning-and-distillation approach has required up to 40 times fewer training tokens than training from scratch while still improving performance.

🔓 The width-pruned version is available on Hugging Face under a license that permits commercial use.