Yunzhisheng, a well-known Chinese artificial intelligence company, announced in Beijing on August 23, 2024, the launch of its latest research achievement: the Shanhai Multimodal Large Model.

The Shanhai Multimodal Large Model is part of Yunzhisheng's Atlas AI infrastructure. It can receive and process inputs in several modalities, including text, audio, and images, and generate real-time outputs in any combination of these formats. This allows the model not only to support efficient voice interaction but also to deliver a smooth experience close to natural human conversation.

In voice interaction, the model responds to user commands in real time, allows users to interrupt it mid-conversation, and can express emotions to build an emotional connection with users. The Shanhai model can also switch freely between voice tones to create a personalized voice tailored to each user's needs.

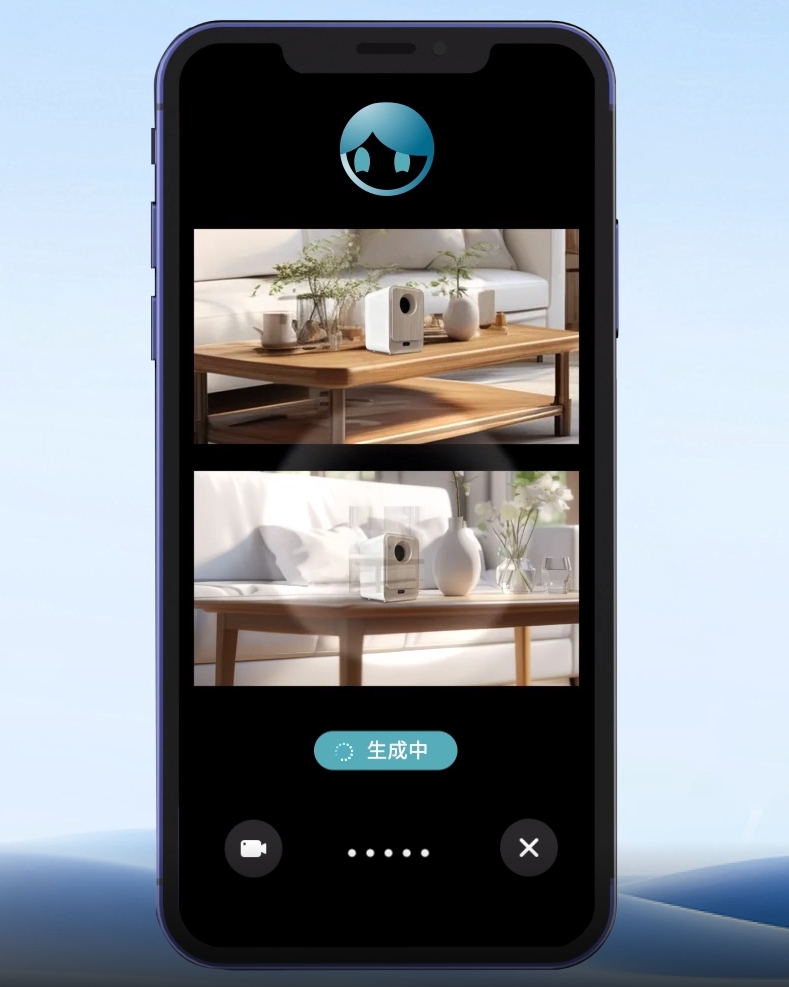

In visual interaction, the Shanhai model can use a camera to understand and describe its surroundings, performing precise object recognition and scene analysis. It can also quickly generate visual content from user instructions, providing a personalized visual experience.

Yunzhisheng stated that the Shanhai Multimodal Large Model will become the new core of its technology platform, Yunzhi Brain, and will enable richer and more efficient products and solutions for the smart living and smart healthcare sectors. Since its release in May 2023, the Shanhai Large Model has achieved strong results in multiple authoritative competitions, demonstrating both broad general capabilities and strong domain-specific expertise.

Try it at: https://shanhai.unisound.com/