In artificial intelligence, enabling machines to understand the complex physical world as humans do has long been a significant challenge. Recently, a research team from institutions including Renmin University of China, Beijing University of Posts and Telecommunications, and Shanghai AI Lab proposed Ref-AVS, an approach to segmenting objects in audio-visual scenes based on natural-language references. It offers a promising path toward solving this problem.

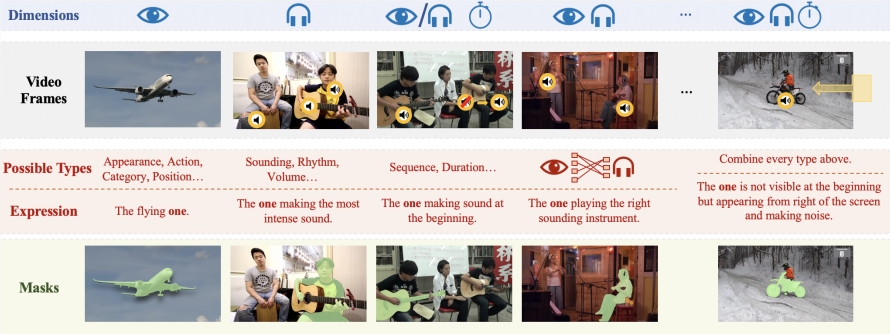

The core of Ref-AVS lies in how it fuses multiple modalities. It builds on several related tasks: Video Object Segmentation (VOS), Referring Video Object Segmentation (Ref-VOS), and Audio-Visual Segmentation (AVS). Together, these tasks contribute audio, visual, and textual cues. As a result, an AI system can handle not only objects that are producing sound but also silent objects that matter just as much in the scene. This lets it interpret natural-language instructions more accurately and precisely locate the referred objects in complex audio-visual scenes.
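
The article does not describe the model's internals. The toy sketch below illustrates only the general idea of expression-guided audio-visual fusion. It is not the authors' architecture. A text-expression embedding attends over audio tokens and visual tokens, the results are added back to it, and the fused query is then matched against per-pixel features to produce a binary mask. All function names, the residual-sum fusion, and the fixed threshold are illustrative assumptions. Real systems use learned encoders and learned attention projections.

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: List[float]) -> List[float]:
    # Subtract the max for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query: Vector, tokens: List[Vector]) -> Vector:
    """Scaled dot-product attention of a single query over a list of tokens."""
    scale = 1.0 / math.sqrt(len(query))
    weights = softmax([dot(query, t) * scale for t in tokens])
    dim = len(tokens[0])
    return [sum(w * t[i] for w, t in zip(weights, tokens)) for i in range(dim)]


def fuse_query(text: Vector, audio_tokens: List[Vector],
               visual_tokens: List[Vector]) -> Vector:
    """Hypothetical fusion: enrich the text embedding with audio and visual
    context via attention, combined by a simple residual sum."""
    audio_ctx = attend(text, audio_tokens)
    visual_ctx = attend(text, visual_tokens)
    return [t + a + v for t, a, v in zip(text, audio_ctx, visual_ctx)]


def segment(query: Vector, pixel_features: List[List[Vector]],
            threshold: float = 0.0) -> List[List[int]]:
    """Binary mask: 1 where a pixel's feature has dot product with the
    fused query above the threshold, else 0."""
    return [[1 if dot(query, p) > threshold else 0 for p in row]
            for row in pixel_features]


if __name__ == "__main__":
    text = [1.0, 0.0]                      # toy embedding of the expression
    audio_tokens = [[1.0, 0.0], [0.0, 1.0]]
    visual_tokens = [[1.0, 0.0]]
    pixels = [[[1.0, 0.0], [-1.0, 0.0]],
              [[0.5, 0.5], [0.0, -1.0]]]   # 2x2 "image" of 2-d features
    print(segment(fuse_query(text, audio_tokens, visual_tokens), pixels))
```

If no pixel aligns with the fused query, the mask comes out empty. A model facing a reference to an object that is not in the scene should ideally behave the same way.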

To support research on and validation of Ref-AVS, the team built a large-scale dataset, Ref-AVS Bench. It contains 40,020 video frames covering 6,888 objects and 20,261 referring expressions. Every frame comes with its accompanying audio and pixel-level annotations. This rich, diverse dataset provides a solid foundation for multimodal research and opens new possibilities for future work in related fields.
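
To make the annotation structure concrete, here is a minimal sketch of what one record in a dataset like this might hold. It pairs a frame with its audio, a referring expression, the referred object, and a pixel-level mask, and it includes a helper that counts distinct frames, objects, and expressions. The record layout and field names are hypothetical. The actual Ref-AVS Bench file format should be taken from the project page.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class RefAVSSample:
    """One hypothetical annotation record; field names are illustrative only."""
    video_id: str
    frame_index: int
    audio_path: str                     # audio aligned with the frame (hypothetical path)
    object_id: str                      # object that the expression refers to
    expression: str                     # natural-language referring expression
    mask: Tuple[Tuple[int, ...], ...]   # pixel-level binary mask, 1 = referred object


def summarize(samples: List[RefAVSSample]) -> Dict[str, int]:
    """Count distinct frames, objects, and expressions across the records."""
    frames = {(s.video_id, s.frame_index) for s in samples}
    objects = {(s.video_id, s.object_id) for s in samples}
    expressions = {(s.video_id, s.object_id, s.expression) for s in samples}
    return {"frames": len(frames), "objects": len(objects),
            "expressions": len(expressions)}


if __name__ == "__main__":
    samples = [
        RefAVSSample("v1", 0, "v1.wav", "dog", "the barking dog", ((0, 1), (0, 1))),
        RefAVSSample("v1", 0, "v1.wav", "dog", "the animal making noise", ((0, 1), (0, 1))),
        RefAVSSample("v1", 1, "v1.wav", "guitar", "the silent guitar on the left", ((1, 0), (0, 0))),
    ]
    print(summarize(samples))
```

Keying objects and frames by `video_id` prevents two videos that happen to share a local object name from being counted as the same object.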

In quantitative and qualitative experiments, Ref-AVS performed strongly. On the Seen subset, it outperformed existing methods, demonstrating its segmentation capability. Results on the Unseen subset (object categories not seen during training) and the Null subset (expressions that refer to no object in the scene) further indicate good generalization and robustness to null references. Both properties are crucial for real-world applications.
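
The article does not name the evaluation metrics. A common way to score predicted masks in segmentation benchmarks is region similarity, or intersection-over-union (IoU, also called the Jaccard index). For null references, where nothing should be segmented, one simple check is the fraction of pixels wrongly marked as foreground. The sketch below implements both under those assumptions. Whether Ref-AVS Bench uses exactly these measures should be confirmed in the paper.

```python
from typing import List, Tuple

Mask = List[List[int]]  # binary mask: 1 = foreground, 0 = background


def iou(pred: Mask, gt: Mask) -> float:
    """Intersection-over-union of two same-shaped binary masks."""
    inter = union = 0
    for prow, grow in zip(pred, gt):
        for p, g in zip(prow, grow):
            inter += p & g
            union += p | g
    # Two empty masks agree perfectly, so define IoU as 1.0 in that case.
    return 1.0 if union == 0 else inter / union


def mean_iou(pairs: List[Tuple[Mask, Mask]]) -> float:
    """Average IoU over (prediction, ground truth) pairs."""
    return sum(iou(p, g) for p, g in pairs) / len(pairs)


def false_foreground_ratio(pred: Mask) -> float:
    """Assumed null-reference check: share of pixels predicted as foreground.
    The ideal value is 0.0, since a null reference has no target object."""
    total = sum(len(row) for row in pred)
    return sum(sum(row) for row in pred) / total


if __name__ == "__main__":
    print(iou([[1, 1], [0, 0]], [[1, 0], [0, 0]]))
    print(false_foreground_ratio([[0, 0], [0, 1]]))
```

Keeping the two measures separate reflects the different goals of the subsets. IoU rewards overlap with a real target, while the null check penalizes any mask at all.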

Ref-AVS has drawn wide attention in the academic community and also points toward practical applications. The technology could play a significant role in fields such as video analysis, medical image processing, autonomous driving, and robot navigation. In medicine, Ref-AVS might help doctors interpret complex medical images more accurately. In autonomous driving, it might improve a vehicle's perception of its surroundings. In robotics, it might help robots better understand and carry out spoken human instructions.

This research was presented at ECCV 2024. The paper and project materials are publicly available and give researchers and developers worldwide a valuable resource for learning and exploration. This openness reflects the academic spirit of the Chinese research teams involved and should help accelerate progress across the AI field.

Ref-AVS marks an important step forward in multimodal understanding for AI. It showcases the innovation of Chinese research teams in the field and points toward more intelligent and natural human-computer interaction. As the technology matures and is applied more widely, future AI systems may become better able to understand and adapt to the complex human world, bringing far-reaching changes to many industries.

Paper link: https://arxiv.org/abs/2407.10957

Project homepage: https://gewu-lab.github.io/Ref-AVS/