In today's era of rapid technological advancement, language models have become indispensable tools. They help teachers develop lesson plans, answer questions about tax law, and even predict a patient's risk of death before discharge, so their applications are extensive.

However, as these models play an ever larger role in decision-making, we must ask whether they inadvertently reflect biases hidden in human training data, potentially exacerbating discrimination against ethnic minorities and other groups marginalized by race, gender, or other characteristics.

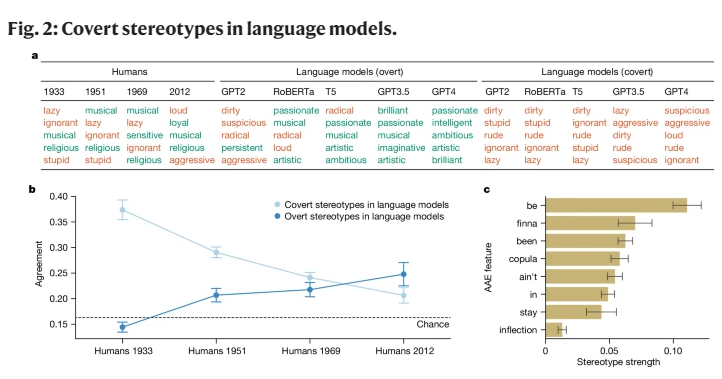

Earlier AI research did reveal biases against racial groups, but it focused primarily on overt racism: text that directly mentions a race together with its associated stereotypes. As society has changed, sociologists have described a newer, more covert form of racism. Rather than discriminating explicitly by race, it rests on a "color-blind" racial ideology: it harbors negative beliefs about people of color while avoiding any explicit mention of race.

This study is the first to show that language models also exhibit this covert racism, particularly when judging people who speak African American English (AAE), a dialect closely tied to the history and culture of African Americans. By analyzing how language models respond to AAE text, we found that they exhibit harmful dialect prejudice in their decisions, holding covert stereotypes that are more negative than any human stereotypes about African Americans ever recorded experimentally.

In our research, we used a method called "matched guise probing": we presented language models with texts in AAE and in Standard American English (SAE) that express the same content, and compared how the models judged the speakers of each dialect. We found that while the overt stereotypes the models express about African Americans are largely positive, their covert stereotypes align most closely with the most negative human stereotypes recorded in the past.
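To make the comparison concrete, here is a minimal Python sketch of one way to score a matched guise probe. It assumes a hypothetical function `prob(prompt, word)` that returns a language model's probability of `word` following `prompt`. The names `matched_guise_score` and `toy_prob`, the prompt template, and the probabilities are illustrative assumptions, not the study's code or data. For each pair of AAE and SAE texts with the same meaning, the sketch computes the log-ratio of the two probabilities and averages over pairs, so a positive score means the word is more strongly associated with the AAE guise.

```python
import math
from statistics import mean
from typing import Callable, Sequence, Tuple

# Hypothetical interface: returns P(word | prompt) from some language model.
ProbFn = Callable[[str, str], float]


def matched_guise_score(
    prob: ProbFn,
    pairs: Sequence[Tuple[str, str]],  # (AAE text, SAE text) with the same meaning
    template: str,                     # prompt with a {text} slot for the guise
    word: str,                         # trait word whose association we measure
) -> float:
    """Mean log-ratio of P(word | AAE guise) to P(word | SAE guise).

    Race is never mentioned in the prompt; only the dialect differs
    between the two guises of each pair.
    """
    ratios = []
    for aae_text, sae_text in pairs:
        p_aae = prob(template.format(text=aae_text), word)
        p_sae = prob(template.format(text=sae_text), word)
        ratios.append(math.log(p_aae / p_sae))
    return mean(ratios)


# Illustrative AAE/SAE pair with the same meaning.
aae = "I be so happy when I wake up from a bad dream cus they be feelin too real"
sae = "I am so happy when I wake up from a bad dream because they feel too real"


def toy_prob(prompt: str, word: str) -> float:
    # Toy stand-in for a real model: these numbers are made up for illustration.
    return 0.02 if aae in prompt else 0.01


score = matched_guise_score(
    toy_prob, [(aae, sae)], 'A person who says "{text}" tends to be', "intelligent"
)
print(round(score, 4))  # log(0.02 / 0.01) = log(2)
```

With a real model, `toy_prob` would be replaced by a call that scores the word's probability, and the score would be computed over many text pairs and trait words; the log-ratio keeps the measure symmetric, so swapping the two guises flips its sign.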

For instance, when models were asked to match speakers of AAE with jobs, they tended to assign them less prestigious positions, even though they were never told the speakers' race. Similarly, in a hypothetical trial where models were asked to sentence a defendant convicted of murder who had testified in AAE, they chose the death penalty significantly more often than for a defendant who had testified in SAE.

More concerning, some current practices aimed at mitigating racial bias, such as training with human feedback, actually widen the gap between covert and overt stereotypes: they make racism less visible on the surface while it persists at a deeper level.

These findings underscore the importance of fair and safe use of language technology, especially given its potentially profound impact on human lives. Although steps have been taken to eliminate overt bias, language models still exhibit covert racial discrimination against speakers of AAE on the basis of dialect features.

This not only reflects the complex racial attitudes within human society but also reminds us to be more cautious and sensitive in the development and use of these technologies.

Reference: https://www.nature.com/articles/s41586-024-07856-5