A recent peer-reviewed study published in the journal Cureus reports that OpenAI's GPT-4 language model passed the Japanese National Physical Therapy Examination without any additional training.

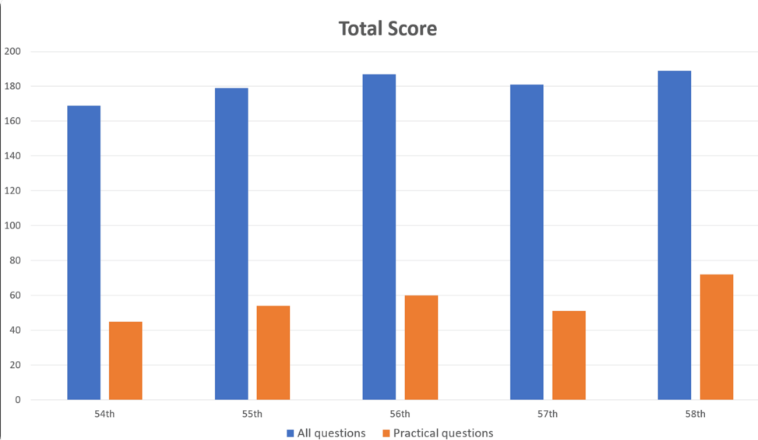

The researchers gave GPT-4 1,000 questions covering memory, comprehension, application, analysis, and evaluation. GPT-4 answered 73.4% of the questions correctly overall and passed all five test sections. However, the study also revealed clear limitations, particularly on practical questions and on questions that include images or tables.

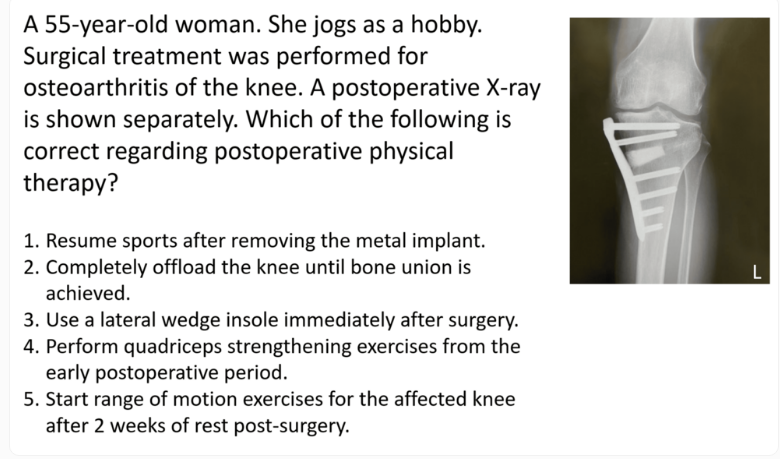

GPT-4 performed well on general questions, with an accuracy of 80.1%, but reached only 46.6% on practical questions. Similarly, it handled text-only questions far better (80.5% correct) than questions with images and tables (35.4% correct). This finding aligns with previous studies that highlighted GPT-4's limitations in visual understanding.
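As a rough consistency check on these figures, the overall accuracy should be a weighted average of the two subgroup accuracies in each split. The sketch below is a back-of-the-envelope calculation, not something taken from the study: it solves for the share of questions in each subgroup that the reported percentages imply. The function name `implied_share` is hypothetical, and because the published percentages are rounded, the resulting counts are estimates only.

```python
def implied_share(overall: float, acc_a: float, acc_b: float) -> float:
    """Return the share s of group A that satisfies
    s * acc_a + (1 - s) * acc_b == overall (all values in percent)."""
    if acc_a == acc_b:
        raise ValueError("subgroup accuracies must differ to solve for a share")
    return (overall - acc_b) / (acc_a - acc_b)


TOTAL_QUESTIONS = 1000   # from the article
OVERALL_ACCURACY = 73.4  # percent, from the article

# General (80.1%) vs. practical (46.6%) questions.
general_share = implied_share(OVERALL_ACCURACY, 80.1, 46.6)

# Text-only (80.5%) vs. image/table (35.4%) questions.
text_share = implied_share(OVERALL_ACCURACY, 80.5, 35.4)

correct_overall = round(TOTAL_QUESTIONS * OVERALL_ACCURACY / 100)
implied_general = round(TOTAL_QUESTIONS * general_share)
implied_text_only = round(TOTAL_QUESTIONS * text_share)

print(f"Correct answers overall:        {correct_overall}")
print(f"Implied general questions:      {implied_general}")
print(f"Implied practical questions:    {TOTAL_QUESTIONS - implied_general}")
print(f"Implied text-only questions:    {implied_text_only}")
print(f"Implied image/table questions:  {TOTAL_QUESTIONS - implied_text_only}")
```

Under these assumptions the reported percentages are mutually consistent: they imply that roughly four in five questions were general rather than practical, and that a similar majority were text-only. The exact counts should be taken from the paper itself rather than from this estimate.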

Notably, question difficulty and text length had little impact on GPT-4's performance. Although the model was trained primarily on English data, it also performed well with Japanese input.

The researchers noted that while the study demonstrates GPT-4's potential in clinical rehabilitation and medical education, the results should be interpreted with caution. They emphasized that GPT-4 cannot answer every question correctly, and that further assessments are needed to evaluate newer versions and the model's capabilities in written and reasoning tests.

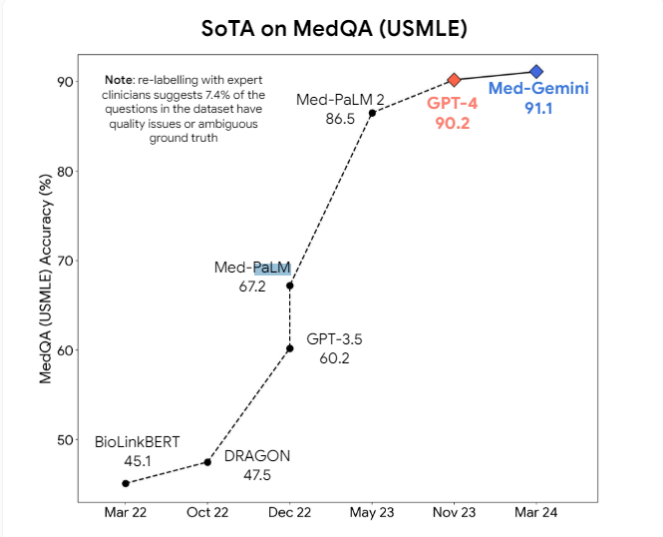

Additionally, the researchers suggested that multimodal models such as GPT-4V could bring further improvements in visual understanding. Google's Med-PaLM 2 and Med-Gemini, along with Meta's medical models based on Llama 3, are currently under active development and aim to surpass general-purpose models on medical tasks.

However, experts believe it may still take a long time before medical AI models are widely applied in practice. The error rates of current models are still too high for medical settings, and significant progress in reasoning capabilities is needed before these models can be safely integrated into daily medical practice.