NVIDIA, together with researchers from Georgia Tech, the University of Maryland (UMD), and the Hong Kong Polytechnic University (HKPU), has introduced NVEagle, a new vision-language model. NVEagle can interpret images and hold a conversation about them, acting as an assistant that can both see and talk.

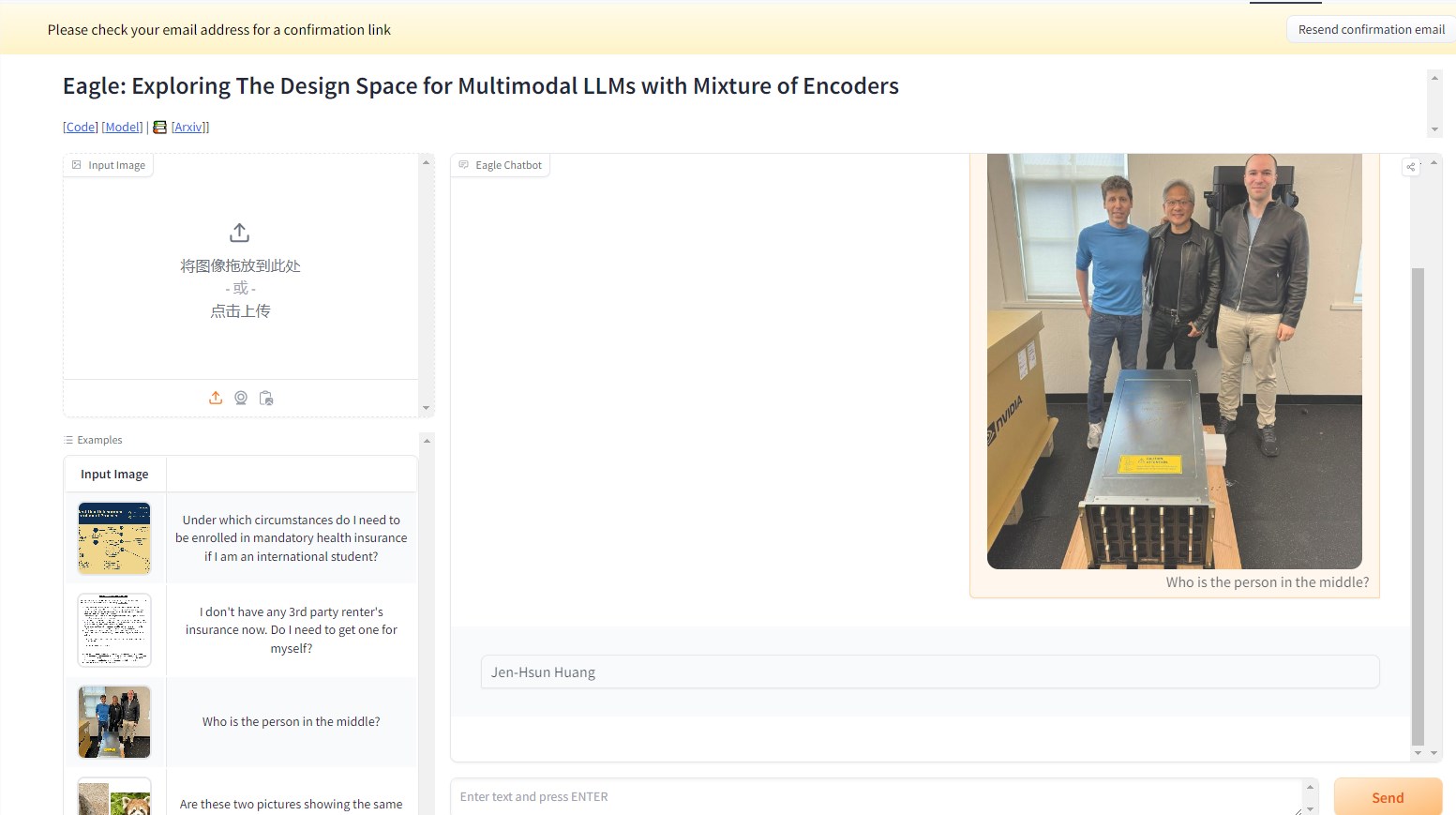

In the example shown, NVEagle is asked who the person in the image is. The model analyzes the picture and correctly identifies him as Jensen Huang.

As a multimodal large language model (MLLM), NVEagle integrates visual and linguistic information, allowing it to understand complex real-world scenes and ground its responses in what it sees. Its core design converts images into visual tokens, which are then combined with text embeddings so that the language model can reason over both together.
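To make the "visual tokens plus text embeddings" idea concrete, here is a minimal sketch of the general pattern many MLLMs follow. It assumes a simple linear projector (real models often use a small MLP), and the function names, toy dimensions, and random weights are illustrative assumptions, not NVEagle's actual code. Features from a vision encoder are projected into the language model's embedding space and placed in front of the text embeddings, forming a single sequence for the language model.

```python
import random


def linear(x, weight):
    """Multiply vector x (length in_dim) by a weight matrix given as out_dim rows of in_dim values."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weight]


def build_input_sequence(patch_features, projector, text_embeddings):
    """Project each image-patch feature into the LLM embedding space and
    place the resulting visual tokens in front of the text token embeddings."""
    visual_tokens = [linear(f, projector) for f in patch_features]
    return visual_tokens + text_embeddings


rng = random.Random(0)
VISION_DIM, LLM_DIM = 8, 4          # toy sizes (assumed); real models use far larger widths
NUM_PATCHES, NUM_TEXT_TOKENS = 6, 3

# Hypothetical stand-ins for vision-encoder outputs, a learned projector, and text embeddings.
patch_features = [[rng.random() for _ in range(VISION_DIM)] for _ in range(NUM_PATCHES)]
projector = [[rng.uniform(-0.1, 0.1) for _ in range(VISION_DIM)] for _ in range(LLM_DIM)]
text_embeddings = [[rng.random() for _ in range(LLM_DIM)] for _ in range(NUM_TEXT_TOKENS)]

sequence = build_input_sequence(patch_features, projector, text_embeddings)
print(len(sequence), len(sequence[0]))  # 9 4
```

Once projected, visual tokens have the same width as text embeddings, so the language model can treat them like ordinary tokens in its input sequence.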

However, building such a model poses many challenges, particularly in improving visual perception. Prior work has shown that many existing MLLMs suffer from "hallucination," producing inaccurate or nonsensical outputs, especially when handling high-resolution images. The problem is most evident in tasks that require fine-grained detail, such as optical character recognition (OCR) and document understanding. To address this, the research team systematically explored the design space, testing different vision encoders and strategies for fusing their outputs.

NVEagle is the outcome of this research and comes in three versions: Eagle-X5-7B, Eagle-X5-13B, and Eagle-X5-13B-Chat. The 7B and 13B models target general vision-language tasks, while 13B-Chat is fine-tuned specifically for conversational AI, making it better at dialogue grounded in visual input.

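A notable feature of NVEagle is its mixture-of-encoders design. Instead of relying on a single vision encoder, it combines several complementary vision encoders ("vision experts"), which significantly improves its handling of complex visual information. According to the accompanying paper, simply concatenating the visual tokens produced by these encoders proved as effective as more complex fusion strategies. The models have been released on Hugging Face, making them accessible to researchers and developers.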
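The Eagle paper reports that concatenating the visual tokens of complementary encoders works as well as more elaborate fusion schemes. The sketch below illustrates channel-wise concatenation under stated assumptions: the encoders are hypothetical stand-ins returning random features, the channel widths are toy values rather than Eagle's real configuration, and all encoders are assumed to emit the same number of tokens (in practice, feature maps are resized to a common grid first).

```python
import random


def toy_encoder(num_tokens, channels, seed):
    """Hypothetical stand-in for a vision encoder: returns num_tokens feature vectors of width `channels`."""
    rng = random.Random(seed)
    return [[rng.random() for _ in range(channels)] for _ in range(num_tokens)]


def concat_channels(feature_sets):
    """Channel-concatenate per-token features from several encoders.

    Every encoder must emit the same number of tokens; in practice feature maps
    are resized/interpolated to a common grid before this step.
    """
    num_tokens = len(feature_sets[0])
    if any(len(fs) != num_tokens for fs in feature_sets):
        raise ValueError("all encoders must produce the same number of tokens")
    # Token i of the fused output is token i from each encoder, joined end to end.
    return [[value for fs in feature_sets for value in fs[i]] for i in range(num_tokens)]


NUM_TOKENS = 16  # e.g. a 4x4 grid of visual tokens (toy size)
# Hypothetical encoders with different channel widths (not Eagle's real configuration).
encoder_channels = {"encoder_a": 4, "encoder_b": 3, "encoder_c": 5}

features = [
    toy_encoder(NUM_TOKENS, channels, seed)
    for seed, channels in enumerate(encoder_channels.values())
]
fused = concat_channels(features)
print(len(fused), len(fused[0]))  # 16 12
```

The fused tokens would then pass through a projector into the language model's embedding space. Concatenating along the channel dimension keeps the token count fixed no matter how many encoders are added, so the language model's input sequence does not grow; the cost is a wider projector input.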

NVEagle performs strongly across a range of benchmarks. In OCR, the Eagle model reportedly achieved an average score of 85.9 on OCRBench, surpassing leading models such as InternVL and LLaVA-HR. It scored 88.8 on TextVQA, which tests answering questions about text that appears in images, and 65.7 on GQA, a benchmark for compositional visual question answering. Performance also continues to improve as additional vision experts are added.

Through systematic design exploration and optimization, the NVEagle model series addresses several key challenges in visual perception, paving the way for more capable vision-language models.

Demo: https://huggingface.co/spaces/NVEagle/Eagle-X5-13B-Chat

Key Points:

🌟 NVEagle is NVIDIA's new vision-language model, designed to better understand complex visual information.

📈 The model includes three versions, each suited for different tasks, with the 13B-Chat version focusing on conversational AI.

🏆 NVEagle outperforms many leading models across multiple benchmarks, with particularly strong OCR results.