Google is testing a new feature called "Ask Photos," which lets users explore their photo libraries by asking questions in natural language.

The feature was first teased in May and is now rolling out gradually to some users in the United States through Google Labs.

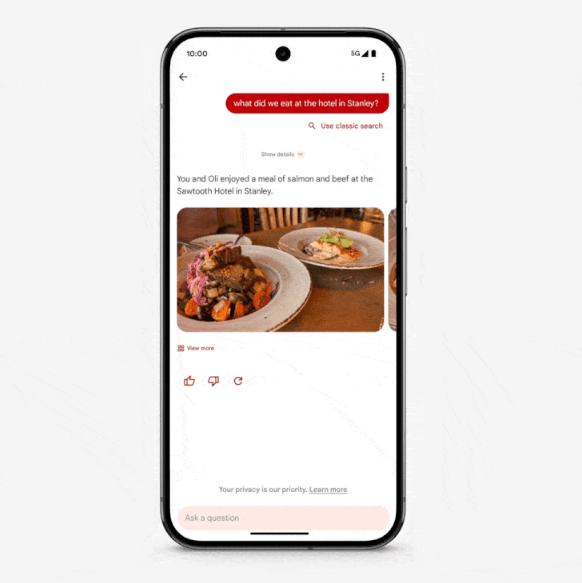

Users can ask "Ask Photos" questions such as "Where did we camp last time we went to Yosemite?" or "What did we eat at the Stanley Hotel?" and get a direct answer.

The feature is powered by Google's Gemini AI model. By analyzing the content of users' photos, the Photos app not only provides answers but also retrieves the relevant images, making it easier to find specific memories.

Google also says that "Ask Photos" can handle tasks such as summarizing a recent vacation or selecting the best family photos for a shared album.

To complement "Ask Photos," Google is also upgrading the traditional search function. Users can search in natural language, with queries such as "Me and Alice laughing" or "Kayaking by the lake," and sort the results by date or relevance. The upgraded search is currently available in English on Android and iOS, with support for more languages coming in the next few weeks.

In this update, Google Photos has also replaced the original "Library" tab with a new "Collections" page, aiming to make it easier for users to find their photos and videos.

With natural language search, users can quickly find specific photos among thousands without having to sift through them manually or filter by location.

Key Points:

📸 The new feature "Ask Photos" helps users query and organize photos in new ways.

🤖 Powered by Google's Gemini AI model, the feature returns relevant photos along with answers to users' questions.

🌐 Traditional search is being upgraded to support natural language queries and sorting of results by date or relevance.