The recently released open-source AI model Reflection 70B drew widespread industry skepticism shortly after its debut.

The model, launched by New York-based startup HyperWrite, is billed as a variant of Meta's Llama 3.1 and drew attention for its impressive performance in third-party tests. However, as more test results were disclosed, Reflection 70B's reputation came under challenge.

The controversy began when Matt Shumer, co-founder and CEO of HyperWrite, announced Reflection 70B on the social media platform X on September 6, confidently calling it "the world's strongest open-source model."

Shumer also described the "reflection tuning" technique behind the model, claiming that it lets the model audit its own work before generating content, thereby improving accuracy.

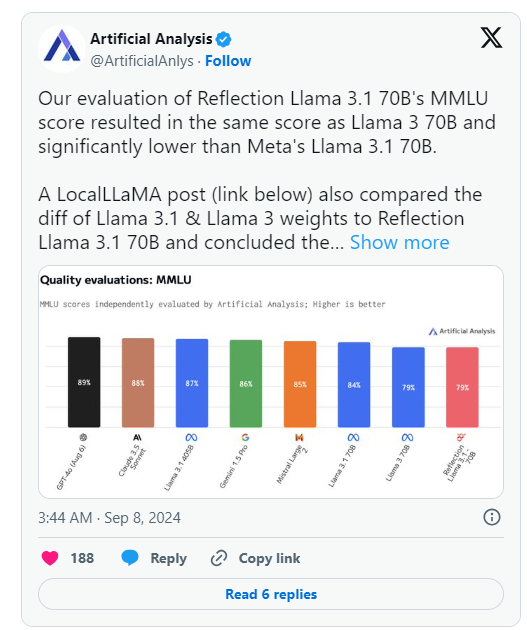

However, the day after HyperWrite's announcement, Artificial Analysis, an organization that specializes in "independent analysis of AI models and hosting providers," posted its own analysis on X. In its evaluation, Reflection Llama 3.1 70B's MMLU (Massive Multitask Language Understanding) score was identical to that of Llama 3 70B but significantly lower than that of Meta's Llama 3.1 70B, a major discrepancy from the results initially published by HyperWrite and Shumer.

Shumer subsequently explained that there had been a problem with the model's weights (the learned parameters of the open-source model) during the upload to Hugging Face (a third-party AI code hosting repository and company), which might have caused the public version to perform worse than HyperWrite's "internal API" version.

Artificial Analysis later stated that it had access to the private API and saw impressive performance, though not at the level initially claimed. Because this test was conducted on a private API, the organization could not independently verify what it was testing.

The organization raised two key questions that cast serious doubt on HyperWrite and Shumer's initial performance claims:

- Why the released version was not the one they tested through the Reflection private API.

- Why the model weights of the version they tested have not been released.

Meanwhile, users in several machine learning and AI communities on Reddit also questioned the claimed performance and origin of Reflection 70B. Some pointed out that, according to model comparisons posted by third parties on GitHub, Reflection 70B appears to be a variant of Llama 3 rather than Llama 3.1, casting further doubt on Shumer and HyperWrite's initial claims.

This led at least one X user, Shin Megami Boson, to publicly accuse Shumer of "fraudulent behavior" in the AI research community on September 8 at 8:07 PM Eastern Time, posting a series of screenshots and other evidence in support.

Others alleged that the model is actually a "wrapper," or application, built on top of Claude 3, the proprietary, closed-source model from competitor Anthropic.

However, other X users came forward to defend Shumer and Reflection 70B, and some posted impressive performance results of their own.

Currently, the AI research community is awaiting Shumer's response to these fraud allegations and updated model weights on Hugging Face.

🚀 Since the release of Reflection 70B, its performance has been questioned, as test results have failed to reproduce the initially claimed performance.

⚙️ HyperWrite's founder explained that a model upload issue led to reduced performance and asked people to watch for the updated version.

👥 Discussion of the model on social media is heated, with both accusations and defenses, leaving a complicated picture.