OpenAI recently launched its much-anticipated AI model, previously codenamed "Strawberry" and now officially named "o1-preview."

OpenAI claims that the new model performs on par with doctoral students on challenging benchmark tasks in physics, chemistry, and biology. However, preliminary test results indicate that it still has a long way to go before it can replace human scientists or programmers.

On social media, many users have shared their interactions with OpenAI o1, showing that the model still performs poorly on basic tasks.

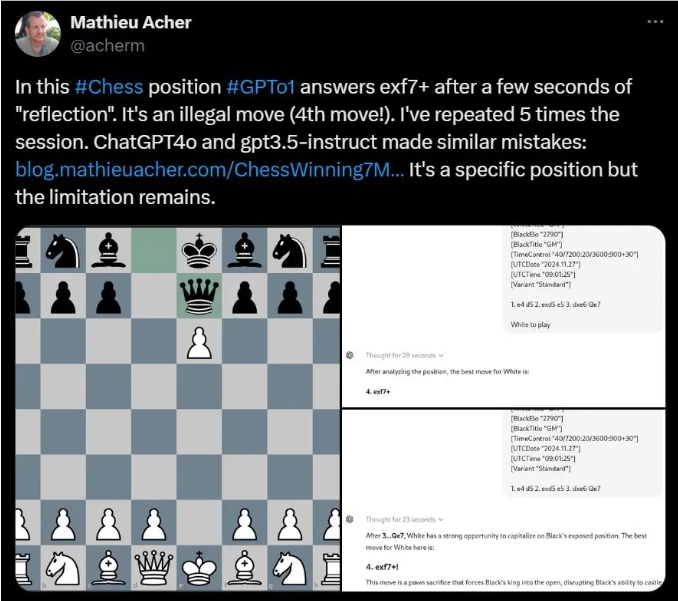

For example, researcher Mathieu Acher from INSA Rennes found that OpenAI o1 frequently proposes illegal moves when solving certain chess puzzles.

Meta AI scientist Colin Fraser pointed out that, on a simple word puzzle about a farmer transporting sheep across a river, the model abandoned the correct answer and produced illogical gibberish instead.

Even on the strawberry-themed logic puzzles that OpenAI used in its own demonstration, the model gave different users different answers, with one user reporting an error rate as high as 75%.

Furthermore, some users reported that the new model frequently miscounted how many times the letter "R" appears in the word "strawberry."
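For reference, the letter-counting task has a single answer that is easy to check deterministically. The short Python sketch below is only an illustration of that check; the helper name `count_letter` is hypothetical and is not taken from OpenAI or from any of the users' posts.

```python
def count_letter(word: str, letter: str) -> int:
    """Return how many times `letter` occurs in `word`, ignoring case."""
    # Lowercase both sides so that "R" and "r" are treated as the same letter.
    return word.lower().count(letter.lower())


# Hypothetical check of the task described above.
r_count = count_letter("strawberry", "R")
print(r_count)  # prints 3
```

The word contains three occurrences of the letter: one in "straw" and two in "berry."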

Although OpenAI stated at launch that this is an early model that lacks features such as web browsing and file uploading, such basic errors are still surprising.

To improve its reasoning, OpenAI introduced a "chain of thought" process in the new model, which sets OpenAI o1 apart from the earlier GPT-4o model. This approach lets the model work through a problem over several passes before arriving at an answer, at the cost of longer response times.

Some users reported that the model took 92 seconds to answer a word puzzle, and the result was still incorrect.

OpenAI research scientist Noam Brown acknowledged that responses are currently slow, but said the company expects future versions to think for even longer and to offer new insights on breakthrough problems.

However, renowned AI critic Gary Marcus remains skeptical, believing that prolonged processing does not necessarily lead to superior reasoning abilities. He emphasizes that despite the continuous development of AI technology, real-world research and experimentation are still indispensable.

It is evident that in practical use, OpenAI's new AI model still disappoints in various aspects, sparking discussions about the future development of AI technology.

Key Points:

🌟 OpenAI recently introduced a new AI model, o1-preview (previously codenamed "Strawberry"), claiming it can match doctoral students on complex tasks.

🤖 Many users found the AI frequently making mistakes on basic tasks, such as proposing illegal chess moves and incorrectly answering simple puzzles.

💬 OpenAI acknowledges that this is an early model, but critics argue that longer thinking does not necessarily improve reasoning abilities, and many fundamental issues remain unresolved.