The Tongyi Qianwen team has open-sourced the Qwen2.5 series of language models, the latest members of the Qwen family, three months after the release of Qwen2. This may be one of the largest open-source releases to date: it includes the general-purpose language model Qwen2.5 as well as two specialized models, Qwen2.5-Coder for programming and Qwen2.5-Math for mathematics.

The Qwen2.5 series models were pre-trained on a dataset of up to 18T tokens. Compared to Qwen2, the new models show significant improvements in knowledge, programming, and mathematics. They support long-text processing, can generate up to 8K tokens, and maintain support for more than 29 languages.

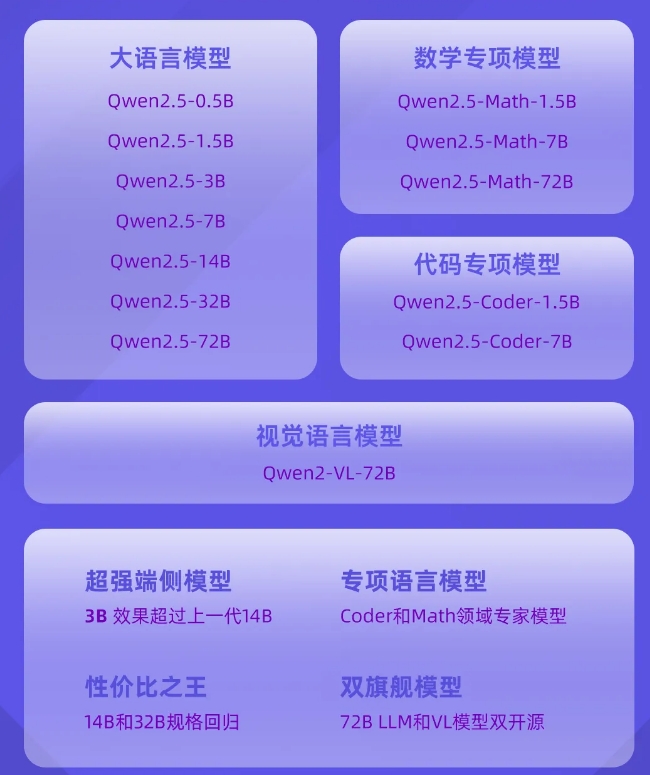

The Qwen2.5 series is released under the Apache 2.0 license and comes in a range of model sizes to meet different application needs. The Tongyi Qianwen team has also open-sourced the Qwen2-VL-72B model, whose performance is reported to be comparable to GPT-4.

The new models show significant improvements in instruction following, long-text generation, understanding structured data, and generating structured outputs. In programming and mathematics in particular, the Qwen2.5-Coder and Qwen2.5-Math models, trained on specialized datasets, demonstrate stronger capabilities in their respective domains.

Try the Qwen2.5 Series Models:

Qwen2.5 Collection: https://modelscope.cn/studios/qwen/Qwen2.5