OpenAI announced on Tuesday that it is rolling out ChatGPT's Advanced Voice Mode (AVM) to more paying customers. The feature is meant to make spoken conversations with ChatGPT feel more natural and fluid. Plus and Teams subscribers get access first, and corporate and educational customers will follow starting next week.

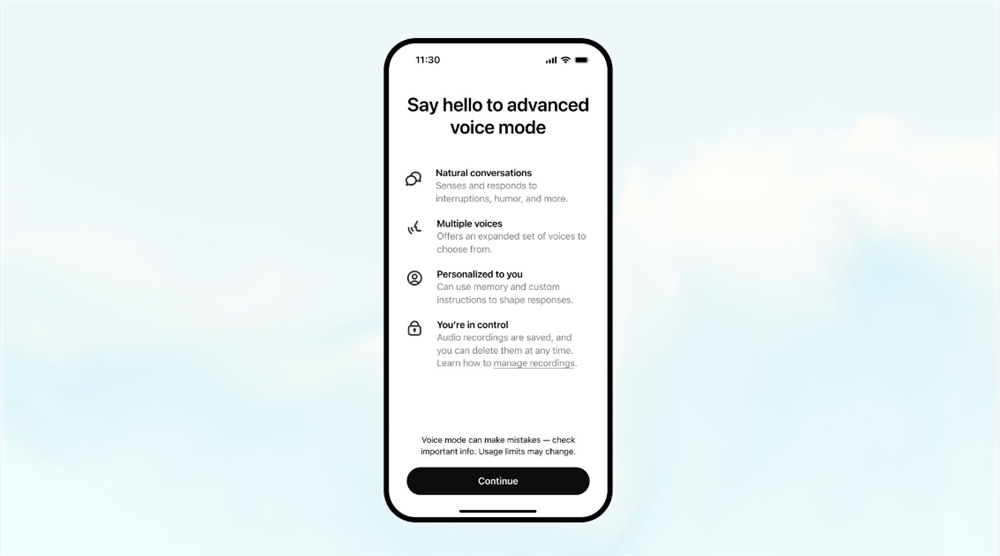

The update also redesigns AVM's interface. The feature is now represented by an animated blue sphere instead of the animated black dots shown previously. When AVM becomes available to a user, a prompt appears next to the voice icon in the ChatGPT app.

The upgrade also adds five new voices: Arbor, Maple, Sol, Spruce, and Vale. They join the existing Breeze, Juniper, Cove, and Ember, bringing ChatGPT's total to nine. All nine names are drawn from nature, which appears to fit the goal of making the ChatGPT experience feel more natural.

The Sky voice, showcased in the spring update, is no longer part of the lineup. Actress Scarlett Johansson threatened legal action, arguing that Sky sounded too similar to her character's voice in the film "Her." OpenAI quickly removed the voice and said it had never intended to imitate Johansson.

OpenAI says it has made significant progress since AVM's limited testing phase. According to the company, ChatGPT's voice features now understand a wider range of accents, and conversations are smoother and faster. Early tests produced occasional glitches, but the company says the situation has greatly improved.

OpenAI has also brought some of ChatGPT's personalization features to AVM. Users can set custom instructions to shape how ChatGPT responds, and the memory feature lets ChatGPT retain details from conversations for future reference.

AVM is not yet available in the European Union, the UK, Switzerland, Iceland, Norway, or Liechtenstein. An OpenAI spokesperson confirmed that users in these regions cannot access the feature for the time being.

Despite these improvements, the video and screen-sharing features first shown in the spring update have not yet shipped. They were designed to let the system process visual and audio input at the same time, for example to answer handwritten math questions or help write code in real time. OpenAI has not announced a release schedule for these multimodal features.