Google today announced an upgraded series of Gemini models, including Gemini-1.5-Pro-002 and Gemini-1.5-Flash-002. The update improves performance and also brings a sizable price cut, which is likely to be welcomed by the AI development community.

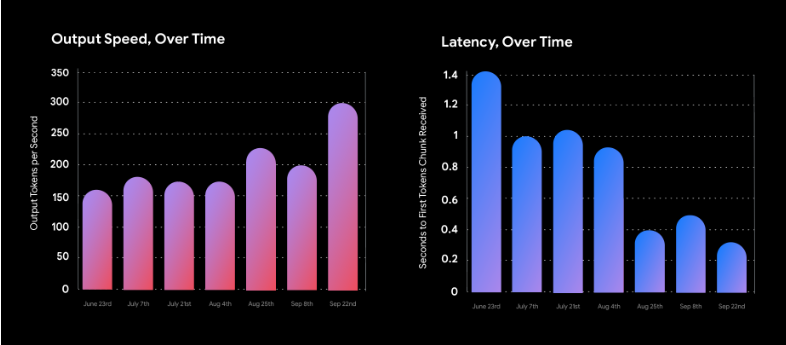

The most eye-catching change is the price reduction: using Gemini 1.5 Pro now costs more than 50% less. Performance has improved as well. Google cites roughly twice the output speed and about one third the latency, while rate limits have doubled for Gemini 1.5 Flash and nearly tripled for 1.5 Pro. For developers, this means faster output and shorter delays at a lower cost.

The new Gemini models improve across the board, with the largest gains in mathematics, long-context processing, and vision tasks. For example, the models can handle PDF documents of more than 1,000 pages, answer questions about code repositories containing more than 10,000 lines of code, and extract useful information from an hour-long video. On the challenging MMLU-Pro benchmark, the new models score about 7% higher. On the MATH and HiddenMath benchmarks, the improvement is about 20%.

Google has also tuned the response quality of the models. The new versions give more helpful and more concise answers while maintaining content safety. In tasks such as summarization, question answering, and information extraction, default output length is about 5% to 20% shorter than before. Because output tokens are billed, shorter answers also reduce usage costs.

For enterprise users, the long-context capability (up to 2 million tokens) and the multimodal capabilities of Gemini 1.5 Pro open up new application scenarios. Starting October 1, 2024, Gemini 1.5 Pro prices for input tokens, output tokens, and incremental cached tokens will be reduced by 64%, 52%, and 64% respectively for prompts under 128K tokens, which should substantially lower the cost of enterprise AI use.
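To make the percentages concrete, here is a minimal sketch of how the quoted reductions would change the bill for one workload. The three reduction figures come from the announcement; the starting prices and the token counts are hypothetical placeholders, not Google's price list, and the function names are invented for this illustration.

```python
# Reductions quoted in the article for Gemini 1.5 Pro, as fractions.
REDUCTIONS = {"input": 0.64, "output": 0.52, "cached": 0.64}


def discounted_price(old_price, token_type):
    """Return the per-million-token price after the quoted reduction."""
    return old_price * (1.0 - REDUCTIONS[token_type])


def workload_cost(prices_per_million, tokens):
    """Total cost of a workload, given per-million prices and token counts."""
    return sum(
        prices_per_million[kind] * count / 1_000_000
        for kind, count in tokens.items()
    )


# HYPOTHETICAL prices in dollars per million tokens, for illustration only.
old_prices = {"input": 10.0, "output": 30.0, "cached": 2.5}
new_prices = {kind: discounted_price(p, kind) for kind, p in old_prices.items()}

# HYPOTHETICAL monthly token usage.
workload = {"input": 2_000_000, "output": 500_000, "cached": 1_000_000}

old_cost = workload_cost(old_prices, workload)
new_cost = workload_cost(new_prices, workload)
saving = 1.0 - new_cost / old_cost

print(f"before: ${old_cost:.2f}, after: ${new_cost:.2f}, saving: {saving:.1%}")
```

The overall saving depends on the mix of token types: a workload dominated by output tokens lands closer to 52%, while an input-heavy or cache-heavy one lands closer to 64%.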

Google has also raised usage limits. The paid-tier rate limit is now 2,000 requests per minute for Gemini 1.5 Flash and 1,000 requests per minute for Gemini 1.5 Pro, giving developers more headroom.
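A client that wants to stay under these limits can throttle itself before sending requests. The following is a rough sketch of a sliding-window limiter, assuming the limit is counted over a rolling 60-second window; the class and its methods are hypothetical helpers written for this example, not part of any Google SDK, and the API's own accounting may differ.

```python
import time
from collections import deque

# Paid-tier limits quoted in the article, in requests per minute.
FLASH_RPM = 2000
PRO_RPM = 1000


class SlidingWindowLimiter:
    """Allow at most max_requests calls in any window_seconds interval."""

    def __init__(self, max_requests, window_seconds=60.0, clock=time.monotonic):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the limiter can be tested
        self._sent = deque()  # timestamps of recent requests, oldest first

    def wait_time(self):
        """Seconds to wait before the next request is allowed (0.0 if none)."""
        now = self.clock()
        # Drop timestamps that have aged out of the window.
        while self._sent and now - self._sent[0] >= self.window_seconds:
            self._sent.popleft()
        if len(self._sent) < self.max_requests:
            return 0.0
        return self.window_seconds - (now - self._sent[0])

    def record(self):
        """Note that a request is being sent now."""
        self._sent.append(self.clock())

    def acquire(self):
        """Block until a request is allowed, then record it."""
        delay = self.wait_time()
        while delay > 0:
            time.sleep(delay)
            delay = self.wait_time()
        self.record()


flash_limiter = SlidingWindowLimiter(FLASH_RPM)
pro_limiter = SlidingWindowLimiter(PRO_RPM)
```

Calling `flash_limiter.acquire()` before each request keeps a single process within the quoted limit. Several processes sharing one API key would need a shared counter, which this sketch does not attempt.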

Safety remains a key focus area for Google. The new models follow user instructions more closely while maintaining safety. Google has also changed the default filter settings: for the new models, the safety filters are no longer applied by default, so developers can choose the configuration that fits their use case.

Google has also introduced an improved version of the experimental Gemini 1.5 Flash-8B model, which performs strongly in text and multimodal use cases. It is available in Google AI Studio and through the Gemini API, giving developers another option.

Gemini Advanced users will soon have access to a chat-optimized version of Gemini-1.5-Pro-002.