In the fast-moving field of artificial intelligence, a multimodal large language model called ORYX offers a more flexible approach to how AI systems perceive the visual world. Developed jointly by researchers from Tsinghua University, Tencent, and Nanyang Technological University, it is something of a shape-shifting "Transformer" for visual processing: it adapts to visual inputs of very different sizes and lengths.

ORYX (called Oryx MLLM in the paper) is designed for on-demand spatial-temporal understanding of images, videos, and multi-view 3D scenes. The goal is for the model not only to recognize what appears in visual content, but also to follow the relationships between objects and how events unfold, closer to the way humans interpret a scene.

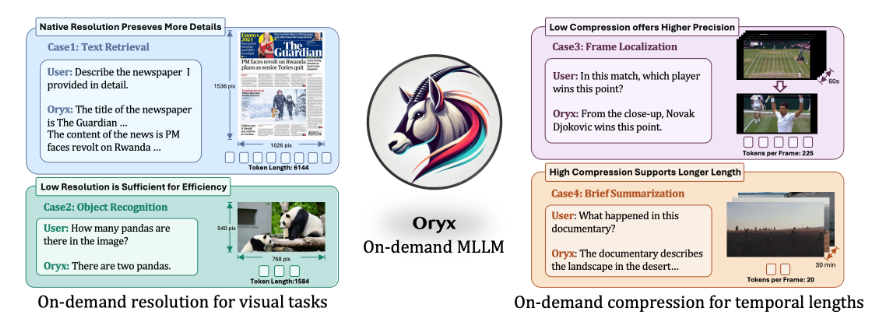

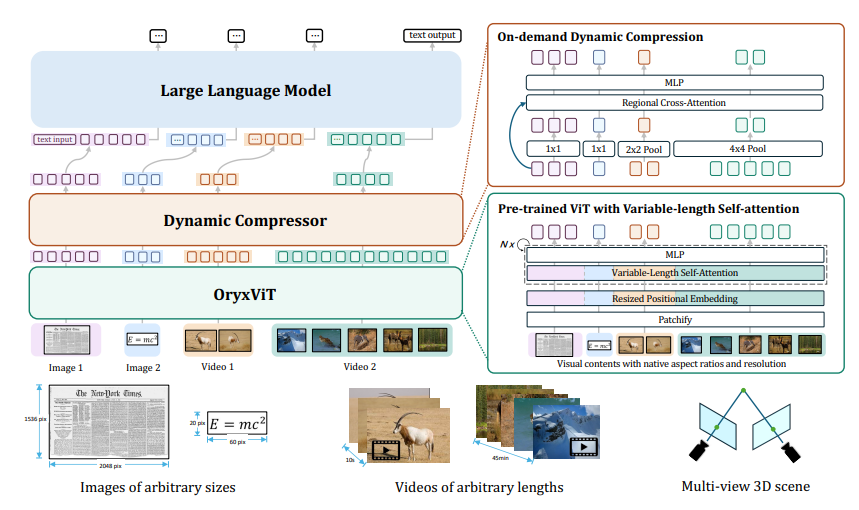

One of the standout features of ORYX is that it can process visual inputs of any resolution. Whether the input is a small, low-resolution old photograph or a high-definition video, ORYX takes it at its native resolution instead of first resizing it to a fixed size. This is the job of OryxViT, a pre-trained visual encoder that converts images of varying resolutions into a unified representation the language model can work with.
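To make "native resolution" more concrete, here is a minimal Python sketch of the bookkeeping involved. It assumes a ViT-style encoder that cuts an image into square patches and emits one token per patch; the patch size of 16 and the helper names `native_patch_grid` and `num_visual_tokens` are hypothetical illustrations, not details taken from OryxViT. The point is only that the token count follows the image's own height and width rather than a fixed square.

```python
import math
from typing import Tuple

PATCH_SIZE = 16  # hypothetical patch size, not OryxViT's actual value


def native_patch_grid(height: int, width: int, patch: int = PATCH_SIZE) -> Tuple[int, int]:
    """Return the (rows, cols) patch grid for an image kept at its native size.

    Each side is conceptually padded up to the next multiple of `patch`,
    so the image never has to be squashed into a fixed square.
    """
    return math.ceil(height / patch), math.ceil(width / patch)


def num_visual_tokens(height: int, width: int, patch: int = PATCH_SIZE) -> int:
    """One token per patch, so the count grows with the image's own area."""
    rows, cols = native_patch_grid(height, width, patch)
    return rows * cols


print(num_visual_tokens(224, 224))    # small thumbnail
print(native_patch_grid(1080, 1920))  # full-HD video frame
```

Under these assumptions, a 224 × 224 thumbnail yields 196 tokens, while a single 1080 × 1920 frame yields a 68 × 120 grid, or 8,160 tokens. Multiplied across many video frames, that growth is why long videos also need some form of compression.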

Even more impressive is ORYX's dynamic compression ability. For lengthy video inputs, it can compress visual information on demand (the paper describes compressing visual tokens anywhere from 1x to 16x) while preserving the key content. It's akin to condensing a weighty tome into a content-rich note card: the core information is retained, and processing becomes far more efficient.
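The sketch below shows one simple way on-demand token compression could work, assuming the visual tokens sit on a 2D grid: averaging each k × k block of neighboring tokens cuts the token count by a factor of k², so k = 1, 2, 4 gives 1x, 4x, and 16x compression. Plain average pooling is a stand-in chosen for illustration; it is not the dynamic compression module described in the paper, and `pool_tokens` is a hypothetical name.

```python
import math
from typing import List

Grid = List[List[List[float]]]  # rows x cols x feature_dim


def pool_tokens(grid: Grid, ratio: int) -> Grid:
    """Average-pool a grid of token vectors to cut the token count by `ratio`.

    `ratio` must be a positive perfect square (1, 4, 16, ...). Each k x k block,
    with k = sqrt(ratio), becomes one token; blocks clipped by the grid edge are
    averaged over the tokens they actually contain.
    """
    if ratio < 1 or math.isqrt(ratio) ** 2 != ratio:
        raise ValueError("ratio must be a positive perfect square, e.g. 1, 4 or 16")
    k = math.isqrt(ratio)
    rows, cols, dim = len(grid), len(grid[0]), len(grid[0][0])
    pooled: Grid = []
    for r0 in range(0, rows, k):
        out_row = []
        for c0 in range(0, cols, k):
            # Collect the tokens in this k x k block (smaller at the edges).
            block = [
                grid[r][c]
                for r in range(r0, min(r0 + k, rows))
                for c in range(c0, min(c0 + k, cols))
            ]
            # Average each feature dimension across the block.
            out_row.append([sum(tok[d] for tok in block) / len(block) for d in range(dim)])
        pooled.append(out_row)
    return pooled


# An 8 x 8 grid of 2-dimensional toy features (64 tokens).
grid = [[[float(r), float(c)] for c in range(8)] for r in range(8)]
for ratio in (1, 4, 16):
    out = pool_tokens(grid, ratio)
    print(f"{ratio}x -> {len(out) * len(out[0])} tokens")
```

Run as-is, this reports 64, 16, and 4 tokens for the three ratios. A learned compressor can do better than plain averaging at keeping fine detail, but the token arithmetic is the same.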

ORYX relies primarily on two core components: the visual encoder OryxViT and the dynamic compression module. The former handles diverse visual inputs, while the latter ensures that large volumes of visual data, such as lengthy videos, can be processed efficiently.
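How the two components might work together can be illustrated with some token-budget arithmetic. This sketch assumes a hypothetical fixed budget of visual tokens for the language model and picks the least aggressive compression ratio (1x, 4x, or 16x) that fits; if even 16x is not enough, it keeps only as many frames as the budget allows. The selection policy, the budget, the 729-tokens-per-frame figure, and the name `plan_visual_tokens` are all illustrative assumptions, not ORYX's actual settings.

```python
import math
from typing import Sequence, Tuple


def plan_visual_tokens(
    num_frames: int,
    tokens_per_frame: int,
    budget: int,
    ratios: Sequence[int] = (1, 4, 16),
) -> Tuple[int, int, int]:
    """Return (ratio, frames_kept, total_tokens) under a hypothetical token budget.

    Tries the mildest compression first; if no ratio fits, uses the strongest
    one and keeps only as many frames as the budget can hold.
    """
    for ratio in sorted(ratios):
        per_frame = math.ceil(tokens_per_frame / ratio)
        if num_frames * per_frame <= budget:
            return ratio, num_frames, num_frames * per_frame
    # Even the strongest ratio overflows the budget: drop frames until it fits.
    ratio = max(ratios)
    per_frame = math.ceil(tokens_per_frame / ratio)
    frames_kept = budget // per_frame
    return ratio, frames_kept, frames_kept * per_frame


BUDGET = 16384          # hypothetical visual-token budget
TOKENS_PER_FRAME = 729  # hypothetical tokens per uncompressed frame

for frames in (1, 64, 1024):
    print(frames, plan_visual_tokens(frames, TOKENS_PER_FRAME, BUDGET))
```

With these made-up numbers, a single image stays uncompressed (729 tokens), a 64-frame clip fits at 4x (11,712 tokens), and a 1,024-frame video falls back to 16x with 356 frames kept (16,376 tokens). The design choice is simply to spend the budget on detail when the input is short and on coverage when it is long.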

In practical applications, ORYX has shown considerable potential. It can understand video content in depth, including objects, plots, and actions, and can also grasp the positions of objects in 3D space and how they relate to one another. This broad visual understanding opens up new possibilities for human-computer interaction, intelligent surveillance, autonomous driving, and more.

It is worth noting that ORYX performed strongly on multiple vision-language benchmarks, particularly those testing spatial and temporal understanding of images, videos, and multi-view 3D data, where it ranked among the leading models.

The innovation of ORYX lies not only in its processing capabilities but also in the new approach to AI visual understanding that it proposes. By processing visual inputs at native resolution and handling long videos through dynamic compression, it offers a combination of flexibility and efficiency that its authors present as a key advantage over existing models.

As the technology continues to advance, ORYX is poised to play an increasingly significant role in AI. It could help machines better understand our visual world, and it may also offer new insights into simulating human cognitive processes.

Paper link: https://arxiv.org/pdf/2409.12961