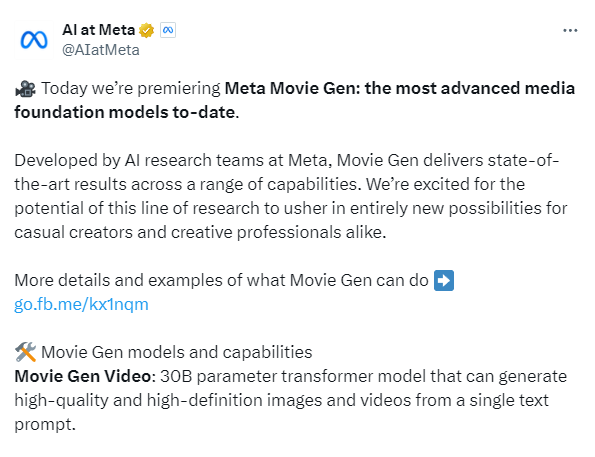

Meta has unveiled Movie Gen, an AI video generation model that has been dubbed "Meta's Sora." From a text prompt, it can generate high-quality videos and add sound effects and music to them. It can also edit existing videos and turn a personal photo into a personalized video.

Meta published a 92-page technical report alongside the announcement, and Movie Gen's capabilities and architecture have drawn wide attention across the industry.

Movie Gen Video: A Revolution in High-Definition Video Generation

Movie Gen consists of two core models: Movie Gen Video and Movie Gen Audio. Movie Gen Video is a Transformer model with 30 billion parameters. From a text prompt, it generates 1080p high-definition videos up to 16 seconds long at 16 frames per second.
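
To put those figures in perspective, the sketch below does the arithmetic for one maximum-length clip. It assumes "1080p" means 1920×1080 pixels and counts uncompressed 8-bit RGB frames. The helper names are hypothetical, and video generators of this kind typically work in a compressed latent space rather than on raw pixels, so this only illustrates the scale of the final output.

```python
# Back-of-the-envelope size of one Movie Gen Video clip, using the figures quoted above.
DURATION_S = 16              # maximum clip length in seconds
FPS = 16                     # frames per second
WIDTH, HEIGHT = 1920, 1080   # assumption: "1080p" taken as 1920x1080 pixels


def frame_count(duration_s: int, fps: int) -> int:
    """Number of frames in a clip of the given length."""
    return duration_s * fps


def raw_rgb_bytes(frames: int, width: int, height: int, channels: int = 3) -> int:
    """Uncompressed 8-bit RGB size in bytes; ignores any latent compression."""
    return frames * width * height * channels


frames = frame_count(DURATION_S, FPS)
print(frames)                       # 256
size = raw_rgb_bytes(frames, WIDTH, HEIGHT)
print(f"{size / 1e9:.2f} GB")       # 1.59 GB
```

At roughly 1.6 GB of raw pixels per clip, it is easy to see why models like this generate in a compressed representation and decode to full resolution at the end.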

Key Features:

Text-to-Video: Create high-quality customized videos with simple text input

Video Editing: Precisely modify the style and content of existing videos

Personalized Videos: Transform personal photos into dynamic videos

Audio Generation: Add sound effects, ambient sound, and background music to videos

Movie Gen Video borrows its Transformer design from Llama 3 and is trained with flow matching, which Meta reports outperforms conventional diffusion training in video accuracy and detail.
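
The snippet below is a minimal NumPy sketch of the generic conditional flow matching objective, not Meta's training code. It assumes a straight-line path from Gaussian noise x0 (at t = 0) to a data sample x1 (at t = 1) with a small noise floor sigma_min. Rather than predicting added noise at many noise levels, as typical diffusion training does, the network regresses the constant velocity along that path. The `velocity_model` callable, the tensor shapes, and the value of `SIGMA_MIN` are illustrative assumptions.

```python
import numpy as np

SIGMA_MIN = 1e-5  # assumption: small noise floor, chosen for illustration only


def flow_matching_pair(x1, x0, t, sigma_min=SIGMA_MIN):
    """Point on the linear noise-to-data path at time t, plus its target velocity.

    x_t = t * x1 + (1 - (1 - sigma_min) * t) * x0, so d(x_t)/dt = x1 - (1 - sigma_min) * x0.
    """
    x_t = t * x1 + (1.0 - (1.0 - sigma_min) * t) * x0
    v_target = x1 - (1.0 - sigma_min) * x0
    return x_t, v_target


def flow_matching_loss(velocity_model, x1, rng):
    """Mean squared error between predicted and target velocity for one batch."""
    x0 = rng.standard_normal(x1.shape)                            # Gaussian noise sample
    t = rng.uniform(size=(x1.shape[0],) + (1,) * (x1.ndim - 1))   # one timestep per example
    x_t, v_target = flow_matching_pair(x1, x0, t)
    v_pred = velocity_model(x_t, t)
    return float(np.mean((v_pred - v_target) ** 2))


# Toy usage: a hypothetical batch of latent tokens and a "model" that predicts zero velocity.
rng = np.random.default_rng(0)
latents = rng.standard_normal((4, 8, 16))
zero_model = lambda x_t, t: np.zeros_like(x_t)
print(flow_matching_loss(zero_model, latents, rng))
```

In a real system `velocity_model` would be the large Transformer, conditioned on the text prompt, and the loss would be minimized by gradient descent over many batches.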

In Meta's demonstrations, videos generated by Movie Gen show high image quality, convincing lighting, and smooth motion. Characters' faces stay stable, animal fur looks realistic, and backgrounds are richly detailed. The audio generation is equally strong: it produces background music that matches the scene's atmosphere and sound effects that line up with the action on screen.

Movie Gen Audio: A Breakthrough in Synchronized Audio Generation

Movie Gen Audio is a 13-billion-parameter model that generates high-quality 48 kHz audio for videos. It produces sound effects synchronized with the on-screen action, creates background music that fits the scene's atmosphere, and can generate continuous audio lasting several minutes.
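
For a sense of scale, the arithmetic below counts audio samples at the quoted 48 kHz rate. It assumes a single mono channel and uses three minutes as a stand-in for "several minutes"; the function names are hypothetical. The last line hints at why synchronization is demanding: at 16 frames per second, each video frame spans 3,000 audio samples (62.5 ms).

```python
SAMPLE_RATE_HZ = 48_000  # 48 kHz, as quoted above; assumes a single mono channel


def num_samples(seconds: float, sample_rate: int = SAMPLE_RATE_HZ) -> int:
    """Audio samples needed to cover the given duration."""
    return round(seconds * sample_rate)


def samples_per_video_frame(fps: int, sample_rate: int = SAMPLE_RATE_HZ) -> float:
    """Audio samples that elapse during one video frame."""
    return sample_rate / fps


print(num_samples(16))               # 768000 samples for a 16-second clip
print(num_samples(3 * 60))           # 8640000 samples for three minutes
print(samples_per_video_frame(16))   # 3000.0 samples per frame at 16 fps
```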

Personalized Videos: Creating Unique Content

Taken together, these features make Movie Gen unusually versatile: users can generate custom videos from a text prompt, edit the style and content of existing footage, or upload a personal photo to create a unique personalized video. These capabilities place Movie Gen among the most advanced media foundation models announced to date.

Meta's demonstration clips range from a stormy mountain scene to a little girl flying a kite on the beach to a sloth wearing pink sunglasses.

Even more astonishing is its ability to transform ordinary photos into dynamic videos, such as turning a photo of Zuckerberg into a fitness video.

Technically, Movie Gen incorporates several innovations:

Transformer architecture based on Llama 3

Flow matching training method to enhance video quality

Multi-stage training process to optimize performance

Llama 3-based prompt rewriting to improve generation quality

Innovative video editing and audio expansion techniques

Although Movie Gen is not yet available to the public (it is expected to open up next year), its announcement has already caused a significant stir in the industry. Some commentators believe that Meta has beaten OpenAI to a Sora-like product and that the move may push other companies to accelerate development of next-generation AI video technology.

Reference: https://x.com/AIatMeta/status/1842188252541043075

Official Website: https://ai.meta.com/research/movie-gen/