Researchers from Mila, Google DeepMind, and Microsoft Research recently investigated the reasoning capabilities of AI language models in depth and found significant shortcomings in smaller, cheaper models when they solve multi-step problems.

The study centers on a test named "Compositional GSM," which combines pairs of grade-school math problems into chains to evaluate how well models solve them in sequence.


The researchers combined two problems from the GSM8K dataset, using the answer to the first problem as a variable in the second. Most models performed far below expectations on these chained reasoning tasks, and the effect was most pronounced in smaller models. Although small models scored similarly to larger models on standard math tests like GSM8K, their reasoning gaps widened sharply on the new combined test, growing to between 2 and 12 times those of larger models.
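The chaining idea described above can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the two questions are invented (they are not taken from GSM8K), the `ChainedProblem` class and the prompt wording are hypothetical, and `reasoning_gap` is one plausible way to quantify the gap (chained accuracy minus the product of the two standalone accuracies), not necessarily the exact metric used in the study.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ChainedProblem:
    """Two word problems where Q2 depends on the answer to Q1 (called X)."""
    q1: str                          # first question, solvable on its own
    q2_template: str                 # second question, phrased in terms of X
    solve_q1: Callable[[], int]      # reference solution for Q1
    solve_q2: Callable[[int], int]   # reference solution for Q2, given X

    def prompt(self) -> str:
        # Hypothetical prompt layout; the real benchmark wording may differ.
        return (
            f"Q1: {self.q1}\n"
            "Let X be the answer to Q1.\n"
            f"Q2: {self.q2_template}"
        )

    def answer(self) -> int:
        # The chained answer is only right if Q1 is solved correctly first.
        return self.solve_q2(self.solve_q1())


def reasoning_gap(acc_q1: float, acc_q2: float, acc_chained: float) -> float:
    """Assumed gap measure: how far chained accuracy falls below what two
    independent, equally reliable steps would predict (negative = worse)."""
    return acc_chained - acc_q1 * acc_q2


# Invented example questions, for illustration only.
example = ChainedProblem(
    q1="A baker fills 4 trays with 6 muffins each. How many muffins are there?",
    q2_template="Sam has X apples and gives away 9. How many apples are left?",
    solve_q1=lambda: 4 * 6,
    solve_q2=lambda x: x - 9,
)

if __name__ == "__main__":
    print(example.prompt())
    print("Reference answer:", example.answer())
    print("Gap:", round(reasoning_gap(0.9, 0.9, 0.5), 2))
```

Under this assumed measure, a model that answers each question correctly 90% of the time in isolation would be expected to get roughly 81% of chained pairs right; a chained accuracy well below that suggests the model is not carrying its reasoning across steps.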

GPT-4o mini, for example, lagged significantly behind GPT-4o on the new test despite being nearly equivalent on the original benchmark. Other model families, such as Gemini and Llama 3, showed similar patterns. The study indicates that while these small models can recognize surface patterns in common tasks, they struggle to apply that knowledge in new contexts.

The research also found weaknesses in models built specifically for mathematics. For instance, Qwen2.5-Math-7B-IT scored over 80% on difficult high school math problems but was less than 60% accurate on chained elementary math problems. For smaller models, instruction tuning significantly improved performance on the original GSM8K test but brought only minimal improvement on the combined test.

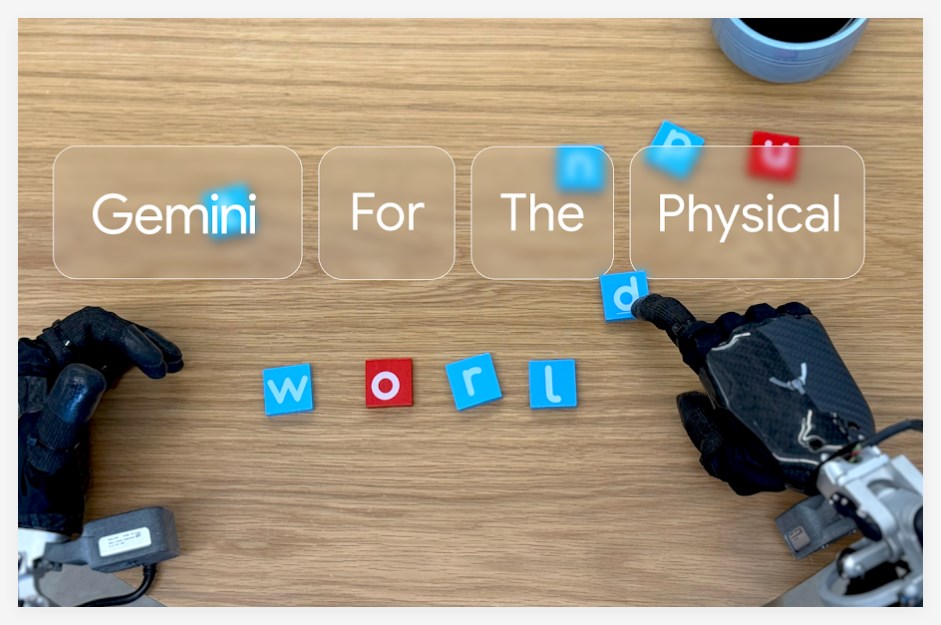

The study is not entirely up to date, as OpenAI's recently launched reasoning-optimized model o1 was not included in the test. Although there are indications that o1 has significantly improved planning capabilities, research shows that humans still have the upper hand in solving math problems, both in speed and in elegance. Google's Gemini model has also shown stronger math abilities after recent updates.

The researchers emphasize that existing evaluation methods may mask systematic differences between these models, leading to overestimates of small models' capabilities. They call for a reevaluation of development strategies for low-cost AI systems and question whether these models have inherent limits in complex reasoning and generalization. The study offers deeper insight into the limitations of AI systems.

Key Points:

📉 Small AI language models perform poorly on chained math problems, with reasoning gaps up to 12 times larger than those of bigger models.

🧮 Even models built specifically for mathematics score below 60% accuracy on chained elementary problems.

🔍 Existing evaluation methods may overestimate the capabilities of small models; their development strategies need reexamination.