In the realm of AI, data is like gold ore: the richer the deposit, the more valuable it is. LLM360 recently released TxT360, an impressive dataset built for large language model training. It gathers high-quality text from a wide range of domains and has been globally deduplicated, yielding 5.7 trillion high-quality tokens and earning the title of "the treasure chest of the data world"!

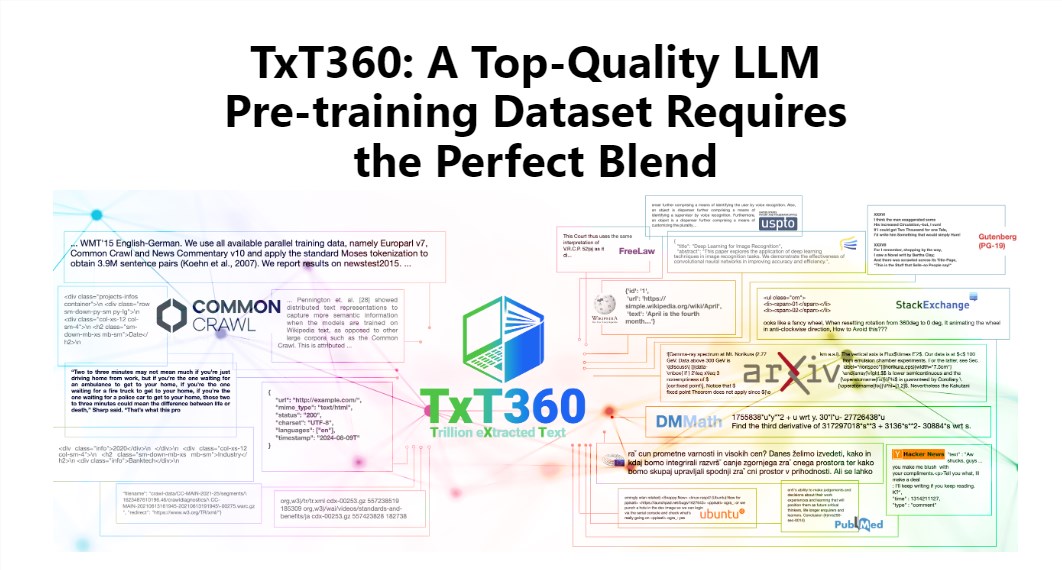

The allure of TxT360 lies in its scale and quality, which, according to LLM360, surpass those of existing datasets such as FineWeb and RedPajama. The dataset distills web text from 99 Common Crawl snapshots and adds 14 curated high-quality sources, such as legal documents and encyclopedias, so its content is both diverse and reliable.

Even cooler, TxT360 comes with a "data weighting recipe" that lets you adjust the weight of each data source to suit your needs. It's like cooking: you mix the ingredients to taste until the dish comes out the way you want.
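To make the idea of re-weighting sources concrete, here is a minimal sketch of a weighted data mix. The source names and weights are invented for illustration and are not the values in the TxT360 recipe; the helper functions are hypothetical as well.

```python
import random

def normalize_weights(weights):
    """Turn arbitrary positive weights into sampling probabilities."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    return {name: w / total for name, w in weights.items()}

def sample_sources(weights, n, seed=0):
    """Pick which source each of the next n documents is drawn from."""
    rng = random.Random(seed)
    probs = normalize_weights(weights)
    names = list(probs)
    return rng.choices(names, weights=[probs[k] for k in names], k=n)

# Hypothetical mix: names and numbers are assumptions, not the TxT360 recipe.
mix = {"common_crawl": 6.0, "wikipedia": 3.0, "legal": 1.0}
probs = normalize_weights(mix)
draws = sample_sources(mix, 10)
```

Raising one weight and re-running shifts the share of documents drawn from that source, which is the kind of adjustment the recipe is meant to make easy.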

Of course, deduplication is also a highlight of TxT360. By deduplicating globally across all of its sources, the dataset greatly reduces the redundant and repeated content a model would otherwise see during training. The project team has also used regular expressions to remove personally identifiable information, such as email addresses and IP addresses, from the documents, which helps protect data privacy.
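The two cleaning steps described above can be sketched in a few lines. This is only an illustration: the regular expressions, the placeholder strings, and the exact-hash deduplication used here are assumptions standing in for whatever patterns and deduplication method the project actually uses.

```python
import hashlib
import re

# Simplified patterns (assumptions): real pipelines handle more edge cases.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
IPV4_RE = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")

def redact_pii(text):
    """Replace email addresses and IPv4 addresses with placeholders."""
    text = EMAIL_RE.sub("<EMAIL>", text)
    return IPV4_RE.sub("<IP>", text)

def dedup_exact(docs):
    """Keep the first copy of each document, comparing normalized text."""
    seen = set()
    unique = []
    for doc in docs:
        # Normalize whitespace and case so trivial variants collide.
        normalized = " ".join(doc.split()).lower()
        key = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        if key not in seen:
            seen.add(key)
            unique.append(doc)
    return unique

cleaned = redact_pii("Mail bob@example.com from 192.168.0.1")
kept = dedup_exact(["Hello  world", "hello world", "other"])
```

Exact hashing only catches identical documents after normalization; catching near-duplicates at web scale requires fuzzy matching techniques that this sketch does not attempt.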

TxT360's design focuses on quality as much as on scale. By combining web data with curated sources, it lets researchers precisely control how the data is used and distributed, as if they held a magic remote control for adjusting the data ratio at will.

TxT360 also holds up well in training. A simple upsampling strategy expands the 5.7 trillion tokens into a dataset exceeding 15 trillion tokens. On a series of key evaluation metrics, models trained on TxT360 outperform those trained on FineWeb, especially on benchmarks such as MMLU and NQ. When TxT360 is combined with code data (such as Stack V2), the learning curve becomes more stable and model performance improves noticeably.
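The arithmetic behind upsampling is simple: each source's token count is multiplied by how many times it is repeated. The sketch below uses made-up token counts and repeat factors purely to show the calculation; they are not the figures behind TxT360's 15 trillion tokens.

```python
def upsampled_tokens(token_counts, repeat_factors):
    """Effective tokens per source after repeating each source."""
    return {
        name: token_counts[name] * repeat_factors.get(name, 1.0)
        for name in token_counts
    }

# Hypothetical numbers for illustration only.
counts = {"web": 4.0e12, "curated": 1.0e12}
factors = {"web": 2.0, "curated": 4.0}

effective = upsampled_tokens(counts, factors)
total = sum(effective.values())
```

In this toy setup, 5 trillion unique tokens become 12 trillion effective tokens, and the curated share rises from a fifth to a third of what the model sees.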

Detailed introduction: https://huggingface.co/spaces/LLM360/TxT360