The AI community has gone wild recently. First ChatGPT drew us into word games, and now something even more impressive has emerged: AI video generation models that are supposedly set to revolutionize the film industry! Today, I'm here to show you what this so-called Hollywood-disrupting technology is really capable of.

VideoGen-Eval, an evaluation project for video generation models, assessed existing models across multiple dimensions: text-video consistency, scene composition, transitions, creativity, stylization, stability, and motion diversity. The results highlight the strengths and weaknesses of each model.

The chart below gives a comprehensive comparison of the major video models:

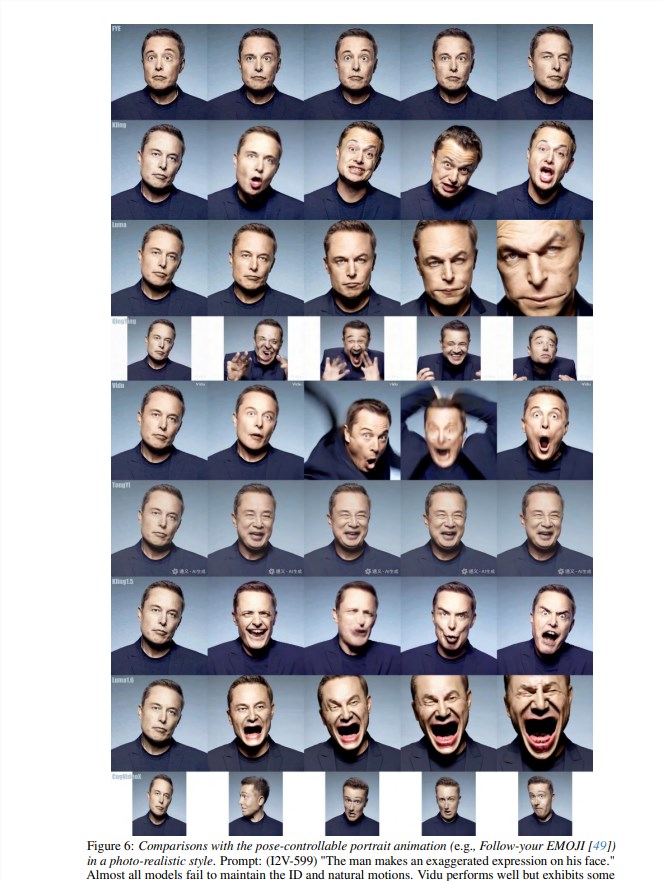

In text-to-video (T2V) generation, Gen-3, Kling v1.5, and Minimax lead the field. Minimax stands out for how closely it follows the text prompt, especially for character expressions, camera movements, multi-shot generation, and subject dynamics.

Gen-3 excels at controlling lighting, textures, and cinematographic techniques, while Kling v1.5 strikes a good balance between visual effects, controllability, and motion capabilities.

Pika1.5 is notable for generating specific video effects such as inflation, melting, and explosions.

Put simply, you feed an AI video generation model text or images, and it produces a video. Imagine a future where you can make movies without hiring actors: just hand the script to AI and wait to cash in.

Dream on! It's not that simple. To understand the true capabilities of these AI models, researchers from the Chinese University of Hong Kong and Tencent initiated a project called "VideoGen-Eval" to assess the models' real-world performance, and they found that the situation is more complicated.

The AI video generation models currently on the market fall into two main camps: closed-source and open-source. Closed-source models, such as Runway's Gen-3 and LumaLabs' Dream Machine, are like the secret recipes of high-tech companies. They typically offer higher video quality and more powerful features, but at a cost. Open-source models, such as Open-Sora and EasyAnimate, are more like martial arts manuals published for all to read. They may not match the quality of closed-source models, but they are free and open to everyone.

The comparison videos were generated from prompts such as the following:

Prompt: Static camera, a glass ball rolls on a smooth tabletop

Prompt: FPV aerial shot, the sunshine shines on the snow-capped mountains, a quiet atmosphere

Prompt: Zooming in hyper-fast to a red rose and showcase the details of its petals

The "VideoGen-Eval" project tested a range of AI video generation models, covering text-to-video (T2V), image-to-video (I2V), and video-to-video (V2V) generation. The results showed that while these models have made significant progress in image quality, natural motion, and adherence to the text description, they are still far from perfect.

On the positive side, AI can now generate simple videos. For example, if you input "A teddy bear walking in a supermarket with a counterclockwise rotating camera," AI can produce a video that matches the description. Sounds magical, right? However, if you ask for a complex scene, such as "A person swimming in a pool with splashing water" or "Three monkeys jumping in a forest with two parrots flying among the trees," AI starts to struggle.

The main reason is that AI's understanding of physical laws, spatial relationships, and object properties is still inadequate. For instance, if you ask for a video of "A glass ball rolling on a table," AI may not know how a glass ball should actually roll under the laws of physics, so the motion in the resulting video looks strange.

Additionally, AI faces significant challenges in handling fast motion, character expressions, and multi-character interactions. For example, if you ask AI to generate a video of a baseball game, the resulting footage might be bizarre, with completely uncoordinated movements and the baseball flying off into the distance.

Scenarios that require imagination and creativity are harder still. For example, if you ask for a video of "A person surrounded by colorful smoke," AI might just produce a mess of colors in which nothing is recognizable. In summary, all current models fall short of perfection and face significant challenges in handling complex movements, multi-object interactions, physical simulation, semantic understanding, and precise control.

To view the complete evaluation video, click here: https://ailab-cvc.github.io/VideoGen-Eval/#text-to-video