Alibaba's Alimama Creative team recently released FLUX.1-Turbo-Alpha, an 8-step distilled LoRA trained on top of FLUX.1-dev.

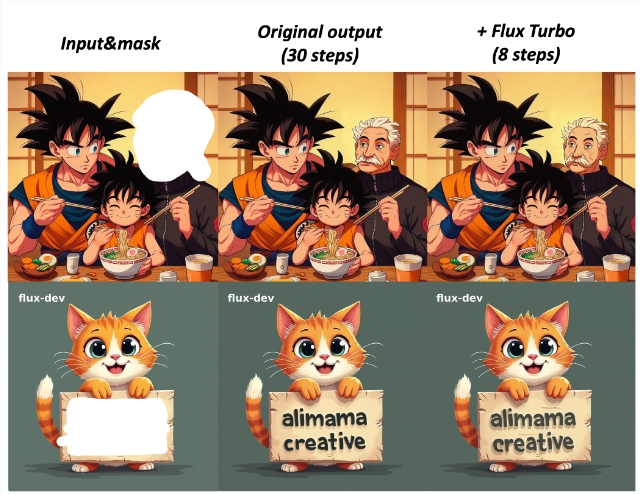

The model uses a multi-head discriminator, which substantially improves distillation quality. It works with a range of FLUX applications, including text-to-image generation and inpainting ControlNets. The team recommends a guidance scale of 3.5 and a LoRA scale of 1, and plans to release versions that need even fewer steps.

FLUX.1-Turbo-Alpha integrates directly with the Diffusers library: you load FLUX.1-dev, attach the LoRA, and generate images in a few lines of code. For example, a prompt can describe a whimsical scene: a smiling sloth wearing a leather jacket, a cowboy hat, a plaid skirt, and a bowtie, standing in front of a sleek Volkswagen van painted with a cityscape. With a few parameter settings, the pipeline produces high-quality 1024x1024 images.
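A minimal sketch of that workflow follows, assuming a recent Diffusers release that ships `FluxPipeline` with LoRA loading, a CUDA GPU with enough memory for FLUX.1-dev in bf16, and access to the gated `black-forest-labs/FLUX.1-dev` repository. The helper names `build_pipeline` and `generate` and the exact prompt wording are illustrative, not part of the release; the step count, guidance scale, LoRA scale, and resolution follow the recommendations above.

```python
# Hypothetical helpers; FluxPipeline, load_lora_weights, fuse_lora and the
# pipeline call arguments are standard Diffusers APIs.
BASE_MODEL_ID = "black-forest-labs/FLUX.1-dev"
ADAPTER_ID = "alimama-creative/FLUX.1-Turbo-Alpha"

# Recommended settings: 8 steps, guidance scale 3.5, LoRA scale 1.
NUM_INFERENCE_STEPS = 8
GUIDANCE_SCALE = 3.5
LORA_SCALE = 1.0

PROMPT = (
    "A DSLR photo of a shiny VW van that has a cityscape painted on it. "
    "A smiling sloth stands in front of the van, wearing a leather jacket, "
    "a cowboy hat, a plaid skirt and a bowtie."
)


def build_pipeline(device: str = "cuda"):
    """Load FLUX.1-dev in bf16 and fuse the Turbo-Alpha LoRA into it."""
    # Imported lazily so the settings above can be inspected without a GPU.
    import torch
    from diffusers import FluxPipeline

    pipe = FluxPipeline.from_pretrained(BASE_MODEL_ID, torch_dtype=torch.bfloat16)
    pipe.to(device)
    pipe.load_lora_weights(ADAPTER_ID)
    # Merge the adapter into the base weights at the recommended scale.
    pipe.fuse_lora(lora_scale=LORA_SCALE)
    return pipe


def generate(pipe, prompt: str = PROMPT, size: int = 1024):
    """Run the 8-step sampler and return the first generated PIL image."""
    result = pipe(
        prompt=prompt,
        guidance_scale=GUIDANCE_SCALE,
        height=size,
        width=size,
        num_inference_steps=NUM_INFERENCE_STEPS,
        max_sequence_length=512,
    )
    return result.images[0]
```

Usage would be `generate(build_pipeline()).save("sloth_van.png")`. Fusing the LoRA avoids the per-step adapter overhead; if you want to switch adapters later, skip `fuse_lora` and keep the LoRA loaded as a separate adapter instead.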

The model also works in ComfyUI, both for fast text-to-image workflows and for faster generation with the inpainting ControlNet. According to the team, the generated images stay close to the original model's output.

The training setup is also worth noting. The model was trained on more than 1 million images from open-source and internal sources, filtered to an aesthetic score above 6.3 and a resolution above 800. The team used adversarial training to improve image quality, adding multiple discriminator heads to every transformer layer. Training fixed the guidance scale at 3.5 and used a timestep shift of 3, bf16 mixed precision, a learning rate of 2e-5, a batch size of 64, and an image size of 1024x1024.
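The reported settings can be collected into a small config, sketched below together with the data filter and the timestep shift. All names here are hypothetical. Two assumptions are worth flagging: "resolution above 800" is read as the shorter image side exceeding 800 pixels, and the shift of 3 is assumed to use the SD3-style flow-matching formula found in Diffusers' `FlowMatchEulerDiscreteScheduler`. The release itself does not spell out either detail.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TurboAlphaTrainConfig:
    """Reported FLUX.1-Turbo-Alpha training settings (field names are hypothetical)."""
    guidance_scale: float = 3.5   # fixed throughout training
    timestep_shift: float = 3.0   # the reported shift of 3
    mixed_precision: str = "bf16"
    learning_rate: float = 2e-5
    batch_size: int = 64
    image_size: int = 1024        # training images at 1024x1024


def keep_sample(aesthetic_score: float, width: int, height: int) -> bool:
    """Data filter: aesthetic score above 6.3 and resolution above 800.

    Assumption: "resolution above 800" means the shorter side exceeds 800 px.
    """
    return aesthetic_score > 6.3 and min(width, height) > 800


def shift_timestep(t: float, shift: float = 3.0) -> float:
    """SD3-style timestep shift for flow matching, with t in [0, 1].

    Assumption: the reported shift follows this formula. With t = 1 as pure
    noise, shift > 1 maps timesteps toward the noisier end of the schedule.
    """
    return shift * t / (1.0 + (shift - 1.0) * t)
```

For example, `shift_timestep(0.25)` returns 0.5 and `shift_timestep(0.5)` returns 0.75, while the endpoints 0 and 1 stay fixed, so uniformly drawn timesteps are pushed toward higher noise levels.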

The release of FLUX.1-Turbo-Alpha marks another milestone for Alimama in image generation and further promotes the adoption and application of AI technology.

Project page: https://huggingface.co/alimama-creative/FLUX.1-Turbo-Alpha

Key points:

🌟 Built on FLUX.1-dev, the model uses 8-step distillation and a multi-head discriminator to improve image quality.

🖼️ Supports text-to-image generation and inpainting ControlNets, making it easy to create a wide variety of scenes.

📊 Trained adversarially on more than 1 million images to improve output quality.